[Samy Kamkar] is a hardware hacker extraordinaire, and this week he’s joining us on Hackaday.io for the Hack Chat.

Every week, we find someone interesting who makes or breaks the electronic paraphernalia all around us. We sit them down and get them to spill the beans on how this stuff works, and how we can get our tools and toys to work for everyone. This is the Hack Chat, and it’s happening this Friday, April 7, at noon PDT (19:00 UTC).

Over the years, [Samy] has shown remarkable skill and brought us some incredible hacks. He defeated chip and PIN security on a debit card with a coil of wire, exploited locked computers with a USB gadget, and has more skills than the entire DEF CON CFP review board combined. If you want to know about security, [Samy] is the guy to talk to.

Here’s How To Take Part:

Our Hack Chats are live community events held in the Hack Chat group messaging on Hackaday.io.

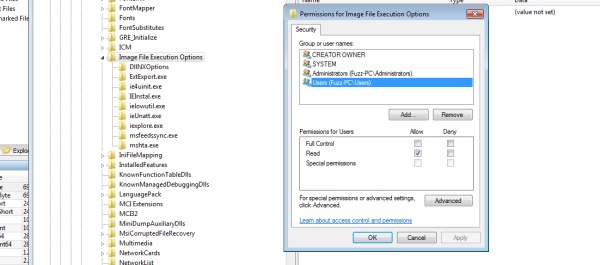

Log into Hackaday.io, visit the Hack Chat project page, and look for the ‘Join this Project’ button. Once you’re part of the project, the button will change to ‘Team Messaging’, which takes you directly to the Hack Chat.

You don’t have to wait until Friday; join whenever you like to see what the community is talking about.

Upcoming Hack Chats

We’ve got a lot on the table when it comes to our Hack Chats. On April 14th we’ll be talking custom silicon with SiFive, and on April 21st we’ll be talking magnets with Nanomagnetics. Making magnets, collecting magnets, playing with magnets: it’ll all be on the Hack Chat.