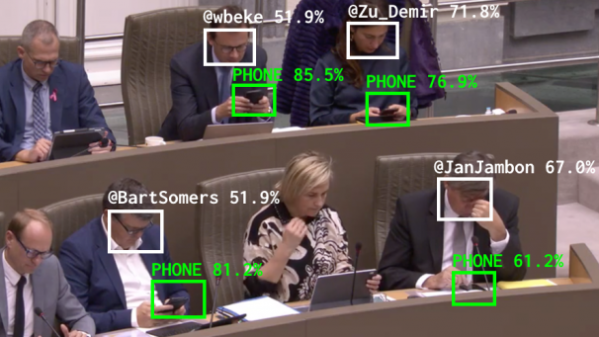

[Dries Depoorter] has a knack for highly technical projects with a solid artistic bent to them, and this piece is no exception. The Flemish Scrollers is a software system that watches livestreamed sessions of the Flemish government, and uses Python and machine learning to identify and highlight politicians who pull out their phones and start scrolling. The results? Pushed out live on Twitter and Instagram, naturally. The project started back in July 2021 and has been dutifully running ever since, so by now we expect that holding one’s phone where the camera can see it is probably considered a rookie mistake.

This project is also a good example of how to handle confidence in results depending on the application. In this case, false negatives (a politician is using a phone, but the software doesn’t detect it) are much more acceptable than false positives (a member gets incorrectly identified, or is wrongly called out for using a mobile device when they are not).
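To make that trade-off concrete, here is a minimal Python sketch of one way to bias a pipeline toward false negatives: publish only when both the phone detection and the face identification clear deliberately high thresholds. This is not taken from the project's code. The `Detection` class, the `should_publish` function, the threshold values, and the sample scores are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, set high on purpose: a missed phone (false
# negative) is cheap, a wrongful call-out (false positive) is not.
PHONE_THRESHOLD = 0.90
FACE_THRESHOLD = 0.95


@dataclass
class Detection:
    """One candidate result from a video frame (illustrative structure)."""
    name: str                # who the face matcher thinks this is
    face_confidence: float   # confidence of the identity match, 0.0 to 1.0
    phone_confidence: float  # confidence that a phone is in use, 0.0 to 1.0


def should_publish(d: Detection,
                   phone_threshold: float = PHONE_THRESHOLD,
                   face_threshold: float = FACE_THRESHOLD) -> bool:
    """Publish only if BOTH confidences clear their thresholds."""
    return (d.phone_confidence >= phone_threshold
            and d.face_confidence >= face_threshold)


# Made-up scores for three candidate detections.
detections = [
    Detection("Politician A", face_confidence=0.98, phone_confidence=0.96),
    Detection("Politician B", face_confidence=0.80, phone_confidence=0.97),
    Detection("Politician C", face_confidence=0.99, phone_confidence=0.60),
]

to_publish = [d.name for d in detections if should_publish(d)]
print(to_publish)
```

Requiring both scores to pass means an uncertain identity or an uncertain phone sighting is silently dropped instead of posted. Raising either threshold trades more missed scrollers for fewer wrongful call-outs.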

Keras, an open-source software library, is used for the object detection and facial recognition (the source is available in the Keras GitHub repository). We’ve seen it used in everything from bat detection to automatic trash sorting, so if you’re interested in machine learning applications, give it a peek.

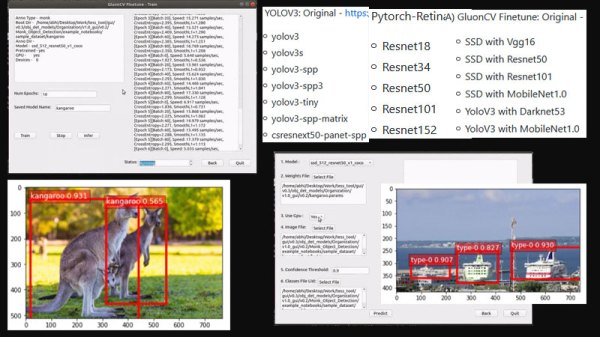

Monk AI is essentially a wrapper for computer vision and deep learning experiments. Written in Python, it helps users fine-tune deep neural networks using transfer learning. Out of the box, it supports Keras and PyTorch, and with a few lines of code you can get started with your very first AI experiment.
