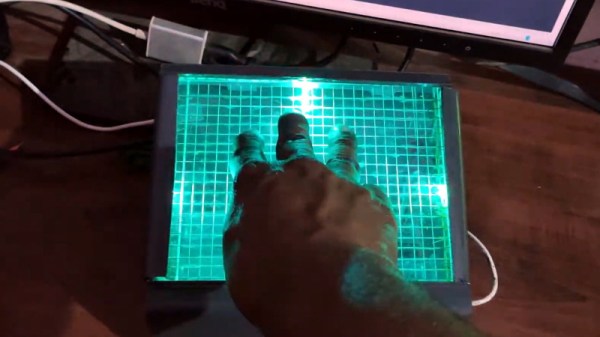

This multi-touch panel built by [thiagesh D] might look like it came from the retro-futuristic worlds of Blade Runner or Alien, but thanks to a detailed build video and a fairly short list of parts, it could be your next weekend project.

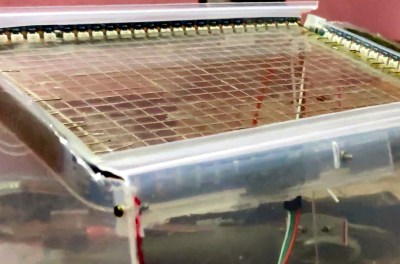

The build starts with a sheet of acrylic, which has a grid pattern etched into it using nothing more exotic than a knife and a ruler, though if you have access to a CNC router, this would be a perfect time to break it out. Bare wires are then laid in the grooves, secured with a healthy application of CA glue, and soldered together into one large conductive array. This array is connected to a capacitive sensor module, so it fires whenever somebody puts a finger on the plastic.
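
The article doesn't name the sensor module or say how its output is read. Assuming a common single-channel capacitive touch module whose digital output pin goes high while a finger is present, and assuming that pin is sampled at a fixed rate, a minimal sketch of turning the raw samples into clean press and release events might look like this. The `touch_events` function and its `stable_count` parameter are hypothetical, and the samples are passed in as a plain list so the logic can be tried without any hardware.

```python
from typing import Iterable, List, Tuple


def touch_events(samples: Iterable[int], stable_count: int = 3) -> List[Tuple[int, str]]:
    """Debounce raw 0/1 readings into (sample_index, "press" | "release") events.

    Assumption (not from the article): each sample is one read of a touch
    module's digital output pin, 1 while touched and 0 otherwise.
    A state change is accepted only after `stable_count` consecutive
    samples agree, which filters out single-sample glitches.
    """
    events: List[Tuple[int, str]] = []
    state = 0       # debounced state; assume the panel starts untouched
    candidate = 0   # raw value currently being counted
    run = 0         # consecutive samples that have matched `candidate`
    for i, raw in enumerate(samples):
        if raw == candidate:
            run += 1
        else:
            candidate = raw
            run = 1
        # Commit the new state once it has been stable long enough
        if candidate != state and run >= stable_count:
            state = candidate
            events.append((i, "press" if state else "release"))
    return events
```

Requiring several agreeing samples trades a few samples of latency for immunity to one-off glitches. On real hardware, the list would be replaced by periodic reads of whatever GPIO pin the module is wired to.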

With RGB LED strips added to the edges, you could stop here and have a very cool-looking illuminated touch-sensitive panel. But ultimately, it would just be a glorified button: there are plenty of interesting applications for such a gadget, but it's not going to be terribly useful attached to your computer.

To turn this into a viable input device, [thiagesh D] uses a Raspberry Pi and its camera module to track the number and position of fingertips from the other side of the acrylic with Python and OpenCV. His code even picks up on specific gestures, such as a three-finger drag, which changes the colors of the LEDs in the video below. Unfortunately, the camera's field of view means the box the panel is mounted on has to be fairly deep, but if that box were recessed into the surface of a desk, we think it could look incredible.
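
The article doesn't show the tracking code, but a common way to count and locate fingertips is to threshold each camera frame into a binary mask, find the connected bright blobs, and take each blob's centroid. The sketch below is a dependency-free stand-in for that step: it uses a small breadth-first flood fill instead of OpenCV's `cv2.threshold`, `cv2.findContours`, and `cv2.moments`, so it runs without a camera or OpenCV installed. The function names, the `min_area` and `min_shift` values, and the rule of classifying a three-finger drag by the mean displacement of the three fingertips are all assumptions for illustration, not [thiagesh D]'s implementation.

```python
from collections import deque
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def find_fingertips(mask: Sequence[Sequence[int]], min_area: int = 4) -> List[Point]:
    """Return (x, y) centroids of 4-connected blobs of 1s with >= min_area pixels.

    Assumption: `mask` is a thresholded camera frame where 1 marks a bright
    fingertip pixel. Blobs are reported in row-major order of discovery.
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    tips: List[Point] = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                # Breadth-first flood fill collects every pixel of one blob
                queue = deque([(x, y)])
                seen[y][x] = True
                pixels = []
                while queue:
                    px, py = queue.popleft()
                    pixels.append((px, py))
                    for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                        if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((nx, ny))
                if len(pixels) >= min_area:  # ignore small specks of noise
                    cx = sum(p[0] for p in pixels) / len(pixels)
                    cy = sum(p[1] for p in pixels) / len(pixels)
                    tips.append((cx, cy))
    return tips


def three_finger_drag(prev: List[Point], curr: List[Point], min_shift: float = 5.0) -> Optional[str]:
    """Classify a three-finger drag between two frames as 'left', 'right', 'up' or 'down'."""
    if len(prev) != 3 or len(curr) != 3:
        return None
    # Compare the mean fingertip position in each frame, which avoids
    # having to match individual fingers from one frame to the next
    dx = sum(p[0] for p in curr) / 3 - sum(p[0] for p in prev) / 3
    dy = sum(p[1] for p in curr) / 3 - sum(p[1] for p in prev) / 3
    if max(abs(dx), abs(dy)) < min_shift:
        return None
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"  # image y grows downward
```

Averaging the three positions keeps the gesture rule simple and tolerant of fingers being detected in a different order between frames; a returned direction could then be used to step the LED color, as the drag does in the video.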

Custom multi-touch panels have been a favorite project of hackers for years now, and we've got examples going all the way back to the old black-and-white days. But larger and more modern incarnations like this one have the potential to change how we interface with technology on a daily basis.
