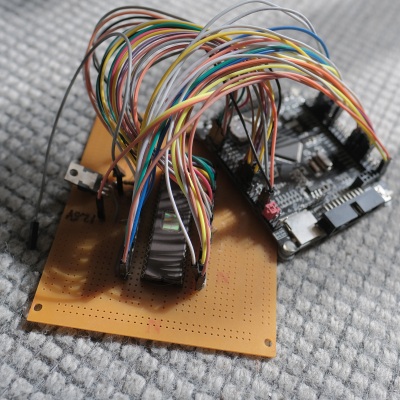

It’s with a tinge of sadness that we and many others reported on the recent move by Zilog to end-of-life the original Z80 8-bit microprocessor. This was the part that gave so many engineers and programmers their first introduction to a computer of their own. Even though it is now outdated, its presence has been a constant over the decades. Zilog will continue to sell a Z80 derivative in the form of their eZ80, but that’s not the only place the core can be found on silicon. [Rejunity] is bringing us an open-source Z80 core on real hardware, thanks of course to the TinyTapeout ASIC project. The classic core will occupy two tiles on the upcoming TinyTapeout 7. While perhaps it’s not quite the same as a real 40-pin DIP in your hands, it’s still early days, as it is for all of the open-source custom silicon world.

The core in question is derived from the TV80 open-source core. We would be very interested to compare it, once fabricated on TinyTapeout’s 130 nm process, with an original chip from a much larger 1970s process. While it’s true that this project is more of an interesting demonstration of TinyTapeout than a practical everyday Z80, it does at least serve as a reminder that there may come a point at which a run of real open-source Z80s or other chips becomes possible.

This isn’t the first time we’ve featured a TinyTapeout project.