Looking for a first project in a relatively new language that’ll stretch your abilities? [Ron] was, so he hacked a commercially available drone and opened up a lot of its functionality, while writing the client software in Go.

The drone is a DJI Tello, which packs some impressive hardware, including a 14-core Intel processor and excellent video processing abilities. There’s also a vibrant community and a lot of support, making it an ideal platform for a project like this. It communicates with a base station over WiFi, and using tools like Wireshark, [Ron] was able to decipher much of the traffic and create a whole new driver for the drone. While the drone can still be flown in the traditional way, users can also write programs to control it.

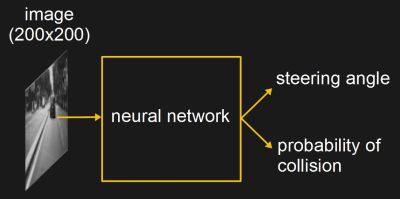

The project is both an impressive feat of reverse engineering an inexpensive drone and a fun example of programming in Go. Drones are fun and exciting, which has made them a popular platform for hacking, from extending their range to developing AI.