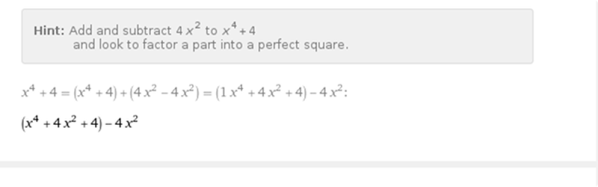

The bane of math students everywhere is the teacher asking you to show your work. If you grew up with computers as a normal part of schoolwork, that might annoy you, since a lot of tools just give you the answer. We aren’t suggesting you cheat on your homework, but we did notice that Wolfram Alpha now shows more of its work when it solves many common math problems.

Granted, the site has always shown its work on some problems. However, a recent update shows more intermediate steps and presents more kinds of problems in a step-by-step format. You can try the examples, but be aware that for general use you need to upgrade to Pro (about $6 a month, or less if you are a student or teacher).