Despite growing demand for laptop-tablet hybrid computers from producers like Lenovo, HP, and Microsoft, Apple has stubbornly stayed out of this arena, even though it arguably offers the best hardware and user experiences within the separate domains of laptop and tablet. Charitably, one could speculate that this is because Apple’s design philosophy mandates keeping the user experiences of each separate, although a more cynical take might be that they can sell more products if they don’t put all the features their users want into a single device. Either way, for now it seems that if you want a touchscreen MacBook you’ll have to build one yourself, like the MacPad from [Federico].

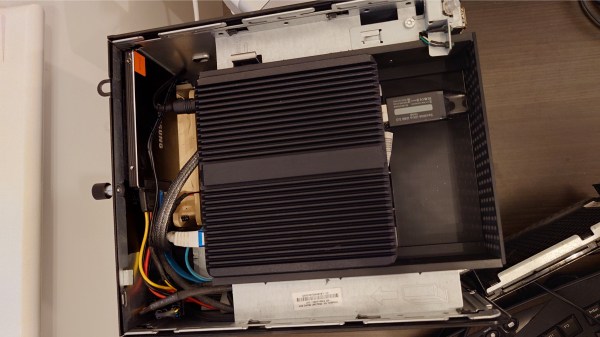

This project started as a way to provide a high-quality keyboard and mouse for an Apple Vision Pro, whose built-in augmented reality keyboard is really only up to the task of occasionally inputting a password or short string. For more regular computing, [Federico] grabbed a headless MacBook, one with its screen removed. This worked well enough to trigger another line of thought: if it worked for the Vision Pro, it might just work for an iPad Pro as well. Using Apple tools like Sidecar makes this almost trivially easy from a software perspective, although setting up the iPad as the MacBook’s only screen, rather than an auxiliary one, did take a little more customization than normal.

The build goes beyond the software side of setting this up, though. It also includes a custom magnetic mount so that the iPad can be removed at will from the MacBook, freeing the iPad for times when a tablet is the better tool and the MacBook for when it needs to pull keyboard duty for the Vision Pro. Perhaps the only downsides are that this only works seamlessly when both devices are connected to the same wireless network, and that setting up a MacBook with no built-in screen takes a bit of extra effort. But with everything online and working, it’s nearly the perfect Apple 2-in-1 that users keep asking for. If you’re concerned about the cost of paying for an iPad Pro and a MacBook just to get a touchscreen, though, take a look at this device which adds a touchscreen for only about a dollar.

Thanks to [Stuart] for the tip!