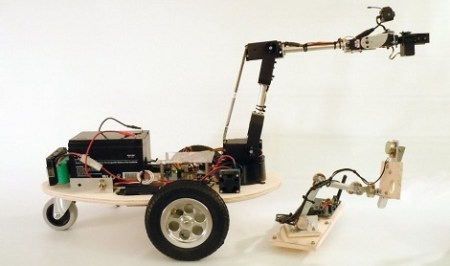

[Dave] posted some pictures and videos of his ‘Nuntius’ robot on the Propeller forums. The pictures alone show an impressive build, but to really appreciate [Dave]’s skill, check out the YouTube demo.

The controller is a Propeller protoboard paired with an arm made from pieces of angle aluminum fastened together. Pots sit at each joint of the remote’s arm, so the robot’s arm can mirror whatever shape the remote is bent into. We usually see Armatron bots controlled by a computer or, in the rare case of human control, a mouse. [Dave]’s build just might be one of the first remote manipulator builds we’ve seen on Hack A Day.
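[Dave] hasn’t posted his firmware here, so the snippet below is only a rough sketch of the general mirroring idea, not his code. It assumes each remote joint pot is read as a raw number, and that a hypothetical per-joint calibration (the `JointCalibration` class and the 0–1023 range in the example are made up for illustration) maps that reading linearly onto the matching robot joint’s angle:

```python
from dataclasses import dataclass


@dataclass
class JointCalibration:
    """Hypothetical per-joint calibration: raw pot readings at each end of
    travel and the robot joint angles (degrees) they should correspond to."""
    raw_min: int
    raw_max: int
    angle_min: float
    angle_max: float


def pot_to_angle(raw: int, cal: JointCalibration) -> float:
    """Linearly map a raw pot reading to a joint angle, clamped to the calibrated range."""
    span = cal.raw_max - cal.raw_min
    if span == 0:
        raise ValueError("raw_min and raw_max must differ")
    frac = (raw - cal.raw_min) / span
    frac = min(max(frac, 0.0), 1.0)  # ignore readings past either end of travel
    return cal.angle_min + frac * (cal.angle_max - cal.angle_min)


def mirror_pose(readings, cals):
    """Convert one reading per remote joint into target angles for the robot's matching joints."""
    if len(readings) != len(cals):
        raise ValueError("need exactly one calibration per joint")
    return [pot_to_angle(r, c) for r, c in zip(readings, cals)]


# Example: three joints, each assumed to read 0-1023 over 0-180 degrees of travel.
cal = JointCalibration(raw_min=0, raw_max=1023, angle_min=0.0, angle_max=180.0)
targets = mirror_pose([0, 1023, 2000], [cal, cal, cal])
```

Because the calibration stores both ends of travel, a pot mounted “backwards” can be handled simply by swapping `raw_min` and `raw_max`. A real build would still need something to drive each robot joint toward its target angle, whether hobby servos or motors under a feedback loop.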

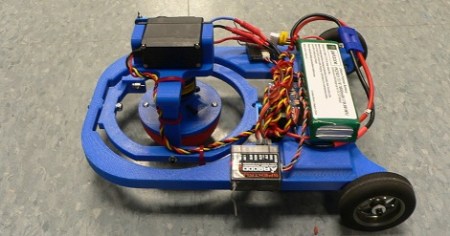
