If you know anything about how films are made, you have probably heard of the “green screen.” The technique is also known as chroma key compositing, and it’s generally used to merge two images or videos together based on color hues. Usually you see an actor filmed in front of a green background. Using video editing software, the editor can then replace that specific green color with another video clip. This makes it look like the actor is in a completely different environment.
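To make the replacement step concrete, here is a minimal sketch of a digital chroma key in plain Python. It is only an illustration, not how any particular editing package does it: the "green dominates red and blue" rule, the `margin` value, and the tiny sample images are all assumptions chosen for the example.

```python
# Minimal chroma key sketch on tiny RGB "images" (lists of rows of (r, g, b) tuples).
# The dominance rule and the default `margin` are illustrative assumptions.

def is_key_green(pixel, margin=60):
    """Return True when green exceeds both red and blue by at least `margin`."""
    r, g, b = pixel
    return g - max(r, b) >= margin


def chroma_key(foreground, background, margin=60):
    """Replace key-green foreground pixels with the matching background pixel."""
    return [
        [bg if is_key_green(fg, margin) else fg for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


# Hypothetical sample colors for the demonstration.
GREEN = (0, 255, 0)      # the backdrop
SKIN = (224, 172, 105)   # stands in for the actor
SKY = (90, 160, 255)     # the replacement background

foreground = [
    [GREEN, SKIN, GREEN],
    [GREEN, SKIN, SKIN],
]
background = [[SKY] * 3 for _ in range(2)]

composite = chroma_key(foreground, background)
for row in composite:
    print(row)
```

Every backdrop pixel is swapped for sky while the actor's pixels pass through untouched. Real footage has shadows, spill, and soft edges, so a hard yes-or-no test like this one would leave a visible fringe.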

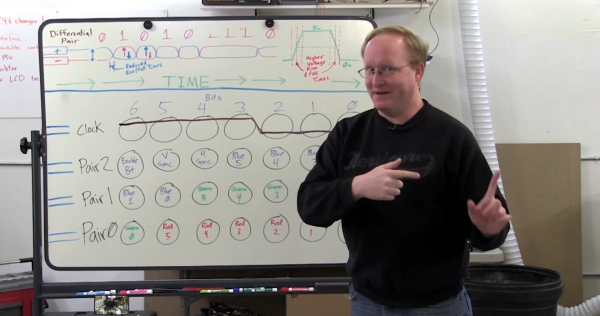

It’s no surprise that with computers, this is a very simple task. Any basic video editing software will include a chroma key function, but have you ever wondered how this was accomplished before computers made it so simple? [Tom Scott] posted a video to explain exactly that.

In the early days of film, the studio could film the actor against an entirely black background. Then, they would copy the film over and over at higher and higher contrast until they ended up with a black background and a white silhouette of the actor. This film could be used as a matte. Working with an optical printer, the studio could then perform a double exposure to combine film of a background with the film of the actor. You can imagine that this was a much more cumbersome process than making a few mouse clicks.
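The matte idea translates neatly into a few lines of code. The sketch below is a digital analogy under stated assumptions, not a model of the optical process itself: frames are small grids of grayscale values from 0 to 255, and the `gain`, `pivot`, and `passes` values are made up for illustration.

```python
# Digital analogy of the black-background matte process, using grayscale 0..255.
# The gain, pivot, pass count, and sample frames are illustrative assumptions.

def boost_contrast(frame, gain=4.0, pivot=40):
    """One high-contrast copy: push values away from `pivot`, clamped to 0..255."""
    return [
        [max(0, min(255, round(pivot + (v - pivot) * gain))) for v in row]
        for row in frame
    ]


def make_matte(frame, passes=3):
    """Copy the frame repeatedly at high contrast until it is black and white."""
    for _ in range(passes):
        frame = boost_contrast(frame)
    return frame


def combine(actor, background, matte):
    """Keep the actor where the matte is white, the background where it is black."""
    return [
        [(a * m + b * (255 - m)) // 255 for a, b, m in zip(a_row, b_row, m_row)]
        for a_row, b_row, m_row in zip(actor, background, matte)
    ]


# Actor shot against near-black: low values are backdrop, higher values are the actor.
actor = [
    [5, 120, 10],
    [0, 200, 90],
]
background = [
    [50, 60, 70],
    [80, 90, 100],
]

matte = make_matte(actor)
result = combine(actor, background, matte)
print(matte)
print(result)
```

After three passes the matte holds only 0 and 255, so `combine` simply picks the actor or the background for each position. The obvious weakness carries over from the film version: anything dark on the actor falls below the pivot and gets treated as backdrop.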

For the green screen effect, studios could actually use specialized optical filters. They could film the actor through a filter that would ignore a specific wavelength of green light. The resulting matte could then be combined with the footage of the actor and the background film using the optical printer. It’s very similar to the older style with the black background.

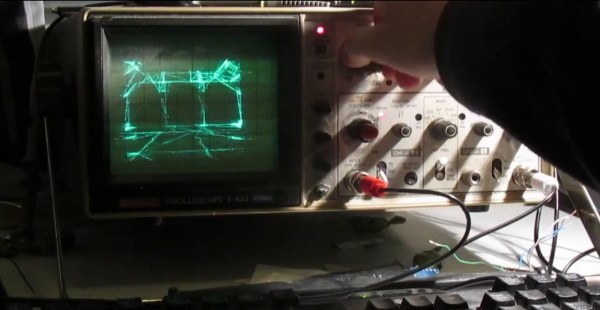

Electronic analog video has some other interesting tricks to perform the same basic effect. [Tom] explains that the analog signal contained information about the various colors that needed to be displayed on the screen. Electronic circuits were built that could watch for a specific color (green) and replace the signal with one from the background video. Studios even went so far as to record both the actor and a model simultaneously, using two cameras that were mechanically linked together to make the same movements. The two signals could then be run through this special circuit and the combined image recorded at the same time.

There are a few other examples in the video, and the effects that [Tom] uses to describe these old techniques go a long way to help understand the concepts. It’s crazy to think of how complicated this process can be, when nowadays we can do it in minutes with the computers we already have in our homes.