Carnival games are simple to pick up, designed to provide a little bit of entertainment in exchange for your game ticket. Given that the main point is just to have some silly fun with your friends, most game vendors have little reason to innovate. But we are people who play with microcontrollers and gratuitous LEDs. We look at these games and imagine bringing them into the 21st century. Well, there’s good news: the people of Two Bit Circus have been working along these lines, and they’re getting ready to invite the whole world to come and play with them.

“Interactive Entertainment” is how Two Bit Circus describes what they do, employing the kinds of technology that frequent the pages of Hackaday. But while we love hacks for their own sake here, Two Bit Circus applies them to amuse and engage everyone regardless of their technical knowledge. For the past few years they’ve been building on behalf of others for events like trade shows and private parties. Then they worked to put together their own event, a STEAM Carnival to spread love of technology, art, and fun. The problem? These events are only temporary and reach a limited audience, hence the desire for a permanent facility open to the public. Your Hackaday scribe had the opportunity to take a peek as they were putting on the finishing touches.


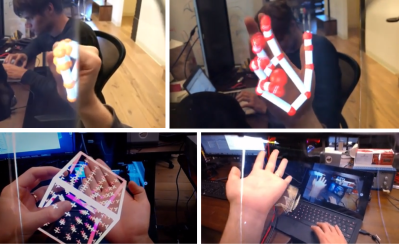

Now that we’ve got you excited, let’s mention what the North Star is not: it’s not a consumer device. Leap Motion’s idea here was to create a platform for developing Augmented Reality experiences, specifically the user interface and interaction aspects. To that end, they built the best head-mounted display they could on a budget. The company started with standard 5.5″ cell phone displays, which made for a device with incredibly high resolution but a low framerate (50 Hz). It was also large and completely impractical.
