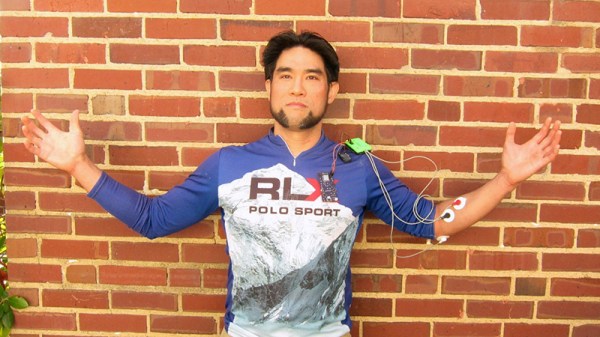

SHE BON (that’s the French bon, or “good”) is an ambitious project by [Sarah Petkus] that consists of a series of wearable electronic and mechanical elements which all come together as a system for a single purpose: to sense and indicate female arousal. As a proponent of increased discussion and openness around the topic of sexuality, [Sarah]’s goal is to take something hidden and turn it into something obvious and overt, while giving it a certain artful flair in the process.

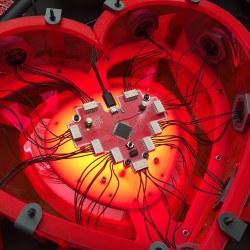

The core of the system is a wearable backpack in the shape of a heart, to which all other sensors and feedback elements connect. A lot of thought has gone into the design of the system, ensuring that the different modules have an artistic angle to their feedback while also being comfortable to actually wear, and [Sarah] seems to have a knack for slick design. Some of the elements are complete and some are still in progress, but the system is well documented with a clear vision for the whole. It’s an unusual and fascinating project, and was one of the finalists selected in the Human Computer Interface portion of the 2018 Hackaday Prize. Speaking of which, the Musical Instrument Challenge is underway, so be sure to check it out!
