We’re all pretty familiar with AI’s ability to create realistic-looking images of people who don’t exist, but here’s an unusual use of that technology for a different purpose: masking people’s identities without altering the substance of the image itself. The result is that the photo’s content and “purpose” (for lack of a better term) remain unchanged, while it becomes impossible to identify the actual person in it. This invites some interesting privacy-related applications.

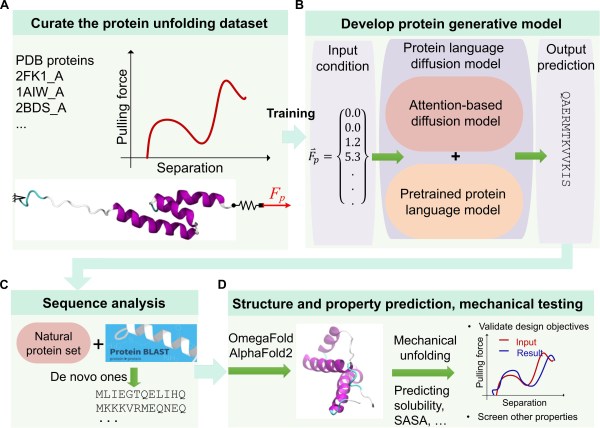

The paper for Face Anonymization Made Simple has all the details, but the method boils down to using diffusion models to take an input image, automatically pick out identity-related features, and alter them in a way that looks more or less natural. For this purpose, “identity-related features” essentially means key parts of a human face. Other elements of the photo (background, expression, pose, clothing) are left unchanged. The concept has been explored before, but the researchers show that this versatile method is both simpler and better-performing than others.

Diffusion models are at the heart of AI image generators like Stable Diffusion. The fact that they can be run locally on personal hardware has opened the door to all kinds of interesting experimentation, like this haunted mirror and other interactive projects. Forget tweaking dull sliders like “brightness” and “contrast” for an image. How about altering the level of “moss”, “fire”, or “cookie” instead?