Students from the ECE4760 course at Cornell have been working on a spatial audio system built into a hat. The project, from [Anishka Raina], [Arnav Shah], and [Yoon Kang], enables the wearer to get a sense of the direction and proximity of nearby objects through audio feedback.

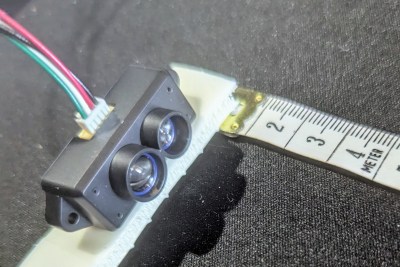

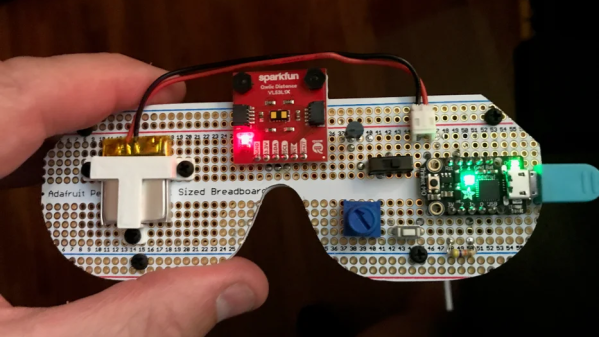

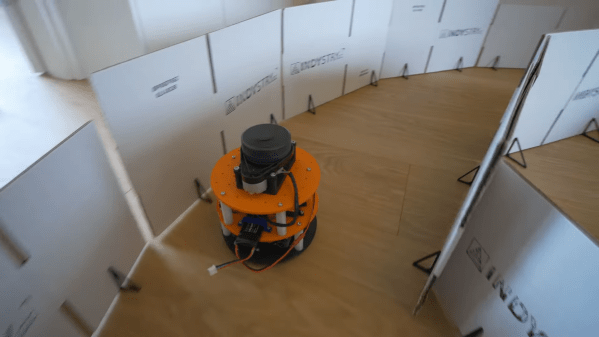

The heart of the build is a Raspberry Pi Pico. It’s paired with a TF-Luna LiDAR sensor, which measures the range to objects around the wearer. The sensor is mounted on a hat, so the wearer can pan it from side to side to scan the immediate area for obstacles. Head tracking wasn’t implemented in the project, so the wearer instead uses a potentiometer to tell the microcontroller which direction they are facing as they scan. The Pi Pico then takes the LiDAR scan data, determines the range and location of any nearby objects, and creates a stereo audio signal that tells the wearer how close those objects are and in which direction they lie, using a spatial audio technique called interaural time difference (ITD).
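For a feel of how ITD works, here is a minimal Python sketch. It is not the students' firmware, which runs on the Pico and isn't reproduced here. It uses the common Woodworth spherical-head approximation, and the head radius, sample rate, and function names are all assumptions chosen for illustration. The idea is simply that a sound off to one side reaches the far ear slightly later, so delaying one stereo channel by a fraction of a millisecond makes the sound seem to come from that direction.

```python
import math

# Illustrative constants (assumptions, not taken from the project).
HEAD_RADIUS_M = 0.0875        # commonly used average head radius
SPEED_OF_SOUND_M_S = 343.0    # in air at roughly room temperature
SAMPLE_RATE_HZ = 44_100       # assumed audio sample rate


def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth approximation of interaural time difference.

    azimuth_deg: 0 is straight ahead, positive is to the wearer's right.
    Input is clamped to [-90, 90]. A positive result means the sound
    reaches the right ear first.
    """
    clamped = max(-90.0, min(90.0, azimuth_deg))
    theta = math.radians(clamped)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def channel_delays(azimuth_deg: float) -> tuple[int, int]:
    """Return (left_delay, right_delay) in whole samples.

    Only the ear farther from the source is delayed.
    """
    delay = round(abs(itd_seconds(azimuth_deg)) * SAMPLE_RATE_HZ)
    if azimuth_deg > 0:
        return (delay, 0)   # source on the right: left ear hears it later
    return (0, delay)       # source on the left (or dead ahead, delay 0)


if __name__ == "__main__":
    for angle in (-90, -45, 0, 45, 90):
        left, right = channel_delays(angle)
        print(f"{angle:+4d} deg -> left {left} samples, right {right} samples")
```

With these assumed values, an object directly to one side gives a delay of roughly 0.66 ms, which is in the range the human auditory system uses to localize sound. In a setup like the one described, the potentiometer reading would stand in for `azimuth_deg`.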

It’s a neat build that provides some physical sensory augmentation via the human auditory system. We’ve featured similar projects before, too.