Our smartphones are incredibly powerful computers in their own right, yet we don’t often see them directly integrated into projects. Intel’s Intelligent Systems Lab has done exactly that with the release of OpenBot, an open-source, smartphone-based self-driving robot.

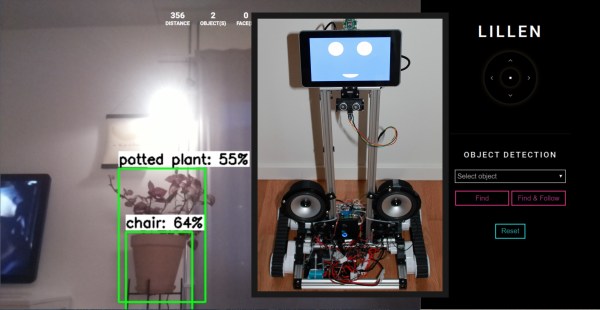

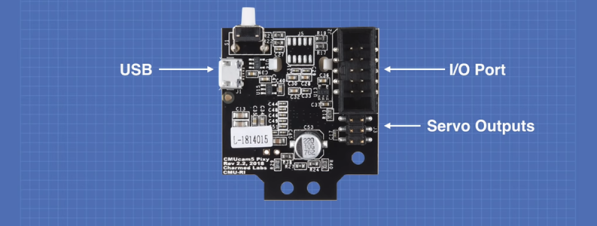

Most of the magic happens on the smartphone, which runs an app built on TensorFlow Lite. The app combines the phone’s camera and onboard sensors with data from the ultrasonic sensors and wheel encoders on the robot. The robot itself is relatively simple: four geared DC motors and their motor drivers are wired to an Arduino Nano, which interfaces with an Android phone over serial.
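To get a feel for how a phone and a microcontroller might talk over a serial link like this, here is a minimal Python sketch of a line-based drive command. The message format (`c<left>,<right>` followed by a newline), the function names, and the -255 to 255 speed range are all our own assumptions for illustration, not OpenBot’s documented protocol.

```python
# Hypothetical line-based serial message format, for illustration only.
# The "c<left>,<right>\n" layout and the speed range are assumptions.

MAX_SPEED = 255  # assumed PWM-style limit for each side of the robot


def encode_drive_command(left: int, right: int) -> bytes:
    """Clamp both speeds to the assumed range and encode them as one ASCII line."""
    left = max(-MAX_SPEED, min(MAX_SPEED, left))
    right = max(-MAX_SPEED, min(MAX_SPEED, right))
    return f"c{left},{right}\n".encode("ascii")


def decode_drive_command(line: bytes) -> tuple[int, int]:
    """Parse one line back into (left, right), as the microcontroller side would."""
    text = line.decode("ascii").strip()
    if not text.startswith("c"):
        raise ValueError(f"not a drive command: {text!r}")
    left_str, right_str = text[1:].split(",")
    return int(left_str), int(right_str)
```

A plain-text format like this is easy to debug with a serial monitor, which is one reason hobby projects often favor it over a packed binary protocol.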

The app created by the Intel ISL team comes preloaded with three AI models, covering person following and two different modes of autonomous navigation. By connecting a Bluetooth controller to the smartphone and driving the robot around manually while it collects data, you can train a custom autonomous driving policy to suit your specific environment.
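Training a driving policy from manual driving boils down to pairing what the camera saw with what the driver did at that moment. As a rough sketch of that idea, the snippet below matches each camera frame timestamp with the most recent controller command. The function name, the data layout, and the "latest command at or before the frame" rule are assumptions we made for illustration; OpenBot’s actual data pipeline may work differently.

```python
from bisect import bisect_right


def pair_frames_with_controls(frame_times, control_log):
    """Label each frame with the latest control command at or before it.

    frame_times: list of frame timestamps in seconds.
    control_log: list of (timestamp, left, right) tuples, sorted by timestamp.
    Returns a list of (frame_time, left, right) training labels.
    """
    control_times = [t for t, _, _ in control_log]
    pairs = []
    for frame_time in frame_times:
        # Index of the last command issued at or before this frame.
        idx = bisect_right(control_times, frame_time) - 1
        if idx < 0:
            continue  # no command recorded yet, so skip this frame
        _, left, right = control_log[idx]
        pairs.append((frame_time, left, right))
    return pairs
```

The resulting (frame, control) pairs are the kind of labeled data an imitation-learning setup would consume, with the image as the input and the control values as the target.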

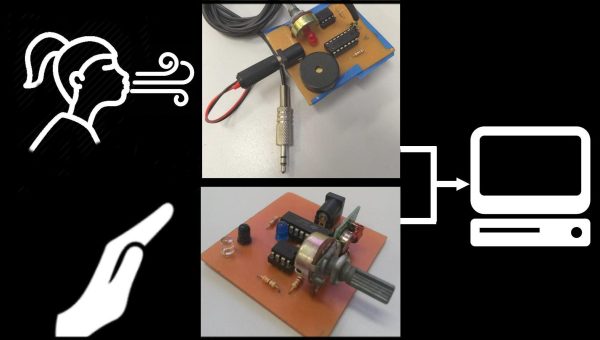

This looks like an excellent way to get a taste of autonomous robots on a small budget, while still being a viable base for more demanding applications. We’ve seen only a few smartphone-based robots, like DriveMyPhone and SmartiPresense, and those lack AI capabilities because they are intended for telepresence applications. We’ve always wondered why we don’t see more projects built around cellphones, so we welcome the example.
