Retiring to the garden for a few reflective puffs on the meerschaum and a quick shufti through the Racing Post, and the peace of the afternoon is shattered by the buzz of a drone in the old airspace, what! What’s a chap to do: let loose with both barrels of the finest birdshot from the trusty twelve-bore? Or build a missile battery cunningly concealed in a dovecote? The latter is what [secretbatcave] did to protect his little slice of England, and while we’re not sure of its efficacy we’re still pretty taken with it. After all, who wouldn’t want a useless garden accoutrement that conceals a fearsome 21st-century defence system?

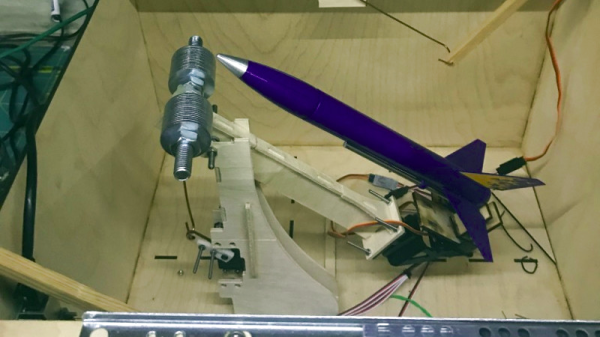

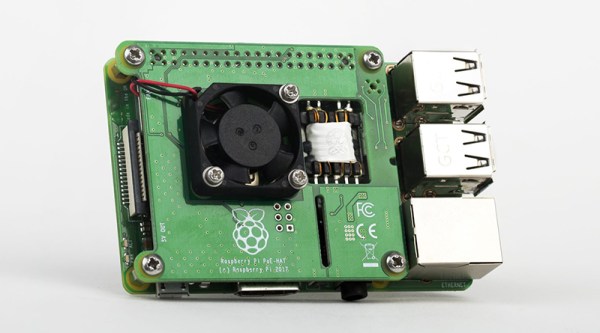

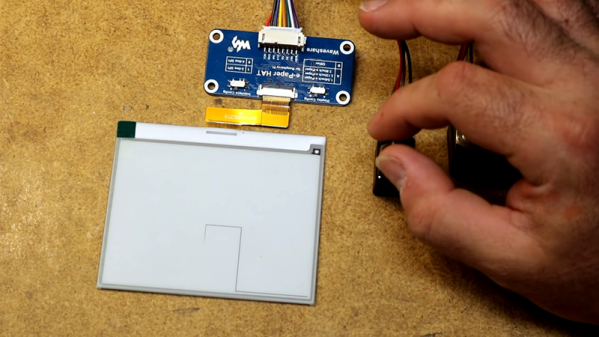

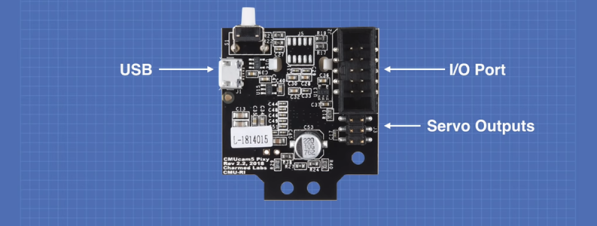

The basic shell of the dovecote is made from laser-cut ply, in the shape of an innocuous miniature house. The roof is in two sliding sections which glide apart upon servo-controlled drawer runners, and concealed within is the rocket launcher itself on a counterweighted arm to lift it through the opening. The (toy) rocket itself is aimed with a camera pan/tilt mechanism, and the whole is under the control of a Raspberry Pi.
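For the curious, the order of operations described above (roof apart, arm up, then aim) might be sketched roughly as follows. This is only an illustration and not [secretbatcave]'s code: the `DovecoteLauncher` class, its method names, and the pan/tilt limits are all assumptions, and the sketch records each step in a log rather than driving any real servos.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DovecoteLauncher:
    """Hypothetical model of the deploy sequence; no hardware is touched."""
    roof_open: bool = False
    arm_raised: bool = False
    pan_deg: float = 0.0
    tilt_deg: float = 0.0
    log: List[str] = field(default_factory=list)

    def open_roof(self) -> None:
        # On the real build the two roof halves slide apart on drawer runners.
        self.roof_open = True
        self.log.append("roof open")

    def raise_arm(self) -> None:
        # The arm must not lift the launcher into a closed roof.
        if not self.roof_open:
            raise RuntimeError("roof must be open before the arm is raised")
        self.arm_raised = True
        self.log.append("arm raised")

    def aim(self, pan_deg: float, tilt_deg: float) -> None:
        if not self.arm_raised:
            raise RuntimeError("arm must be raised before aiming")
        # Clamp to assumed mechanical limits (illustrative values only).
        self.pan_deg = max(-90.0, min(90.0, pan_deg))
        self.tilt_deg = max(0.0, min(90.0, tilt_deg))
        self.log.append(f"aimed pan={self.pan_deg:.0f} tilt={self.tilt_deg:.0f}")


def deploy(launcher: DovecoteLauncher, pan_deg: float, tilt_deg: float) -> None:
    """Run the steps in the only order that is mechanically safe."""
    launcher.open_roof()
    launcher.raise_arm()
    launcher.aim(pan_deg, tilt_deg)
```

Calling `deploy(DovecoteLauncher(), 30, 45)` walks through the three steps in order, while calling `raise_arm()` on a fresh launcher raises an error, which is the sort of interlock one would presumably want before swinging a counterweighted arm into a closed roof.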

It’s understood that this is a rather tongue-in-cheek project, and the chances of any multirotors falling out of the sky are somewhat remote. But it also serves to shine a bit of light back on a theme Hackaday have touched upon in previous years, that of the sometimes uneasy relationship between drone and public.