Have you ever tired of playing with your latest robot invention and wished you could just eat it? Well, that’s exactly what a team of researchers is investigating. There is a fully funded research initiative (not an April Fools’ joke, as far as we know) delving into the possibilities of edible electronics and mechanical systems used in robotics. The team, led by EPFL in Switzerland, combines food process engineering, printed and molecular electronics, and soft robotics to create fully functional and practical robots that can be consumed at the end of their lifespan. While the concept of food-based robots may seem unusual, the potential applications in medicine and reducing waste during food delivery are significant driving factors behind this idea.

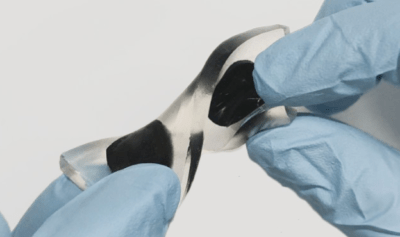

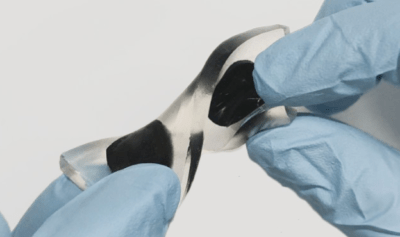

The Robofood project (some articles are paywalled!) has clearly made inroads into many of the components needed. Take batteries, for example. Normally, ingesting a battery would result in a trip to the emergency room, but an edible battery can be made from an anode of riboflavin (found in almonds and egg whites) and a cathode of quercetin, as we covered a while ago. The team proposed another battery using activated charcoal (AC) electrodes on a gelatin substrate. Applying a voltage to the structure splits water into its constituent oxygen and hydrogen. These gases adsorb onto the AC surface and later recombine into water, providing a usable one-volt output for ten minutes with a similar charge time. This simple structure is reusable and, once expired, dissolves harmlessly in (simulated) gastric fluid within twenty minutes. Such a device could potentially power a GI-tract exploratory robot or other sensor devices.

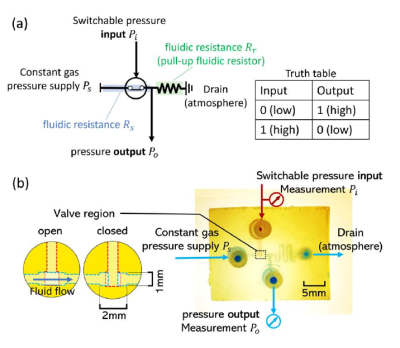

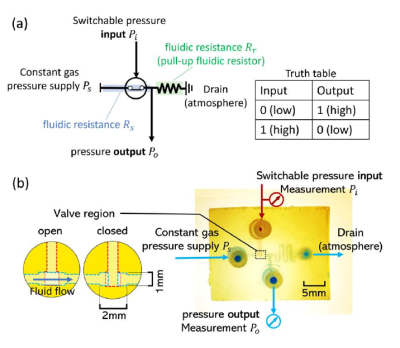

But what use is power without control, as a certain car tyre advert once put it? Microfluidic control circuits can be built from a stack of edible materials, primarily oleogels made by mixing ethyl cellulose with an organic oil such as olive oil. A microfluidic NOT gate combines a pressure-controlled switch with a fluid resistor acting as the ‘pull-up’. The switch has a horizontal flow channel with a blockage that is cleared when a control pressure is applied. As every electronic engineer knows, once you have a controlled switch and a resistor, you can build NOT gates, then NOR or NAND gates, and from those all the other logic functions, flip-flops, and memories. These control components are very slow but, importantly, edible.
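To make the "switch plus resistor is enough" argument concrete, here is a minimal Python sketch that treats each gate as pure Boolean logic. It assumes an idealised switch that conducts (drains the output node low) whenever its control input is high, and a pull-up that holds the output high otherwise. Timing, leakage, and the real pressure dynamics of the oleogel devices are ignored, and all function names are hypothetical illustrations, not anything from the Robofood work. One switch gives NOT, two in parallel give NOR, two in series give NAND, and cross-coupling two NOR gates gives an SR latch, the simplest one-bit memory.

```python
"""Boolean sketch of switch-plus-pull-up logic (assumed ideal components)."""
from itertools import product


def switch_conducts(control: bool) -> bool:
    # Hypothetical ideal switch: control pressure present -> channel open.
    return control


def pull_up_output(path_to_low_open: bool) -> bool:
    # The pull-up keeps the output high unless a conducting path drains it.
    return not path_to_low_open


def not_gate(a: bool) -> bool:
    # A single switch between the output node and the low side.
    return pull_up_output(switch_conducts(a))


def nor_gate(a: bool, b: bool) -> bool:
    # Two switches in parallel: either one can pull the output low.
    return pull_up_output(switch_conducts(a) or switch_conducts(b))


def nand_gate(a: bool, b: bool) -> bool:
    # Two switches in series: both must conduct to pull the output low.
    return pull_up_output(switch_conducts(a) and switch_conducts(b))


def or_gate(a: bool, b: bool) -> bool:
    # OR is just an inverted NOR.
    return not_gate(nor_gate(a, b))


def and_gate(a: bool, b: bool) -> bool:
    # De Morgan: a AND b == NOR(NOT a, NOT b).
    return nor_gate(not_gate(a), not_gate(b))


def sr_latch(s: bool, r: bool, q: bool) -> bool:
    """Cross-coupled NOR latch: update the loop until it stops changing.

    s=True sets q, r=True resets q, s=r=False holds the previous q.
    s=r=True is the usual forbidden input and should be avoided.
    """
    q_bar = not q
    for _ in range(4):  # a two-gate loop settles within a few passes
        new_q = nor_gate(r, q_bar)
        new_q_bar = nor_gate(s, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q


if __name__ == "__main__":
    # Print a truth table for the two primitive two-input gates.
    print("a b NOR NAND")
    for a, b in product([False, True], repeat=2):
        print(int(a), int(b), int(nor_gate(a, b)), int(nand_gate(a, b)))
```

The latch is what turns combinational logic into memory: once set, it holds its state with both inputs released. In a real fluidic circuit the "loop" is physical flow and pressure rather than repeated function calls, which is one reason such circuits are slow.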

Edible electronics don’t feature here often, but we did dig up this simple edible chocolate bunny that screams when you bite it. Who wouldn’t want one of those?