This fantastically huge housing was built by [Ed Sauer]. He TIG-welded the body from 6061 aluminum and machined the port mount out of 7075 aluminum. The lens port, along with a few manual controls, is a commercial unit from a housing manufacturer. He wrote up the build in this PDF.


Hackit: The Bronco Table

While attending LA SIGGRAPH Maker Night, we got to talk to [Brett Doar] about his Bronco Table. The table is meant to make life more difficult by bucking off anything that’s set on top of it. Right now, it uses a tiny piezo mic to listen for the impact and then drives three leg motors in a random pattern. He envisions later generations either running away or following you intently when something is set on them.
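To make the current behavior concrete, here is a minimal sketch of the listen-then-buck loop in Python. It is not [Brett]'s code: the normalized mic samples, the `THRESHOLD` value, and both function names are assumptions made up for illustration.

```python
import random

# Assumed normalized mic level (0.0 to 1.0) that counts as an impact.
THRESHOLD = 0.5


def detect_impact(samples, threshold=THRESHOLD):
    """Return True if any mic sample's magnitude exceeds the threshold."""
    return any(abs(s) > threshold for s in samples)


def buck_pattern(steps, legs=3, rng=random):
    """Return a random sequence of leg indices to drive, one per step."""
    return [rng.randrange(legs) for _ in range(steps)]


if __name__ == "__main__":
    mic_window = [0.02, -0.05, 0.81, 0.10]  # hypothetical readings
    if detect_impact(mic_window):
        print("bucking legs in order:", buck_pattern(6))
```

Anything set down gently stays under the threshold and never triggers the pattern.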

The main problem with the current design is that you have to hit the table hard enough to make a noise the mic can pick up. The ideal solution would detect anything, no matter what the material or how forcefully it was set down. How would you detect objects being placed on the surface (the table doesn’t have to be wood)?

Hacker Conference Videos

Almost every security conference we’ve attended in the last year has uploaded videos from their speaker tracks. Explore the archives below, and you’re bound to find an interesting talk.

- Defcon 15, Las Vegas, NV

- ToorCon 9, San Diego, CA

- 24C3, Berlin, Germany

- ShmooCon 2008, Washington D.C.

- Notacon 5, Cleveland, OH

- LayerOne 2008, Pasadena, CA

[thanks, Dan]

[photo: ario_j]

Water Runner Robot

Researchers at Carnegie Mellon University’s NanoRobotics Lab have developed a robot that is capable of running on the surface of a pool of water. Like their wall-climbing Waalbot, the Water Runner was inspired by the abilities of a lizard, in this case, the basilisk. The team studied the motions of the basilisk and found morphological features and aspects of the lizard’s stride that make running on water possible. Both the lizard and the robot run on water by slapping the surface to create an air cavity like the one above, then pushing against the water for the necessary lift and thrust. Several prototypes have been built, and there are variants with 2 or 4 legs and with on-board and off-board power sources. You can see a slow motion video of the robot’s movement below.

The purpose of their research is to create robots that can traverse any surface on Earth and lose less energy to viscous drag than a swimming robot would. Though another of the team’s goals is to further legged robot research, the Water Runner is not without potential practical applications. It could be used to collect water samples, monitor waterways with a camera, or even deliver small packages. Download the full abstract in PDF format for more information.

Singing Tesla Coils

The video above is ArcAttack! playing the classic “Popcorn” through their signature Tesla coils. Solid-state Tesla coils (SSTC) can generate sound using what [Ed Ward] calls pulse repetition frequency (PRF) modulation. The heat generated by the plasma flame causes rapid expansion of the surrounding air and a resulting sound wave. An SSTC can be operated at just about any frequency, so you just need to build a controller to handle it. The task is made more difficult because very few electronics are stable in such an intense EM field. [Ed] constructed a small Faraday cage for his microcontroller and used optical interconnects to deliver the signals to the Tesla coils.
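As a rough illustration of the PRF idea, the Python sketch below turns a musical note into a schedule of spark pulses fired at that note's frequency. This is not [Ed]'s controller code: the MIDI note numbering, the function names, and the 50 microsecond pulse width are all assumptions chosen for the example.

```python
def note_to_prf(midi_note):
    """Convert a MIDI note number to a frequency in Hz (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def pulse_schedule(midi_note, duration_s, pulse_width_s=50e-6):
    """Return (start_time_s, width_s) pairs for each burst in one note.

    Bursts repeat at the note's frequency, so the air is heated and
    released that many times per second. The pulse width is an assumed
    fixed on-time, not a value taken from the original build.
    """
    prf = note_to_prf(midi_note)
    period = 1.0 / prf
    count = int(duration_s * prf)
    return [(i * period, pulse_width_s) for i in range(count)]


if __name__ == "__main__":
    # Print the first few bursts of a 10 ms A4 note.
    for start, width in pulse_schedule(69, 0.01):
        print(f"fire at {start * 1e3:.3f} ms for {width * 1e6:.0f} us")
```

In a real controller these timings would go out over the optical link rather than being printed.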

[via Laughing Squid]

Stabilized Video Collages

This is some beautiful work. The clip features multiple video streams stabilized and then assembled into a whole. First, [ibftp] used the “Stabilize” feature in Motion 3 (part of Apple’s Final Cut Studio 2) to remove the camera shake from the clips. Then he was able to blend the videos with “fusion” set to “multiplication”. If you’ve got access to the tools, this shouldn’t be too hard to do yourself. We’re certain someone at SIGGRAPH is already attempting to do the same thing live. If you want to see image stabilization really making a difference, have a look at the stabilized version of the Zapruder film embedded below.

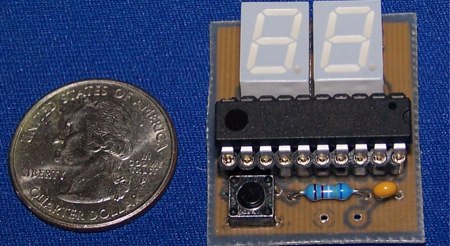
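For anyone without Motion, the blending step in the collage can be approximated in a few lines. The Python sketch below assumes that “multiplication” means the standard multiply blend mode, where each pair of 8-bit pixel values is multiplied and rescaled; the post doesn't give the exact formula Motion uses. Frames are represented as nested lists of grayscale values purely for illustration.

```python
def multiply_blend(a, b):
    """Multiply two 8-bit pixel values and rescale the result to 0-255."""
    return (a * b) // 255


def blend_frames(frame_a, frame_b):
    """Multiply-blend two equally sized grayscale frames (lists of rows)."""
    return [
        [multiply_blend(pa, pb) for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


if __name__ == "__main__":
    a = [[255, 128], [0, 200]]
    b = [[128, 128], [200, 255]]
    print(blend_frames(a, b))
```

White leaves the other layer unchanged and black always wins, so overlapping clips darken each other where they meet.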

Perceptual Chronograph

All praise to [Limor] for uncovering this incredibly odd project. [magician]’s perceptual chronograph is designed to test whether time “slows down” in stressful situations. The device flashes a random number on the display so quickly that it is normally impossible to perceive what is being displayed. If you can read the number while under stress, it means that your ability to perceive time has increased. It’s hard to believe, but check out the video embedded after the break that investigates the phenomenon. We can’t help but wonder how [magician] personally plans on testing this.