Bad news, Martian helicopter fans: Ingenuity, the autonomous helicopter that Perseverance birthed onto the Martian surface a few days ago, will not be making the first powered, controlled flight on another planet today as planned. We’re working on a full story so we’ll leave the gory details for that, but the short version is that while the helicopter was undergoing a full-speed rotor test, a watchdog timer monitoring the transition between pre-flight and flight modes in the controller tripped. The Ingenuity operations team is going over the full telemetry and will reschedule the rotor test; as a result, the first flight will occur no earlier than Wednesday, April 14. We’ll be sure to keep you posted.
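
For anyone who hasn't met a watchdog timer before, here's a minimal sketch of the general idea in Python. To be clear, this is not Ingenuity's flight software, which we haven't seen; the `Watchdog` class, the `enter_flight_mode` function, and the mode names are all hypothetical and only illustrate how a timer can guard a mode transition and fall back to a safe state if the transition takes too long.

```python
import time
from typing import Callable


class Watchdog:
    """Hypothetical watchdog: expires if not disarmed before its deadline."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.deadline = None  # None means the watchdog is not armed

    def arm(self) -> None:
        self.deadline = self.clock() + self.timeout_s

    def disarm(self) -> None:
        self.deadline = None

    def expired(self) -> bool:
        return self.deadline is not None and self.clock() > self.deadline


def enter_flight_mode(watchdog: Watchdog,
                      transition_done: Callable[[], bool],
                      poll_s: float = 0.01) -> str:
    """Try to move from pre-flight to flight mode under watchdog supervision."""
    watchdog.arm()
    while not transition_done():
        if watchdog.expired():
            # The transition took too long: stay in the safe mode.
            return "PRE_FLIGHT"
        time.sleep(poll_s)
    watchdog.disarm()
    return "FLIGHT"


if __name__ == "__main__":
    # A transition that completes immediately ends up in flight mode.
    print(enter_flight_mode(Watchdog(timeout_s=1.0), lambda: True))
```

The point of the pattern is that a stalled transition gets caught by the timer rather than leaving the controller stuck halfway between modes.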

Anyone who has ever been near a refinery or even a sewage treatment plant will have no doubt spotted flares of waste gas being burned off. It can be pretty spectacular, like an Olympic torch, but it also always struck us as spectacularly wasteful. Aside from the emissions, it always seemed like you could at least try to harness some of the energy in the waste gases. But apparently the numbers just never work out in favor of tapping this source of energy, or at least that was the case until the proper buzzword concentration in the effluent was reached. With the soaring value of Bitcoin, and the fact that the network now consumes something like 80 TWh a year, building portable mining rigs into shipping containers that can be plugged into gas flaring stacks at refineries is now being looked at seriously. While we like the idea of not wasting a resource, we have our doubts about this; if it’s not profitable to tap into the waste gas stream to produce electricity now, what does tapping it to directly mine Bitcoin really add to the equation?

What would you do if you discovered that your new clothes dryer was responsible for a gigabyte or more of traffic on your internet connection every day? We suppose in this IoT world, such things are to be expected, but a gig a day seems overly chatty for a dryer. The user who reported this over on the r/smarthome subreddit blocked the dryer at the router, which was probably about the only realistic option short of taking a Dremel to the WiFi section of the dryer’s control board. The owner is in contact with manufacturer LG to see if this perhaps represents an error condition; we’d actually love to see a Wireshark dump of the data to see what the garrulous appliance is on about.
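
To put a gig a day in perspective, here's a quick back-of-the-envelope conversion to an average data rate. It assumes a decimal gigabyte (10^9 bytes) and traffic spread evenly over the day, neither of which the original report specifies; the function name is just ours for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s


def average_bitrate_kbps(bytes_per_day: float) -> float:
    """Average rate in kbit/s for a given daily byte count."""
    bits_per_day = bytes_per_day * 8
    return bits_per_day / SECONDS_PER_DAY / 1000


# Assumption: 1 GB = 1e9 bytes, spread evenly across 24 hours.
print(f"{average_bitrate_kbps(1e9):.1f} kbit/s")  # prints "92.6 kbit/s"
```

That works out to a steady trickle of roughly 93 kbit/s around the clock, which is a lot of talking for an appliance that mostly tumbles socks.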

As often happens in our wanderings of the interwebz to find the very freshest of hacks for you, we fell down yet another rabbit hole that we thought we’d share. It’s not exactly a secret that there’s a large number of “Star Trek” fans in this community, and that for some of us, the way the various manifestations of the series brought the science and technology of space travel to life kick-started our hardware hacking lives. So when we found this article about a company building replica Tricorders from the original series, we followed along with great interest. What we found fascinating was not so much the potential to buy an exact replica of the TOS Tricorder — although that’s pretty cool — but the deep dive into how they captured data from one of the few remaining screen-used props, as well as how the Tricorder came to be.

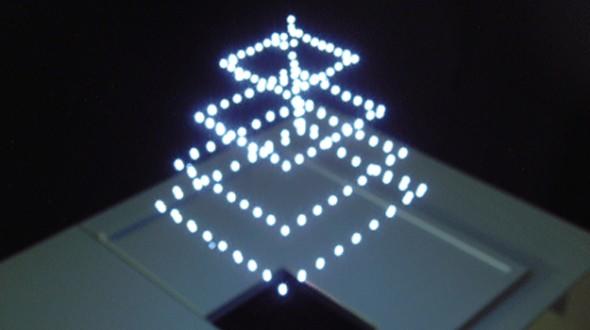

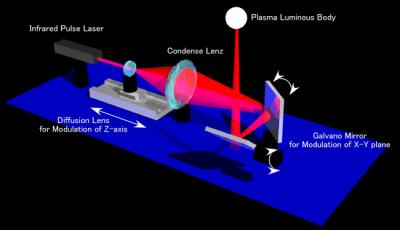

And finally, what do you do if you have 3,281 drones lying around? Obviously, you create a light show to advertise the launch of a luxury car brand in China. At least that’s what Genesis, the luxury brand of carmaker Hyundai, did last week. The display, which looks like it consisted mostly of the brand’s logo whizzing about over a cityscape, is pretty impressive, and apparently set the world record for such things, beating out the previous attempt of 3,051 UAVs. Of course, all the coverage we can find on these displays concentrates on the eye-candy and the blaring horns of the soundtrack and gives short shrift to the technical aspects, which would really be interesting to dive into. How are these drones networked? How do they deal with latency? Are they just creating a volumetric display with the drones and turning lights on and off, or are they actually moving drones around to animate the displays? If anyone knows how these things work, we’d love to learn more, and perhaps even do a feature article.