Microsoft’s Kinect may not have found success as a gaming peripheral, but Microsoft recognized that a depth sensor is too cool to leave for dead and kept developing it even after the Xbox peripheral was discontinued. This week the latest iteration emerged, and we can get it in the form of the Azure Kinect DK. This is a developer’s kit focused on exploring new applications for the technology, not a gaming peripheral we had to hack before we could use it in our own projects.

Packaged into a peripheral that plugs into a PC via USB-C, it is more than the core depth sensor module announced last year but less than a full consumer product. Browsing its 10-page specification (PDF), which includes comparisons to the second-generation Kinect sensor bar, we can see how this technology has evolved. Physical size and weight have dropped, as has power consumption. Auxiliary capabilities have improved: there is an expanded microphone array, an IMU with a gyroscope in addition to the accelerometer, and an RGB camera upgraded to 4K resolution.

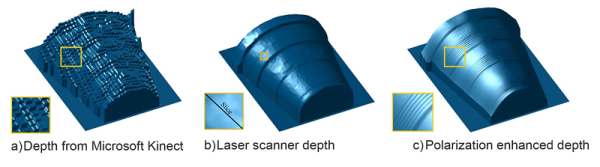

But the star of the show is a new continuous-wave time-of-flight depth sensor, presented at IEEE ISSCC 2018. (The full text requires IEEE membership, but a digest form is available via ResearchGate.) Of its many advancements, we expect its field of view to have the biggest impact. The default of 75 x 65 degrees already beats its predecessors (64 x 45 for the first-generation Kinect, 70 x 60 for the second), and there is an option to trade resolution for coverage by switching to a 120 x 120 degree wide-angle mode. That is significantly wider than other depth cameras such as Intel’s RealSense D400 series or Occipital’s Structure.
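To put those angles in physical terms, here is a minimal Python sketch. It converts each horizontal x vertical field of view into the width and height of the area visible at a given distance, using the ideal pinhole relation width = 2 · d · tan(FOV / 2). The `footprint` helper and the 1 m distance are our own illustrative choices. The pinhole model is also an assumption: real wide-angle optics like the 120-degree mode add distortion, so treat the results as rough comparisons rather than specifications.

```python
import math


def footprint(h_fov_deg, v_fov_deg, distance_m):
    """Return (width, height) in metres of the area seen at distance_m.

    Assumes an ideal rectilinear pinhole camera (a simplification; real
    wide-angle lenses distort), so extent = 2 * d * tan(FOV / 2).
    """
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg) / 2)
    return width, height


# Horizontal x vertical depth field of view, in degrees, as quoted in the article.
FOVS = {
    "Kinect v1": (64, 45),
    "Kinect v2": (70, 60),
    "Azure Kinect DK (default)": (75, 65),
    "Azure Kinect DK (wide)": (120, 120),
}

for name, (h_fov, v_fov) in FOVS.items():
    w, h = footprint(h_fov, v_fov, 1.0)
    print(f"{name:28s} {w:5.2f} m x {h:5.2f} m at 1 m")
```

At 1 m this idealized model gives roughly 1.53 m x 1.27 m for the default mode, compared with about 1.40 m x 1.15 m for the second-generation Kinect. The wide mode covers about 3.46 m square, which is around six times the area of the default mode.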

Another interesting feature is built-in synchronization. Many projects using multiple Kinect sensors ran into problems because the sensors interfered with each other. People hacked around the problem, of course, but now they don’t have to: commodity 3.5 mm jacks allow multiple Azure Kinect DK units to be daisy-chained together so they play nicely and take turns.

From its name, we were worried this product would require Microsoft’s Azure cloud service in some way and be crippled without it. Based on the information released so far, it appears developers have access to all the same data streams as with previous sensors. The Azure tie-in takes the form of optional SDKs that make it easier to do things like upload data to Azure’s cloud-based recognition services for processing.

And finally, the Azure Kinect DK’s price tag of $399 is significantly higher than that of a Kinect game peripheral, but this is a low-volume product for developers. High-volume consumer products built on this technology may cost less, but that remains to be seen. In the meantime, there are alternative tools for solving similar problems. For example, if you are building your own AR headset, you might use Intel’s latest RealSense camera for vision-based inside-out motion tracking.