When you think of a robot getting around, you probably picture something on wheels or tracks. Maybe you imagine a bipedal walking robot, more common in science fiction than in our daily lives. In any case, researchers went way outside the norm when they built an avocado-shaped robot for exploring the rainforest.

The robot is the work of doctoral students at ETH Zurich, working with the Swiss Federal Institute for Forest, Snow and Landscape Research. The design is optimized for navigating the rainforest canopy, where a lot of the action is. Traditional methods of locomotion are largely useless up high in the trees, so another method was needed.

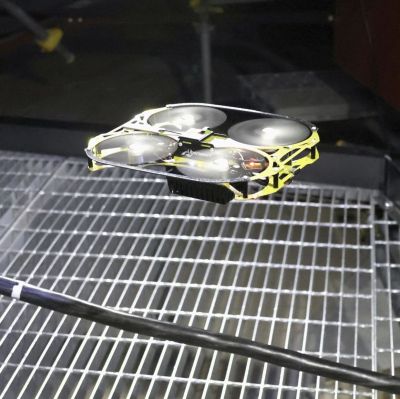

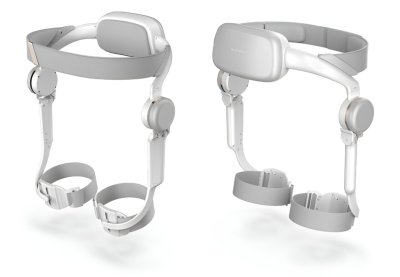

The avocado robot instead hangs from a cable affixed to a high branch on a tree, or potentially even to a drone flying above. The robot then uses a winch to move up and down as needed. A pair of ducted fans built into the body provide the thrust necessary to rotate and pivot around branches or other obstacles as it descends. It also packs a camera onboard to help it navigate the environment autonomously.

It’s an oddball design, but it’s easy to see how it makes sense for navigating the difficult environment of a dense forest canopy. Sometimes, intractable problems require creative solutions.