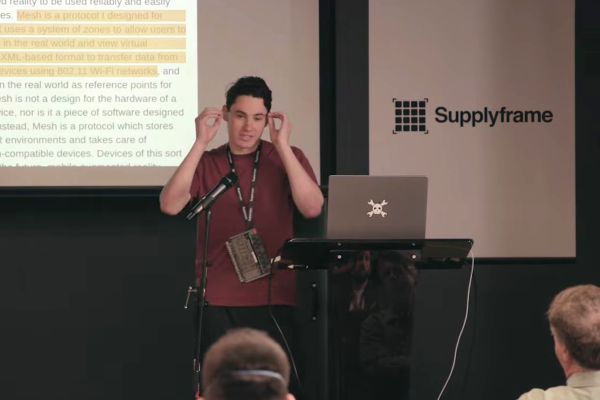

Unsatisfied with commercial VR headset options, [dragonskyrunner] did what any enterprising hacker would: gathered parts over time and ultimately made their own. Behold the Hades Widebody (HWD), a DIY PC VR headset that aims for a wide field of view and even manages to integrate some face and eye tracking.

[dragonskyrunner] is, and we quote, “NEVER building one of these again.” The sentiment will be familiar to anyone who has spent a lot of time and effort creating something special: it does the job it was created for, but it also has limitations and was a lot of work. If one were to do it all over again, there would be a host of improvements and changes to consider. But one won’t be doing it all over again any time soon, because it’s done now.

The good news is that [dragonskyrunner] made an effort to document things, so there is at least a parts list and enough details for any suitably motivated hacker to replicate the work and perhaps even put their own spin on it.

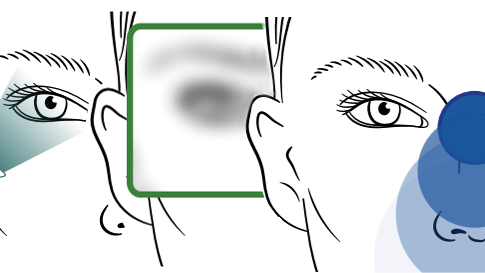

The Hades Widebody has a dual-lens arrangement and wide displays that aim to provide a wider field of view than most setups allow. There’s a main lens in front of each of the user’s eyes and a cut Fresnel lens providing a sort of extension to the side. [dragonskyrunner] says that while the transition between the lens elements is certainly not seamless, it does a better job than an Ambilight at giving a sense of visuals extending into the wearer’s peripheral vision.

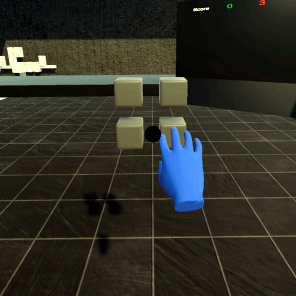

The DIY spirit of making a piece of hardware to suit one’s own needs is exactly the sort of thing that would fit into our 2023 Cyberdeck contest, and while a headset by itself isn’t quite enough to qualify (devices must have some form of usable input and output), it just might get those creative juices flowing.