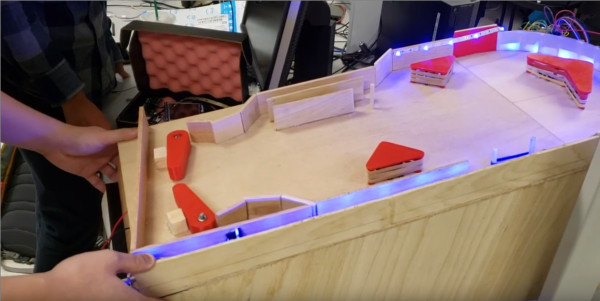

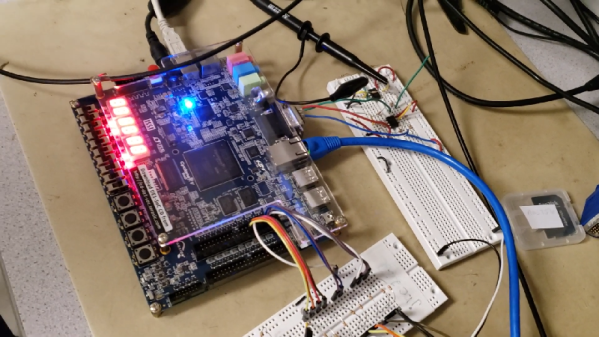

The School of Electrical and Computer Engineering at Cornell University has made [Bruce Land]’s lectures and materials for the Designing with Microcontrollers (ECE 4760) course available for many years. But recently [Bruce], who semi-retired in 2020, and the new lecturer [Hunter Adams] have reworked the course and labs to use the Raspberry Pi Pico. You can see the introductory lecture of the reworked class below.

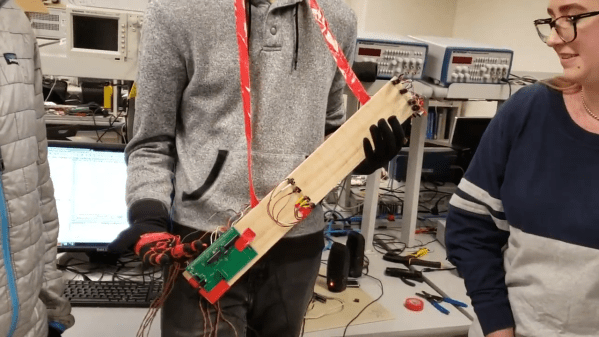

Not only are the videos available online, but the class’s GitHub repository hosts extensive and well-documented examples, lecture notes, and helpful links. If you want to get started with RP2040 programming, or just want to dig deeper into a particular technique, this is a great place to start.

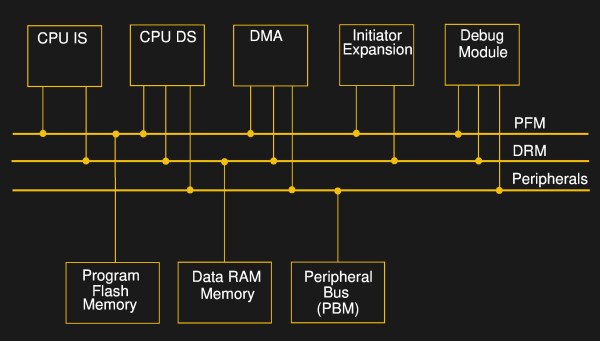

From what we can tell, this is the third overhaul of the class this century. Back in 2012 the course was using the ATmega1284 AVR microcontroller, and in 2015 it switched to the Microstick II using a Microchip PIC32MX. Not only were these lecture series available free online, but each has been maintained as a reference after being replaced. One common thread with all of these platforms is their low cost of entry. Assuming you already have a computer, setting up the hardware and software development environment for these modules costs less than the price of a pizza dinner, a fact no doubt appreciated by the ECE department’s budget director.

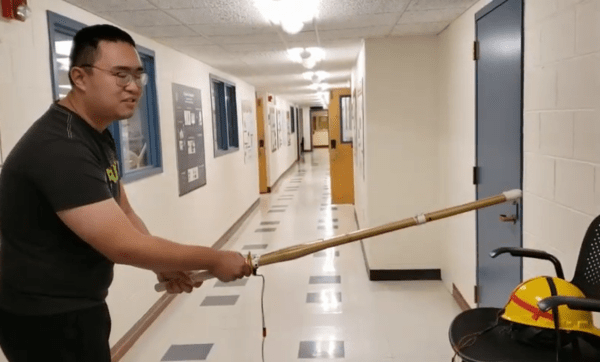

We’ve covered this course before, back in 2015 when it moved to the PIC32. Another free online course on embedded system design comes from [Prof James Conrad] at UNC Charlotte, based on the Renesas RX63N microcontroller. The UNC Charlotte team drove development of the autonomous vehicle project we covered back in 2009. If you know of other online embedded systems classes, let us know in the comments below.
