If you’re a retro Nintendo fan you can of course carry a NES and a Game Boy around with you, but the former isn’t very portable. Never fear though, because here’s [Chad Burrow], who’s created a neat handheld console that emulates both.

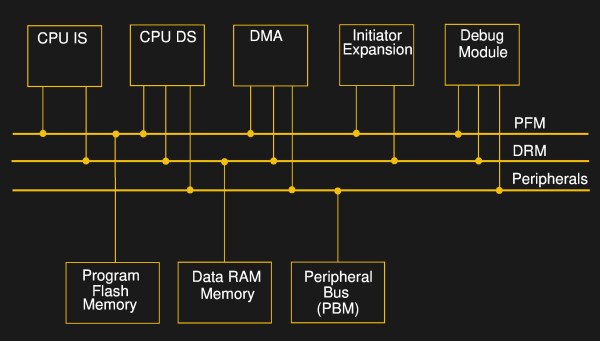

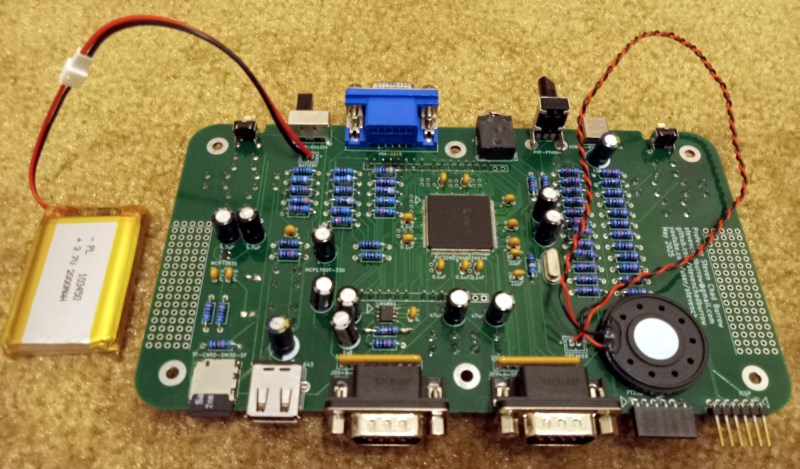

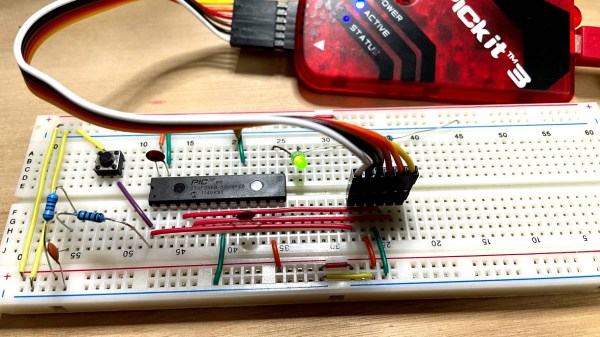

It’s called the Acolyte Handheld, and its main processor is a PIC32, a slightly unusual choice for these parts. Unexpectedly, it can use Sega Genesis controllers, but it also has the usual buttons on board for portable use. It can drive either its own LCD or an external VGA monitor, and in a particularly nice touch, it switches between the two seamlessly. The NES emulator is his own work, while Game Boy support comes courtesy of Peanut-GB.

We like the design of the case, and particularly that of the buttons. Could it have been made smaller by forgoing some of the through-hole parts in favour of SMD ones? Quite likely, but although it’s chunky, it’s certainly not outsized.

Portable Nintendo-inspired hardware is popular around here, as you can see with this previous handheld NES.

