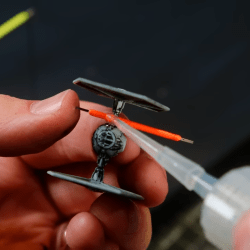

[Boylei] shows that those little LED filament strips make great freeze-frame blaster shots in a space battle diorama. That’s neat and all, but what we really want to highlight is a simple tip [Boylei] shares about working with these filament strips: how to glue them.

The silicone (or silicone-like) coating on these LED filament strips means glue simply doesn’t stick. To work around this, [Boylei] puts a piece of clear heat shrink around the filament, and glues to that instead. If you want a visual, you can see him demonstrate at the 6:11 mark in the video. It’s a simple and effective tip that’s certainly worth keeping in mind, especially since filament strips invite so many project ideas.

When LED filament strips first hit the hobbyist market they were attractive, but required high operating voltages. Nowadays they are not only cheaper, but work at battery-level voltages and come in a variety of colors.

These filaments have only gotten easier to work with over the years. Just remember to be gentle about bending them, and as [Boylei] demonstrates, a little piece of clear shrink tubing is all it takes to provide a versatile glue anchor. So if you had a project idea involving them that didn’t quite work out in the past, maybe it’s time to give it another go?
