It’s not too often that you see handkerchiefs around anymore. Today, they’re largely viewed as unsanitary and well… just plain gross. You’ll be quite disappointed to learn that they have absolutely nothing to do with this article other than a couple of similarities they share when compared to your neocortex. If you were to pull the neocortex from your brain and stretch it out on a table, you most likely wouldn’t be able to see that not only is it roughly the size of a large handkerchief; it also shares the same thickness.

The neocortex, or cortex for short, is Latin for “new rind”, or “new bark”, and represents the most recent evolutionary change to the mammalian brain. It envelops the “old brain” and has several ridges and valleys (called gyri and sulci, respectively) that formed from evolution’s mostly successful attempt to stuff as much cortex as possible into our skulls. It has taken on the duties of processing sensory inputs and storing memories, and rightfully so. Draw a one millimeter square on your handkerchief cortex, and it would contain around 100,000 neurons. It has been estimated that the typical human cortex contains some 30 billion total neurons. If we make the conservative guess that each neuron has 1,000 synapses, that would put the total synaptic connections in your cortex at 30 trillion, a number so large that it is literally beyond our ability to comprehend. And apparently enough to store all the memories of a lifetime.
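As a quick sanity check on those figures, here is a small Python sketch that multiplies them out. The three constants are the estimates quoted above; the "equivalent square" is only an illustration and assumes the neuron density is uniform across the whole sheet.

```python
# Estimates quoted in the article (not measured values)
NEURONS_TOTAL = 30_000_000_000   # ~30 billion neurons in the cortex
NEURONS_PER_MM2 = 100_000        # neurons under one square millimeter
SYNAPSES_PER_NEURON = 1_000      # the article's conservative guess

# Total synaptic connections
total_synapses = NEURONS_TOTAL * SYNAPSES_PER_NEURON

# Implied sheet area, assuming uniform density (an assumption for illustration)
area_mm2 = NEURONS_TOTAL / NEURONS_PER_MM2
side_cm = (area_mm2 ** 0.5) / 10  # side of an equivalent square, in cm

print(f"synapses: {total_synapses:.1e}")
print(f"area: {area_mm2 / 100:.0f} cm^2, roughly {side_cm:.0f} cm on a side")
```

Under these assumptions the numbers hang together: 30 billion neurons at 100,000 per square millimeter works out to a square a little over half a meter on a side, which is in the range of a large handkerchief.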

In the theater of your mind, imagine a stretched-out handkerchief lying in front of you. It is… you. It contains everything about you. Every memory that you have is in there. Your best friend’s voice, the smell of your favorite food, the song you heard on the radio this morning, that feeling you get when your kids tell you they love you: it’s all in there. Your cortex, that little insignificant-looking handkerchief in front of you, is reading this article at this very moment.

What an amazing machine; a machine that is made possible with a special type of cell – a cell we call a neuron. In this article, we’re going to explore how a neuron works from an electrical vantage point. That is, how electrical signals move from neuron to neuron and create who we are.

A Basic Neuron

Despite the amazing feats a human brain performs, the neuron is comparatively simple when observed by itself. Neurons are living cells, however, and share many of the complexities of other cells, such as a nucleus, mitochondria, ribosomes, and so on. Each one of these cellular parts could be the subject of an entire book. The neuron’s simplicity arises from the basic job it does: outputting a voltage when the sum of its inputs reaches a certain threshold, which is roughly -55 mV.

Using the image above, let’s examine the three major components of a neuron.

Soma

The soma is the cell body and contains the nucleus and other components of a typical cell. There are different types of neurons whose differing characteristics come from the soma. Its size can range from 4 to over 100 micrometers.

Dendrites

Dendrites protrude from the soma and act as the inputs of the neuron. A typical neuron’s dendrites branch into thousands of input sites, each connecting to an axon of another neuron. The connection is called a synapse, but it is not a physical one. There is a gap between the ends of the dendrite and axon called the synaptic cleft. Information is relayed across the gap via neurotransmitters, which are chemicals such as dopamine and serotonin.

Axon

Each neuron has only a single axon that extends from the soma and acts much like an electrical wire. Each axon ends in terminal fibers, forming synapses with as many as 1,000 other neurons. Axons vary greatly in length. The longest axons in the human body run from the bottom of the foot to the spinal cord, a distance of a meter or so.

The basic electrical operation of a neuron is to output a voltage spike from its axon when the sum of its input voltages (via its dendrites) crosses a specific threshold. And since axons are connected to dendrites of other neurons, you end up with this vastly complicated neural network.
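That sum-and-threshold behavior can be sketched in a few lines of Python. This is a deliberately crude model, not how a real neuron integrates its inputs: it ignores timing, decay, and inhibitory inputs. The -70 mV resting level and -55 mV threshold are the values the article gives in the next section; the function name `fires` and the per-input millivolt contributions are hypothetical, chosen only for illustration.

```python
RESTING_MV = -70.0    # resting membrane potential quoted in the article
THRESHOLD_MV = -55.0  # firing threshold quoted in the article


def fires(input_contributions_mv):
    """Return True if the summed inputs lift the membrane to threshold.

    input_contributions_mv: hypothetical depolarizations, in mV, each
    contributed by one dendritic input at the same instant.
    """
    membrane_mv = RESTING_MV + sum(input_contributions_mv)
    return membrane_mv >= THRESHOLD_MV


# Three inputs of 5 mV each lift the membrane by 15 mV, reaching threshold.
print(fires([5.0, 5.0, 5.0]))
# A weaker set of inputs leaves the membrane below threshold.
print(fires([5.0, 4.0, 3.0]))
```

The all-or-nothing return value mirrors the point made above: the neuron either outputs a spike or it does not, depending only on whether the summed input crosses the threshold.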

Since we’re all a bunch of electronic types here, you might be thinking of these ‘voltage spikes’ as a difference of potential. But that’s not how it works. Not in the brain anyway. Let’s take a closer look at how electricity flows from neuron to neuron.

Action Potentials – The Communication Protocol of the Brain

The axon is covered in a myelin sheath, which acts as an insulator. There are small breaks in the sheath along the length of the axon called nodes of Ranvier, named after their discoverer. It’s important to note that these nodes are where the ion channels sit. In the spaces just outside and inside of the axon membrane are concentrations of potassium and sodium ions. The ion channels open and close, creating a local difference in the concentration of sodium and potassium ions.

We all should know that an ion is an atom with a charge. In a resting state, the sodium/potassium ion concentration creates a -70 mV difference of potential between the outside and inside of the axon membrane, with a higher concentration of sodium ions outside and a higher concentration of potassium ions inside. The soma will create an action potential when -55 mV is reached. When this happens, a sodium ion channel will open. This lets positive sodium ions from outside the axon membrane leak inside, changing the sodium/potassium ion concentration inside the axon, which in turn changes the difference of potential from -55 mV to around +40 mV. This process is known as depolarization.

One by one, sodium ion channels open along the entire length of the axon. Each one opens only for a short time, and immediately afterward, potassium ion channels open, allowing positive potassium ions to move from inside the axon membrane to the outside. This changes the concentration of sodium/potassium ions and brings the difference of potential back to its resting value of -70 mV in a process known as repolarization. From start to finish, the process takes about five milliseconds to complete. The process causes a 110 mV voltage spike to ride down the length of the entire axon, and is called an action potential. This voltage spike will end up at a synapse with another neuron. If that particular neuron gets enough of these spikes, it too will create an action potential. This is the basic process of how electrical patterns propagate throughout the cortex.
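To make the numbers concrete, here is a rough Python sketch of the spike at a single point on the axon, using the voltages and the five millisecond duration quoted above. The straight-line shape and the 1 ms rise time are assumptions made purely for illustration; the article does not give the split between depolarization and repolarization, and a real action potential is not piecewise linear.

```python
RESTING_MV = -70.0    # resting potential (from the article)
THRESHOLD_MV = -55.0  # level at which the sodium channels open (from the article)
PEAK_MV = 40.0        # approximate peak after depolarization (from the article)
DURATION_MS = 5.0     # start-to-finish time (from the article)
RISE_MS = 1.0         # ASSUMPTION: time spent depolarizing, not given in the article


def membrane_mv(t_ms):
    """Idealized membrane potential t_ms after threshold is crossed."""
    if t_ms < 0 or t_ms >= DURATION_MS:
        return RESTING_MV
    if t_ms < RISE_MS:
        # Depolarization: sodium flows in, potential climbs toward the peak.
        return THRESHOLD_MV + (PEAK_MV - THRESHOLD_MV) * t_ms / RISE_MS
    # Repolarization: potassium flows out, potential falls back to rest.
    fall_ms = DURATION_MS - RISE_MS
    return PEAK_MV + (RESTING_MV - PEAK_MV) * (t_ms - RISE_MS) / fall_ms


# Height of the spike measured from rest to peak.
spike_amplitude_mv = PEAK_MV - RESTING_MV

for t in (0.0, 0.5, 1.0, 3.0, 5.0):
    print(f"t = {t:3.1f} ms  ->  {membrane_mv(t):6.1f} mV")
print(f"spike amplitude: {spike_amplitude_mv:.0f} mV")
```

The amplitude line is where the 110 mV figure comes from: it is the distance from the -70 mV resting level up to the +40 mV peak.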

The mammalian brain, specifically the cortex, is an incredible machine and capable of far more than even our most powerful computers. Understanding how it works will give us a better insight into building intelligent machines. And now that you know the basic electrical properties of a neuron, you’re in a better position to understand artificial neural networks.

Sources

Action Potential in Neurons, via YouTube

On Intelligence, by Jeff Hawkins, ISBN 978-0805078534

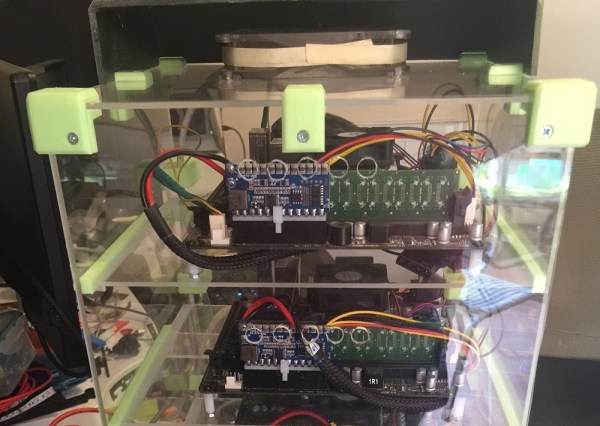

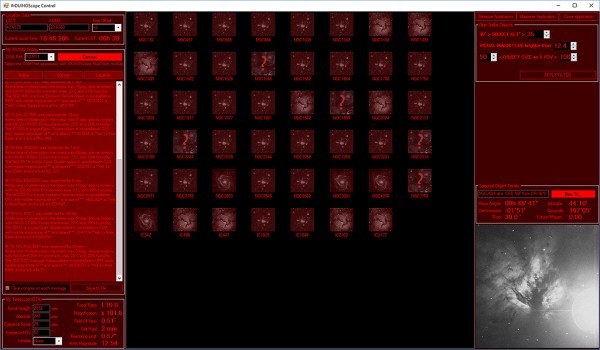

and such systems have been available for decades. They are unfortunately quite expensive. So [Dessislav Gouzgounov] took matters into his own hands and developed the

and such systems have been available for decades. They are unfortunately quite expensive. So [Dessislav Gouzgounov] took matters into his own hands and developed the

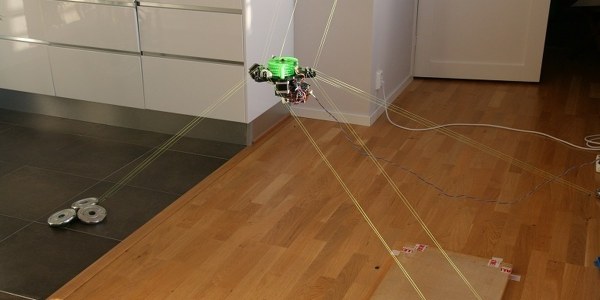

[Torbjørn Ludvigsen] is a physics major out of Umea University in Sweden, and built the Hangprinter for only $250 in parts. It follows the RepRap tradition of being

[Torbjørn Ludvigsen] is a physics major out of Umea University in Sweden, and built the Hangprinter for only $250 in parts. It follows the RepRap tradition of being

display that had decent resolution and 16 levels of grey. But would 16 levels be sufficient to produce an animation that’s pleasing to the eye?

display that had decent resolution and 16 levels of grey. But would 16 levels be sufficient to produce an animation that’s pleasing to the eye?

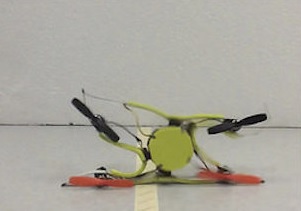

ask yourself while picking up the pieces of you shiny new quad off the ground… “they made these things out of flexible material?”

ask yourself while picking up the pieces of you shiny new quad off the ground… “they made these things out of flexible material?”