The crystal radio is a time-honored build that sadly doesn’t get much traction these days. Once a rite of passage for electronics hobbyists, the classic coil-on-an-oatmeal-carton and cat’s whisker design just isn’t that easy to pull off anymore, mainly because the BOM isn’t really something that you can just whistle up from DigiKey or Mouser.

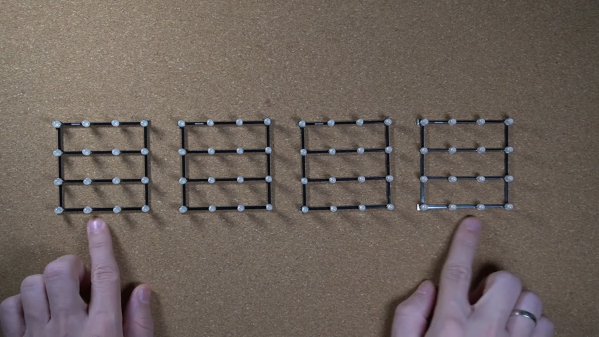

Or is it? To push the crystal radio into the future a bit, [tsbrownie] tried to design a receiver around standard surface-mount inductors, and, spoiler alert, it didn’t go so well. His starting point was a design using a hand-wound air-core coil, a germanium diode for a detector, and a variable capacitor that was probably scrapped from an old radio. The coil had three sections, so [tsbrownie] first estimated the inductance of each section and sourced some surface-mount inductors that were as close as possible to their values. This required putting standard-value inductors in series and soldering taps into the correct places, but at best the SMD coil was only an approximation of the original air-core coil. Plugging the replacement coil into the crystal radio circuit was unsatisfying, to say the least. Only one AM station was heard, and then only barely. A few tweaks to the SMD coil improved the sensitivity of the receiver a bit, but still only brought in one very local station.
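For a rough feel of the arithmetic behind swapping a wound coil for a string of chip inductors, here's a minimal Python sketch. It assumes the inductors are uncoupled, so their values simply add in series, and it uses the textbook LC resonance formula to see what range a variable capacitor would tune. The inductor and capacitor values are hypothetical placeholders of ours, not figures from [tsbrownie]'s build.

```python
import math

def series_inductance(values_uh):
    """Total inductance of uncoupled inductors in series (microhenries)."""
    return sum(values_uh)

def resonant_freq_khz(l_uh, c_pf):
    """Resonant frequency f = 1 / (2*pi*sqrt(L*C)), returned in kHz."""
    l_h = l_uh * 1e-6   # microhenries -> henries
    c_f = c_pf * 1e-12  # picofarads -> farads
    return 1.0 / (2 * math.pi * math.sqrt(l_h * c_f)) / 1e3

# Hypothetical standard-value parts standing in for the three coil sections.
sections_uh = [100.0, 100.0, 47.0]
total_uh = series_inductance(sections_uh)

# Hypothetical variable capacitor swing, minimum and maximum in pF.
for c_pf in (30.0, 365.0):
    f = resonant_freq_khz(total_uh, c_pf)
    print(f"L = {total_uh:.0f} uH, C = {c_pf:.0f} pF -> {f:.0f} kHz")
```

With these made-up values the tank tunes from roughly 530 kHz to a bit over 1.8 MHz, which brackets the AM broadcast band. Getting the inductance right is only part of the story, though; it says nothing about coil Q or how well the coil couples to the antenna.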

[tsbrownie] chalked up the failure to the lower efficiency of SMD inductors, but we’re not so sure about that. If memory serves, the windings in an SMD inductor are usually wrapped around a core that sits perpendicular to the PCB. If that’s true, then perhaps stacking the inductors rather than connecting them end-to-end would have worked better. We’d try that now if only we had one of those nice old variable caps. Still, hats off to [tsbrownie] for at least giving it a go.
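One reason the physical arrangement could matter: when two inductors sit close enough to share flux, their series inductance is no longer a simple sum. The sketch below uses the standard coupled-inductor relation, L = L1 + L2 ± 2M with M = k·√(L1·L2). The coupling coefficient `k` and the part values are pure assumptions for illustration; nobody has measured them for this build, and coupling is only one of several effects that stacking might change.

```python
import math

def coupled_series_inductance(l1_uh, l2_uh, k, aiding=True):
    """Series inductance of two magnetically coupled inductors.

    k is the coupling coefficient (0 = no shared flux, 1 = perfect coupling).
    aiding=True means the fields reinforce; False means they oppose.
    """
    if not 0.0 <= k <= 1.0:
        raise ValueError("coupling coefficient k must be between 0 and 1")
    m = k * math.sqrt(l1_uh * l2_uh)  # mutual inductance
    sign = 1 if aiding else -1
    return l1_uh + l2_uh + sign * 2 * m

# Hypothetical pair of 100 uH chip inductors at a few assumed k values.
for k in (0.0, 0.2, 0.5):
    aid = coupled_series_inductance(100.0, 100.0, k, aiding=True)
    opp = coupled_series_inductance(100.0, 100.0, k, aiding=False)
    print(f"k = {k:.1f}: aiding {aid:.0f} uH, opposing {opp:.0f} uH")
```

Even modest coupling shifts the total noticeably in either direction, so a stacked string of inductors may land on a different inductance than the same parts laid end-to-end.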

Note: Right after we wrote this, a follow-up video popped up in our feed where [tsbrownie] tried exactly the modification we suggested, and it certainly improves performance, but in a weird way. The video is included below if you want to see the details.
