Our understanding of the sensory capabilities of animals still has a lot of blanks, and new discoveries often serve as inspiration for new technology. Researchers from the University of Leeds and the Royal Veterinary College have found that mosquitoes can navigate in complete darkness by detecting the subtle changes in airflow created when they fly close to obstacles. They then used this knowledge to build a simple but effective sensor for drones.

Extremely sensitive receptors in the Johnston’s organ, at the base of each antenna on a mosquito’s head, allow it to sense these tiny changes in airflow. Using fluid dynamics simulations based on high-speed photography, the researchers found that the largest changes in airflow occur over the mosquito’s head, which means the receptors are in exactly the right place. From their data, the scientists predict that mosquitoes could detect surfaces at a distance of more than 20 wing lengths. Considering how far 20 arm lengths is for us, that’s pretty impressive. If you can get past the paywall, you can read the full article in the journal Science.

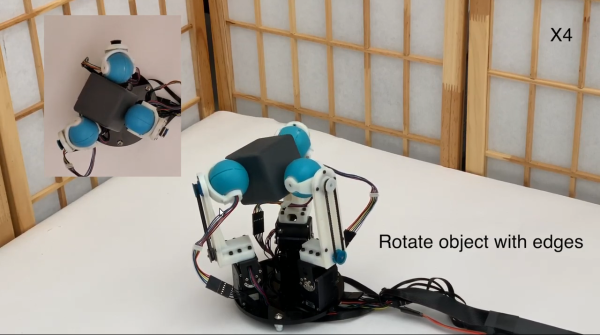

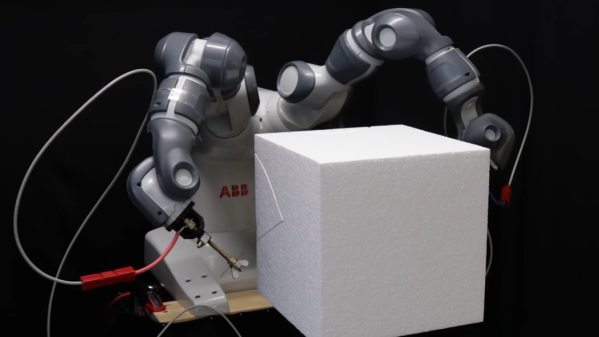

Using their newfound knowledge, the researchers equipped a small drone with probe tubes connected to differential pressure sensors. With these sensors, the drone could detect when it got close to a wall or the floor and avoid a collision. The sensors also require very little computational power, because detection comes down to a basic threshold on the pressure reading. Check out the video after the break.
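To give a feel for how little processing a threshold detector needs, here is a minimal sketch in Python. It is not the researchers' code: the probe directions, the 2 Pa threshold, and the mapping from a blocked direction to an avoidance action are all hypothetical placeholders chosen for illustration. Each sensor reading gets exactly one comparison against the threshold.

```python
from dataclasses import dataclass

# Hypothetical threshold in pascals; a real value would need tuning for the
# actual sensors, probe tube placement, and flight speed.
OBSTACLE_THRESHOLD_PA = 2.0


@dataclass
class ProbeReading:
    """One differential pressure sample from a probe tube (hypothetical layout)."""
    direction: str   # e.g. "down" for a probe facing the floor
    delta_pa: float  # pressure difference across the sensor, in pascals


def blocked_directions(readings, threshold=OBSTACLE_THRESHOLD_PA):
    """Return the directions whose pressure change exceeds the threshold."""
    # One absolute-value comparison per sensor is the entire detection step.
    return [r.direction for r in readings if abs(r.delta_pa) > threshold]


def avoidance_command(readings, threshold=OBSTACLE_THRESHOLD_PA):
    """Map blocked directions to a simple avoidance action (hypothetical policy)."""
    blocked = blocked_directions(readings, threshold)
    if "down" in blocked:
        return "climb"  # floor is close: gain altitude first
    if "front" in blocked:
        return "stop"   # wall ahead: halt forward motion
    return "continue"


if __name__ == "__main__":
    samples = [ProbeReading("front", 0.4), ProbeReading("down", 3.1)]
    print(blocked_directions(samples))  # ['down']
    print(avoidance_command(samples))   # climb
```

A real flight controller might add hysteresis or a short moving average so that a single noisy sample doesn't trigger a maneuver, but even then the cost stays at a handful of arithmetic operations per sensor.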

Although this sensing method might not replace ultrasonic or time-of-flight sensors for drones, it does show that there is still a lot we can learn from nature, and that simpler is often better. We’ve already seen simple insect-inspired navigation for drone swarms, as well as an optical navigation device for humans that works without satellites and only requires a view of the sky. Thanks for the tip [Qes]!