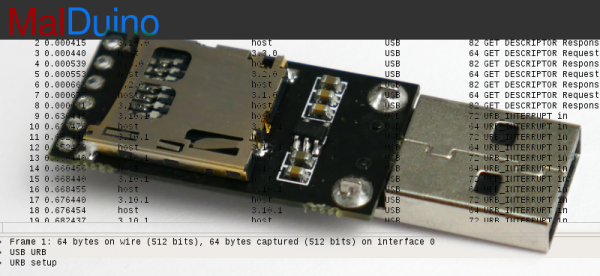

Pick a lock, plug in a WiFi-enabled Raspberry Pi and that’s nearly all there is to it.

There’s more to it than that, of course, but the wind farms that [Jason Staggs] and his fellow researchers at the University of Tulsa had permission to access were, alarmingly, devoid of security measures beyond a padlock or tumbler lock on the turbines’ server closet. Because wind farms generally sit in open fields away from watchful eyes, there is little to deter a would-be attacker.

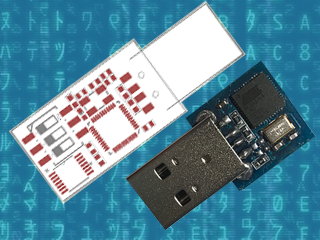

[Staggs] notes that a savvy intruder could shut down entire farms, or cause considerable and expensive damage to them, without alerting their operators, usually needing access to only one turbine to do so. Once they’d entered the turbine’s innards, the team made good on their penetration test by plugging their Pi into the turbine’s programmable automation controller and circumventing the modest network security.

At the Black Hat security conference, the team are presenting their findings from the five farms they accessed, with manufacturers, company names, locations and other identifying details withheld for obvious reasons. One hopes that security measures are stepped up in the near future if wind power is to become an integral part of the power grid.

All this talk of hacking and wind reminds us of our favourite wind-powered wanderer: the Strandbeest!

[via WIRED]