Last weekend, DEF CON held their “SAFE MODE” conference: instead of meeting at a physical venue, the entire conference was held online. All the presentations are available on the official DEF CON YouTube channel. We’ll cover a few of the presentations here, and watch out for other articles on HaD with details on the other talks that we found interesting.

Continue reading “This Week In Security: DEF CON, Intel Leaks, Snapdragon, And A Robot Possessed”

Security Hacks 1542 Articles

Separation Between WiFi And Bluetooth Broken By The Spectra Co-Existence Attack

This year, at DEF CON 28 Safe Mode, security researchers [Jiska Classen] and [Francesco Gringoli] gave a talk about inter-chip privilege escalation using wireless coexistence mechanisms. The title is catchy, sure, but what exactly is this about?

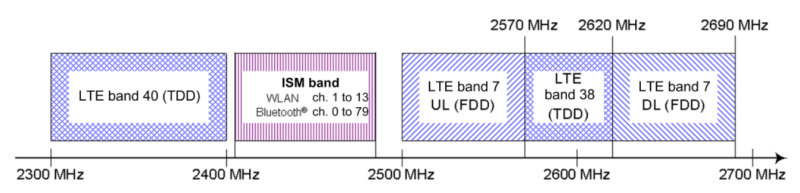

To understand this security flaw, or group of security flaws, we first need to know what wireless coexistence mechanisms are. Modern devices can support cellular and non-cellular wireless communications standards at the same time (LTE, WiFi, Bluetooth). Given the desired miniaturization of our devices, the different subsystems that support these communication technologies must reside in very close physical proximity within the device (in-device coexistence). The resulting high level of reciprocal leakage can at times cause considerable interference.

Interference can occur in several scenarios. The main ones are:

- Two radio systems occupy neighboring frequencies and carrier leakage occurs

- The harmonics of one transmitter fall on frequencies used by another system

- Two radio systems share the same frequencies
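The harmonics case comes down to simple arithmetic: the n-th harmonic of a transmit band is that band multiplied by n, and trouble starts when the result overlaps a band another radio is listening on. Here is a minimal sketch of that check. The transmit band is a made-up example, not taken from the talk, and the function name is our own.

```python
def harmonic_overlaps(tx_band, victim_band, max_order=5):
    """Return the harmonic orders of tx_band that overlap victim_band.

    Both bands are (low_mhz, high_mhz) tuples.
    """
    tx_lo, tx_hi = tx_band
    v_lo, v_hi = victim_band
    hits = []
    for n in range(2, max_order + 1):
        # The n-th harmonic of a band is the band scaled by n.
        h_lo, h_hi = n * tx_lo, n * tx_hi
        if h_lo <= v_hi and h_hi >= v_lo:
            hits.append(n)
    return hits


ISM_2G4 = (2400.0, 2483.5)       # 2.4 GHz ISM band used by WiFi and Bluetooth
hypothetical_tx = (800.0, 820.0)  # assumed transmitter band, for illustration only

print(harmonic_overlaps(hypothetical_tx, ISM_2G4))  # → [3]
```

In this example the third harmonic (2400 to 2460 MHz) lands squarely in the 2.4 GHz band, which is exactly the kind of situation coexistence mechanisms have to deal with.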

To tackle these kinds of problems, manufacturers had to implement strategies so that a device's wireless chips can coexist (sometimes even sharing the same antenna) while keeping interference to a minimum. These strategies are called coexistence mechanisms. They enable high-performance communication on intersecting frequency bands, and are therefore essential to any modern mobile device. Although open solutions exist, such as the Mobile Wireless Standards, manufacturers usually implement proprietary solutions.

Spectra

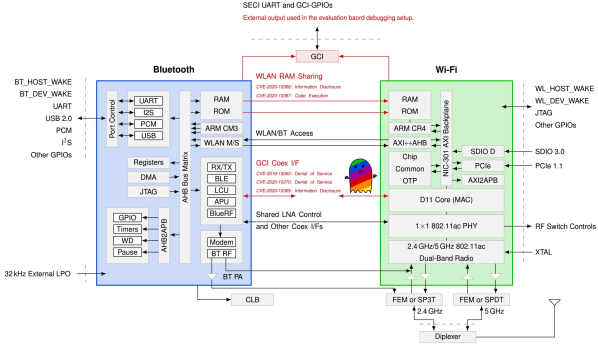

Spectra is a new attack class demonstrated in this DEF CON talk, focused on Broadcom and Cypress WiFi/Bluetooth combo chips. On a combo chip, WiFi and Bluetooth run on separate processing cores, and coexistence information is exchanged directly between the cores over the Serial Enhanced Coexistence Interface (SECI) without going through the underlying operating system.

Spectra-class attacks exploit flaws in the interfaces between wireless cores, through which one core can achieve denial of service (DoS), information disclosure, and even code execution on another core. From an attacker's perspective, the idea is to leverage a Bluetooth subsystem remote code execution (RCE) to perform WiFi RCE and maybe even LTE RCE. Keep in mind that this remote code execution happens in the wireless core subsystems, and so can be completely invisible to the main device CPU and OS.

Join me below where the talk is embedded and where I will also dig into the denial of service, information disclosure, and code execution topics of the Spectra attack.

Continue reading “Separation Between WiFi And Bluetooth Broken By The Spectra Co-Existence Attack”

This Week In Security: Garmin Ransomware, KeePass, And Twitter Warnings

On July 23, multiple services related to Garmin were taken offline, including their call center and aviation-related services. Thanks to information leaked by Garmin employees, we know that this multi-day outage was caused by the WastedLocker ransomware campaign. After four days, Garmin was able to start the process of restoring the services.

It’s reported that the requested ransom was an eye-watering $10 million. It’s suspected that Garmin actually paid the ransom. A leaked decryptor program confirms that they received the decryption key. The attack was apparently very widespread through Garmin’s network, as it seems that both workstations and public-facing servers were impacted. Let’s hope Garmin learned their lesson and is shoring up their security practices.

Continue reading “This Week In Security: Garmin Ransomware, KeePass, And Twitter Warnings”

Cryptographic LCDs Use The Magic Of XOR

Digital security is always a moving target, with no one device or system ever being truly secure. Whether it's cryptographic systems being compromised, software being hacked, or baked-in hardware vulnerabilities, it seems there is always a hole to be found. [Max Justicz] has a taste for such topics, and decided to explore the possibility of creating a secure communications device using a pair of LCDs.

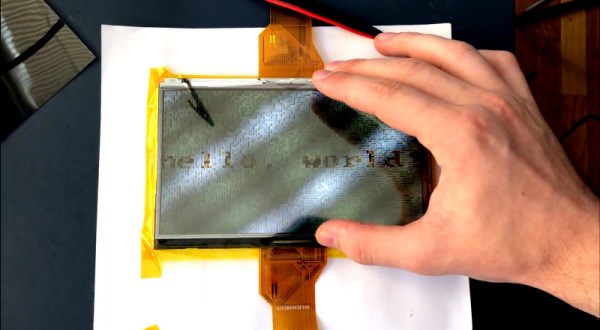

In a traditional communications system, when a message is decrypted and the plaintext is displayed on screen, there’s a possibility that any other software running could capture the screen or memory state, and thus capture the secret data. To get around this, [Max]’s device uses a concept called visual cryptography. Two separate, independent systems with their own LCD each display a particular pattern. It is only when the two displays are combined together with the right filters that the message can be viewed by the user, thanks to the visual XOR effect generated by the polarized nature of LCDs.
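To get a feel for why neither display alone gives anything away, here is a minimal software sketch of the same XOR idea. This is not [Max]'s implementation, since his device does the XOR optically with polarizers. The function names are our own, and the example assumes one share is purely random while the other is the message XORed with it.

```python
import secrets


def make_shares(message: bytes):
    """Split a message into two shares that are each random-looking on their own."""
    pad = secrets.token_bytes(len(message))               # share A: pure randomness
    masked = bytes(m ^ p for m, p in zip(message, pad))   # share B: message XOR pad
    return pad, masked


def combine(share_a: bytes, share_b: bytes) -> bytes:
    """XOR the two shares back together, as the stacked displays do optically."""
    return bytes(a ^ b for a, b in zip(share_a, share_b))


share_a, share_b = make_shares(b"HELLO")
print(combine(share_a, share_b))  # → b'HELLO'
```

Because share A is random and share B is the message masked by it, a compromised system holding only one of them learns nothing about the plaintext beyond its length.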

The device as shown, working with both transparent OLEDs and traditional LCDs, is merely a proof of concept. [Max] envisions a device wherein each display is independently sourced, such that even if one is compromised, it doesn’t have the full message, and thus can’t compromise the system. [Max] also muses about the problem of side-channel attacks, and other factors to consider when trying to build a truly secure system.

We love a good discussion of cryptography and security around here; [John McMaster]’s talk on crypto ignition keys was a particular hit at Supercon last year. Video after the break.

This Week In Security: Twilio, PongoTV, And BootHole

Twilio, the cloud provider for all things telecom, had an embarrassing security fail a couple of weeks ago. The problem was the Amazon S3 bucket that Twilio was using to host part of their public-facing content. The bucket was configured for public read-write access. Anyone could use the Amazon S3 API to make changes to the files stored there.
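As a rough illustration of what "public read-write" can look like, here is a small sketch that scans a bucket ACL for grants that let anyone write. We don't know exactly how Twilio's bucket was configured (access can also be opened up through a bucket policy), so this assumes the ACL route. The dictionary follows the shape of an S3 `GetBucketAcl` response, and the function name and sample ACL are hypothetical.

```python
# Group URI that S3 uses to mean "anyone on the internet".
ALL_USERS_URI = "http://acs.amazonaws.com/groups/global/AllUsers"

# ACL permissions that allow changing objects or the ACL itself.
WRITE_PERMISSIONS = {"WRITE", "WRITE_ACP", "FULL_CONTROL"}


def public_write_grants(acl: dict) -> list:
    """Return the write-capable permissions granted to everyone in an ACL."""
    risky = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        if (grantee.get("URI") == ALL_USERS_URI
                and grant.get("Permission") in WRITE_PERMISSIONS):
            risky.append(grant["Permission"])
    return risky


# Hypothetical ACL, made up for illustration.
sample_acl = {
    "Grants": [
        {"Grantee": {"Type": "Group", "URI": ALL_USERS_URI}, "Permission": "READ"},
        {"Grantee": {"Type": "Group", "URI": ALL_USERS_URI}, "Permission": "WRITE"},
    ]
}

print(public_write_grants(sample_acl))  # → ['WRITE']
```

A public `READ` grant is normal for hosting static files. It's the public `WRITE` that turns a content bucket into an open invitation.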

The files in question were protected behind Cloudflare’s CDN, but there’s a catch to Cloudflare’s service. If you know the details of the service behind Cloudflare, it can often be interacted with directly. In many cases, knowing the IP address of the server being protected is enough to bypass Cloudflare altogether. In this case, the service behind the CDN is Amazon’s S3. Any changes made to the files there are picked up by the CDN.

Someone discovered the insecure bucket, and modified a JavaScript file that is distributed as part of the Twilio JS SDK. That modification was initially described as “non-malicious”, but in the official incident report, Twilio states that the injected code is part of an ongoing Magecart campaign carried out against misconfigured S3 buckets.

Continue reading “This Week In Security: Twilio, PongoTV, And BootHole”

Learn Software Reverse Engineering: Ghidra Class Videos From HackadayU Now Available!

The HackadayU video series on learning to use Ghidra is now available!

Ghidra is a tool for reverse engineering software binaries. You may remember that it was released as Open Source by the NSA last year. It does an amazing job of turning compiled binaries that tell the computer how to operate into human-readable C code. The catch is that there’s a learning curve to making the most out of what Ghidra gives you. Enter the Introduction to Reverse Engineering with Ghidra class led by Matthew Alt as part of the HackadayU series. This set of four one-hour virtual classroom videos was just made available so that you can take the course at your own pace.

Matthew has actually been schooling us for a while. He’s also known as [wrongbaud] and we’ve been spending a lot of time covering his reverse engineering projects, including the teardowns of NES-on-a-chip hardware and his excellent hacker’s guide to JTAG. His HackadayU class continues that legacy by pulling together course materials for a high-quality hands-on walk through Ghidra. You’ll get a dose of computer architecture, the compilation process, ELF file structure, and x86_64 instruction sets along the way. He’s done a superb job of making example code for the coursework available.

While this was the first HackadayU course, there are more on the way. Anool Mahidharia just finished teaching KiCAD & FreeCAD 101 and videos will be published as soon as the editing process is complete. The fall lineup of classes is shaping up nicely and will be announced soon. As a sneak peek, we have instructors working on classes covering tiny machine learning, a second set of classes on Ghidra reverse engineering, a protocol deep dive (I2C, SPI, one-wire, JTAG etc.), Linux on Raspberry Pi, building interactive art, all about LEDs, and an intro to design with Rhino. Keep your eye on Hackaday for more info as classes are added to the schedule.

Polymorphic String Encryption Gives Code Hackers Bad Conniptions

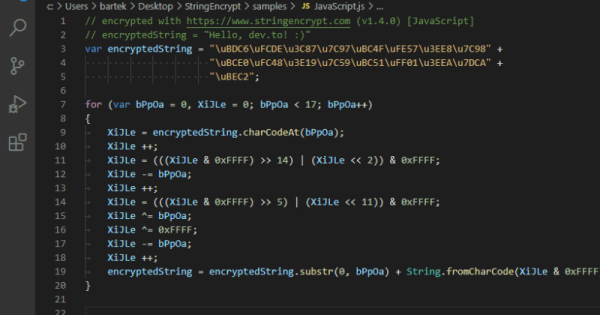

When it comes to cyber security, there’s nothing worse than storing important secret data in plaintext. With even the greenest malicious actors more than capable of loading up a hex editor or decompiler, code can quickly be compromised when proper precautions aren’t taken in the earliest stages of development. To help avoid this, encryption can be used to hide sensitive data from prying eyes. While a simple XOR used to be a quick and dirty way to do this, for something really sophisticated, polymorphic encryption is a much better way to go.

A helpful tool to achieve this is StringEncrypt by [PELock]. An extension for Visual Studio Code, it’s capable of encrypting strings and data files in over 10 languages. Using polymorphic encryption techniques, the algorithm used is unique every time, along with the encryption keys themselves. This makes it far more difficult for those reverse engineering a program to decrypt important strings or data.
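To illustrate the general idea of "a different algorithm every time", here is a toy sketch. It is not StringEncrypt's actual algorithm, which we haven't examined. It simply assumes a polymorphic scheme can be modeled as a randomly chosen chain of reversible byte operations with random keys, and all names in it are our own.

```python
import random


def rol8(b, r):
    """Rotate an 8-bit value left by r bits (0 <= r < 8)."""
    return ((b << r) | (b >> (8 - r))) & 0xFF


def ror8(b, r):
    """Rotate an 8-bit value right by r bits (0 <= r < 8)."""
    return ((b >> r) | (b << (8 - r))) & 0xFF


# Each entry maps an operation name to an (encode, decode) pair of inverses.
OPS = {
    "xor": (lambda b, k: b ^ k, lambda b, k: b ^ k),
    "add": (lambda b, k: (b + k) & 0xFF, lambda b, k: (b - k) & 0xFF),
    "rol": (lambda b, k: rol8(b, k % 8), lambda b, k: ror8(b, k % 8)),
}


def make_recipe(rng, steps=4):
    """Pick a random sequence of (operation, key) pairs: the 'unique algorithm'."""
    return [(rng.choice(sorted(OPS)), rng.randrange(1, 256)) for _ in range(steps)]


def encode(data: bytes, recipe) -> bytes:
    out = bytearray(data)
    for name, key in recipe:
        enc = OPS[name][0]
        out = bytearray(enc(b, key) for b in out)
    return bytes(out)


def decode(data: bytes, recipe) -> bytes:
    out = bytearray(data)
    # Undo the steps in reverse order, using each operation's inverse.
    for name, key in reversed(recipe):
        dec = OPS[name][1]
        out = bytearray(dec(b, key) for b in out)
    return bytes(out)


recipe = make_recipe(random.SystemRandom())
blob = encode(b"API_KEY", recipe)
assert decode(blob, recipe) == b"API_KEY"
```

Every run produces a different recipe, so a decoder written for one build's strings won't work on the next. Bear in mind that this is obfuscation rather than strong cryptography: the decoding recipe has to ship inside the program, so it raises the effort for a reverse engineer but doesn't make recovery impossible.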

While the free demo is limited in scope, the price for the full version is quite reasonable, and we expect many out there could find it a useful addition to their development toolkit. We’ve discussed similar techniques before, often used to make harder-to-detect malware.

[Thanks to Dawid for the tip!]