Firmware is caught between hardware and software. What do I mean? Microcontroller designers compete on how many interesting and useful hardware peripherals they can add to the chips, and they are all different on purpose. Meanwhile, software designers want to abstract away from the intricacies and idiosyncrasies of the hardware peripherals, because code wants to be generic and portable. Software and hardware designers are Montagues and Capulets, and we’re caught in the crossfire.

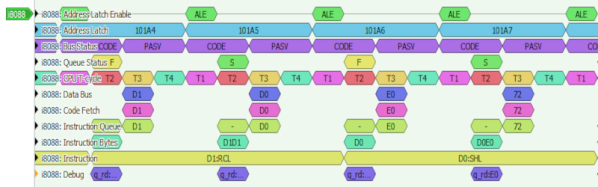

I’m in the middle of a design that takes advantage of perhaps one of the most idiosyncratic microcontroller peripherals out there – the RP2040’s PIOs. Combining these with the chip’s direct memory access (DMA) controllers allows some fairly high-bandwidth processing, without bogging down the CPUs. But because I want this code to be usable and extensible by a wide audience, I’m also trying to write it in MicroPython. And configuring DMA controllers is just too idiosyncratic for MicroPython.

But there’s an escape hatch. In my case, it’s courtesy of the machine.mem32 function, which lets you read and write directly into the chip’s memory, including all of the memory-mapped configuration registers. Sure, it’s absurdly low-level, but it means that anything you read about in the chip’s datasheet, you can do right away, and from within the relative comfort of a Micropython program. Other languages have their PEEK and POKE equivalents as well, or allow inline assembler, or otherwise furnish you the tools to get closer to the metal without having to write all the rest of your code low level.

I’m honestly usually a straight-C or even Forth programmer, but this experience of using a higher-level language and simultaneously being able to dive down to the lowest levels of bit-twiddling at the same time has been a revelation. If you’re just using Micropython, open up your chip’s datasheet and see what it can offer you. Or if you’re programming at the configure-this-register level, check out the extra benefits you can get from a higher-level language. You can have your cake and eat it too!