Any software that accepts user input must make some effort to sanitize incoming data, lest unexpected and unwelcome things happen. Here to make that easier is the Big List of Naughty Strings, an evolving list of edge cases, unusual characters, script-injection fragments, and all-around nonstandard stuff aimed at QA testers, developers, and the curious. It’s a big list that has grown over the years, and every piece of it is still (technically) just a string.

These strings have a high probability of surfacing any problems with handling user input. They won’t necessarily break anything, but they may cause unexpected things to happen and help point out any issues that need fixing. After all, many attacks hinge on being able to send unexpected inputs that don’t get properly sanitized.
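As a rough illustration of how such strings get used, here is a minimal sketch in Python. The handful of sample strings is hand-picked in the spirit of the list rather than copied from it, and `save_and_load` is a hypothetical stand-in for whatever code actually handles user input in your system. The idea is simply to push each string through and flag anything that raises an exception or comes back changed.

```python
import json

# A tiny hand-picked sample in the spirit of the list; the real list is far larger.
SAMPLE_STRINGS = [
    "",                           # empty input
    "undefined",                  # reserved-looking words
    "null",
    "<script>alert(1)</script>",  # script-injection fragment
    "' OR '1'='1",                # SQL-injection fragment
    "Ω≈ç√∫",                      # unusual symbols
    "田中さん",                    # multi-byte characters
    "\u202etest",                 # right-to-left override character
]


def save_and_load(value: str) -> str:
    """Hypothetical stand-in for the code under test: a JSON round trip."""
    return json.loads(json.dumps({"name": value}))["name"]


def find_failures(handler, strings):
    """Run every string through handler; collect crashes and altered values."""
    failures = []
    for s in strings:
        try:
            result = handler(s)
        except Exception as exc:  # a crash on plain input is itself a finding
            failures.append((s, repr(exc)))
            continue
        if result != s:
            failures.append((s, f"came back as {result!r}"))
    return failures


if __name__ == "__main__":
    for original, problem in find_failures(save_and_load, SAMPLE_STRINGS):
        print(f"{original!r}: {problem}")
```

An empty result only means this particular path survived this particular sample. Swapping in a real handler and the full list is where the interesting findings tend to show up.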

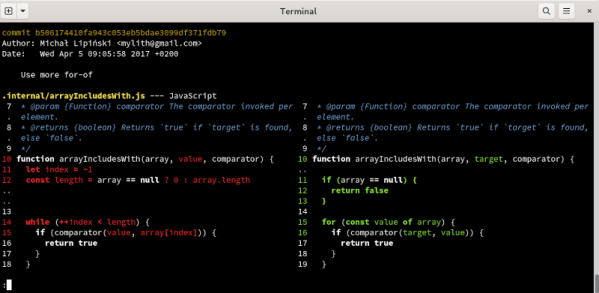

Finding bad inputs is not always entirely straightforward, but at least the Big List of Naughty Strings is available in a variety of formats to make it easy to use. [Max Woolf] has been maintaining the list for years, but if you haven’t heard of it yet and think it might come in useful, now’s the time to give it a look. That way you can help ensure your system can handle things like someone registering a company named ; DROP TABLE "COMPANIES";-- LTD.
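For the curious, here is one way a system might cope with that company name, sketched in Python with the standard library's `sqlite3` module. The `companies` table and the `register_company` and `list_companies` helpers are made up for this example. The point is that a parameterized query hands the name to the database as data, so it is never parsed as SQL.

```python
import sqlite3

# The company name from the example above, with plain ASCII quotes and dashes.
NAME = '; DROP TABLE "COMPANIES";-- LTD'


def register_company(conn, name):
    """Insert a company name using a placeholder instead of string formatting."""
    # The ? placeholder keeps the value out of the SQL text entirely.
    conn.execute("INSERT INTO companies (name) VALUES (?)", (name,))
    conn.commit()


def list_companies(conn):
    """Return all stored company names in insertion order."""
    rows = conn.execute("SELECT name FROM companies ORDER BY rowid")
    return [row[0] for row in rows]


# Hypothetical schema for the sketch: an in-memory database with one table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE companies (name TEXT NOT NULL)")

register_company(conn, NAME)
print(list_companies(conn))
```

If the table is still there afterwards and the name comes back exactly as entered, the insert path at least is doing its job. Building the same query with string concatenation is the pattern the list is meant to catch.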