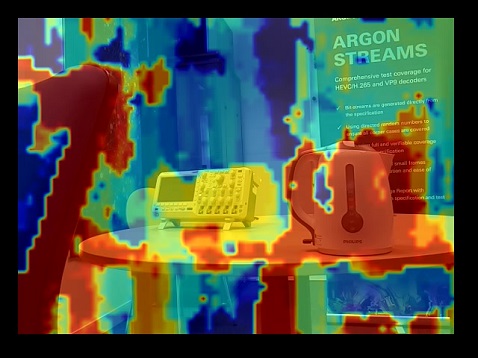

You will never see every episode of Doctor Who, and that’s not because viewing every single episode would require a few months of constant binging. Many of the tapes containing early episodes were destroyed, and of the episodes that still exist, many live on only as tele-recordings: black and white films of the original color broadcast.

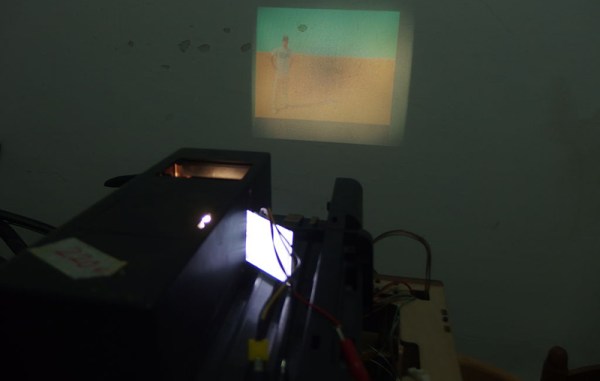

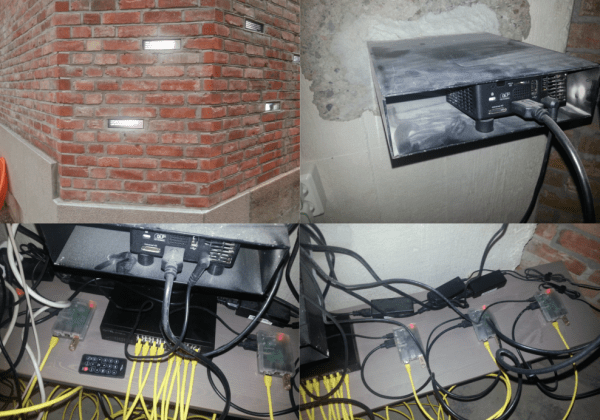

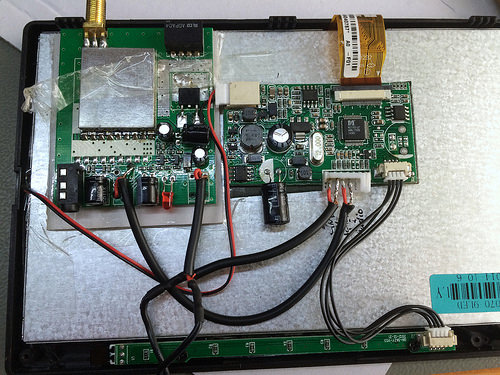

Because of the high resolution of these tele-recordings, remnants of the PAL color-coding signal are hidden away in them. [W.A. Steer] has worked on PAL decoding for several years, and figured that if anyone could recover the color from these tele-recordings, he could.

While the sensors in PAL video cameras are RGB, a PAL television signal is encoded as luminance, Y (a weighted sum of R, G, and B), plus two color-difference signals, U (a scaled B-Y) and V (a scaled R-Y). The Y is just the black and white picture, and U and V set the amplitudes of two subcarrier signals. These subcarriers are 90 degrees out of phase with each other, and the phase of the V component is flipped on every other line (thus Phase Alternating Line). Displaying the combined signal on a black and white screen reveals a fine pattern of ‘chromadots’ that can be used to extract the color.
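For a feel of the encoding described above, here is a minimal Python sketch. It is not [W.A. Steer]'s recovery method. It assumes the standard PAL weights (Y as a weighted sum of R, G, and B, with U and V as scaled B-Y and R-Y) and the usual 4.43361875 MHz subcarrier. The function names are made up for illustration, and which lines get the flipped V phase is an arbitrary choice here.

```python
import math

# PAL color subcarrier frequency in Hz
F_SC = 4_433_618.75


def rgb_to_yuv(r, g, b):
    """Convert RGB (each 0..1) to luminance Y and color differences U, V."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # weighted sum, not a plain R+G+B
    u = 0.492 * (b - y)                    # scaled blue difference
    v = 0.877 * (r - y)                    # scaled red difference
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Invert rgb_to_yuv: recover RGB from Y, U, V."""
    b = y + u / 0.492
    r = y + v / 0.877
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


def composite_sample(y, u, v, t, line):
    """One sample of a simplified composite signal at time t (seconds).

    U and V ride on two subcarriers 90 degrees apart (sine and cosine).
    The V term changes sign on alternate lines; treating even lines as
    the positive ones is an assumption made for this sketch.
    """
    v_sign = 1.0 if line % 2 == 0 else -1.0
    phase = 2.0 * math.pi * F_SC * t
    return y + u * math.sin(phase) + v_sign * v * math.cos(phase)
```

A black and white film of the screen records only the sum returned by something like `composite_sample`, so the subcarrier shows up as fine ripples on top of Y. Those ripples are the chromadots, and getting color back means estimating U and V from them before running a conversion like `yuv_to_rgb`.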