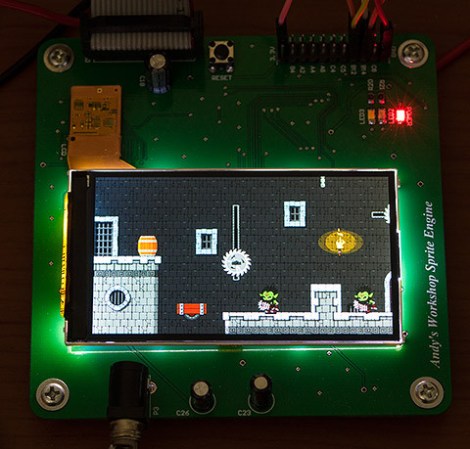

Graphics accelerators move operations to hardware, where they can be executed much faster. This is what allows your Raspberry Pi to display high definition video decently. [Andy]’s latest build is a 2D sprite engine, featuring hardware accelerated graphics on an FPGA.

In the simplest mode, the sprite engine just passes commands through to the LCD. This allows for basic control. The fun part sprite mode, which allows for sprites to be loaded onto the FPGA. At that point, you can show, hide, and move the sprite. By overlapping many sprites, you something like the demo shown above.

The FPGA is from Xilinx, and uses their Block RAM IP to store the state of the sprites. The actual sprite data is contained on a 128 Mb external flash chip, since they require significant space.

The game logic runs on a STM32 Cortex M4 microcontroller which communicates with the FPGA and orders the sprites around. The FPGA then deals with generating frames and sending them to the LCD screen, freeing up the microcontroller.

If you’re wondering about the LCD itself, it’s 3.2″, 640 x 360, and taken from a Ericsson U5 Vivaz cellphone. [Andy] has a detailed writeup on reverse engineering it. After the break, he gives us a video overview of the whole system.

Continue reading “Sprite Graphics Accelerator On An FPGA” →