We’re in the last few weeks for entries in the 2016 Hackaday Prize. Specifically, the challenge is to show off your take on assistive technology. This is a hugely broad category and I’ve been thinking about it for a while. I’m sure there’s a ton of low-hanging fruit that’s not obvious to everyone. This would be a great time to hit up the comments below and leave your “hey, I always thought someone should make…” ideas. I’m looking forward to reading them, and one might just inspire someone to spend the next couple of weeks hammering out a prototype to enter.

For me, it’s medication. I knew this could be a challenging problem, having gone through a few cycles of prescription medicines in my life. But recently I helped out a family member who was suddenly on many medications taken at eight different times a day, with individual prescriptions dosed once, twice, three, and six times per day. This was further compounded by sleep deprivation (having to set alarms at night to take the medicine) and drowsy/woozy effects from the medicine. I can tell you firsthand that this is really tough for anyone to deal with, and it’s incredibly easy to make a mistake or to forget whether you took a dose.

Pill Organizers Do No More or Less

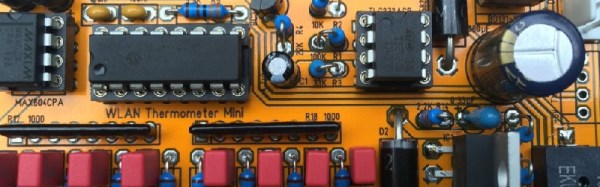

We’ve seen a number of pill organizers before and that’s what I reached for in this case. However, that organizer only had four slots for each day. I didn’t hack it (other than writing on the doors with a Sharpie for when to take each) but even if there were added buttons or LEDs I’m not convinced this would be a marked improvement.

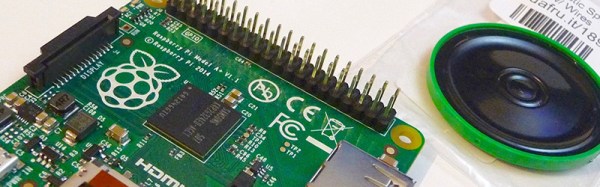

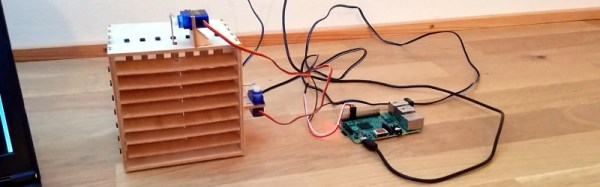

What you see above is my proposal for the medicine problem. Smartphones have become ubiquitous, and the processing power and cameras of even budget phones are mind-blowing. I think it is entirely possible to write an app that uses computer vision to recognize pills and match them against the dosing schedule. This may mean whipping the phone out of your pocket, or designing a pill box that has a phone stand next to it (saying that makes me think of using a Raspberry Pi and a Pi camera). Grab your pills and validate them under the camera.

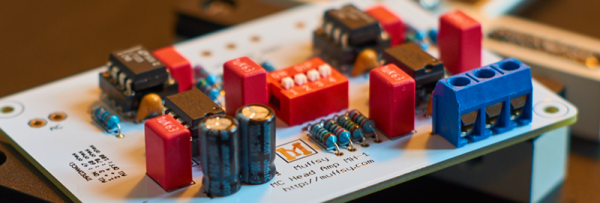

Useful Augmented Reality

The screen of the phone would use augmented reality to overlay information about the pills it sees. You know, like Pokémon Go but in a way that enriches your life: “pills, catch ’em all!” New pills can be learned on the fly, taking the user to a screen to identify the pill and enter the dosing schedule. Taking the validation picture will record when the medicine was taken, and the natural extension of this system is a pharmacy’s ability to push your dose schedule to your account when you pick up the prescription. A stretch goal would be keeping an eye out for interactions.
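To make the record-keeping half of this idea a bit more concrete (not the computer vision part), here is a rough Python sketch of logging doses and working out when the next one is due. The `Medication` class, its fields, and the assumption that doses are spaced evenly across 24 hours are all mine for illustration. A real schedule would come from the prescription, not from this arithmetic.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Medication:
    """Hypothetical record for one prescription (illustrative only)."""
    name: str
    doses_per_day: int
    taken: list = field(default_factory=list)  # timestamps of recorded doses

    @property
    def interval(self) -> timedelta:
        # Assumption: doses are evenly spaced over a 24-hour day.
        return timedelta(hours=24) / self.doses_per_day

    def record_dose(self, when: datetime) -> None:
        # In the app idea, this would be triggered by the validation photo.
        self.taken.append(when)

    def next_due(self, first_dose: datetime) -> datetime:
        # Before any dose is logged, the first scheduled dose is what's due.
        if not self.taken:
            return first_dose
        return max(self.taken) + self.interval

    def is_due(self, now: datetime, first_dose: datetime) -> bool:
        return now >= self.next_due(first_dose)


if __name__ == "__main__":
    start = datetime(2016, 9, 1, 8, 0)
    med = Medication("example pill", doses_per_day=6)
    med.record_dose(start)
    print(med.name, "next due at", med.next_due(start))
```

Even something this small answers the “did I already take that?” question, because the log of timestamps is the source of truth rather than your memory.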

This is all very much like how hospitals do it — they’re scanning bar codes on the packaging and the patient bracelet and recording it. This would be an easier user experience and quite frankly I think companies already in this space (like Snapchat and Niantic) could whip this up in a single-day hackathon no problem.

Is it the perfect system? Maybe not. But there is no perfect system or we’d be using it by now. We need you, the world’s talent pool, to step up and make life a little better. Do it in prototype form by October 3rd and you’ll be eligible for one of twenty $1000 cash prizes and a chance at winning the Hackaday Prize. But even if you don’t build a single thing, one idea could be the spark that lets others change the world for the better. So let’s hear it!