For a while now, part of my email signature has been a quote from a Hackaday commenter insinuating that an article I wrote was created by a “Dumb AI”. You have my sincerest promise that I am a humble meatbag scribe just like the rest of you, indeed one currently nursing a sore shoulder due to a sporting injury, so I found the comment funny in a way its writer probably didn’t intend. Like many in tech, I maintain a skepticism about the future role of large-language-model generative AI, and have resisted the urge to drink the Kool-Aid you will see liberally flowing at the moment.

Hackaday Is Part Of The Machine

As you’ll no doubt be aware, these large language models work by gathering a vast corpus of text, and doing their computational tricks to generate their output by inferring from that data. They can thus create an artwork in the style of a painter who receives no reward for the image, or a book in the voice of an author who may be struggling to make ends meet. From the viewpoint of content creators and intellectual property owners, it’s theft on a grand scale, and you’ll find plenty of legal battles seeking to establish the boundaries of the field.

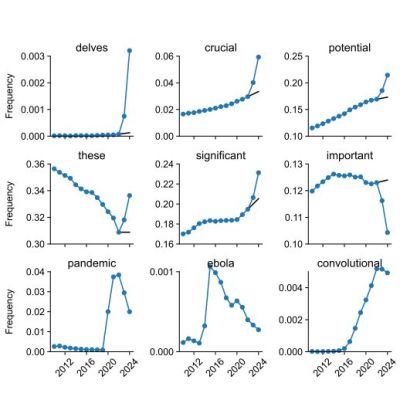

Anyway, once an LLM has enough text from a particular source, it can do a pretty good job of writing in that style. ChatGPT for example has doubtless crawled the whole of Hackaday, and since I’ve written thousands of articles in my nearly a decade here, it’s got a significant corpus of my work. Could it write in my style? As it turns out, yes it can, but not exactly. I set out to test its forging skill.