A little while ago Oasis was showcased on social media, billing itself as the world’s first playable “AI video game” that responds to complex user input in real time. Code is available on GitHub for a down-scaled local version if you’d like to take a look. There’s a bit more detail and background in the accompanying project write-up, which talks about both the potential and the numerous limitations.

We suspect the focus on supporting complex user input (such as mouse look and an item inventory) is what the creators feel distinguishes it meaningfully from AI-generated DOOM. The latter was a concept that demonstrated AI image generators could (kinda) function as real-time game engines.

Image generators are, in a sense, prediction machines. The idea is that by providing a trained model with a short history of what just happened plus the user’s input as context, it can generate a pretty usable prediction of what should happen next, and do it quickly enough to be interactive. Run that in a loop, and you get some pretty impressive clips to put on social media.
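
To make that loop concrete, here is a minimal Python sketch of the idea. It is not the Oasis code: `run_loop`, `toy_model`, and the `context_len` parameter are hypothetical names we made up for illustration, frames are plain lists of numbers rather than images, and the "model" is a trivial stand-in for a trained generator.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence

Frame = List[float]  # stand-in for an image; a real frame would be a pixel array
Action = int         # stand-in for keyboard and mouse input


def run_loop(
    predict_next: Callable[[List[Frame], Action], Frame],
    seed_frames: Sequence[Frame],
    actions: Sequence[Action],
    context_len: int = 4,
) -> List[Frame]:
    """Generate one frame per action, feeding each output back in as context."""
    # Only the most recent `context_len` frames are kept; older ones fall out.
    history: Deque[Frame] = deque(seed_frames, maxlen=context_len)
    shown: List[Frame] = []
    for action in actions:
        # Predict the next frame from the short history plus the user's input.
        frame = predict_next(list(history), action)
        shown.append(frame)
        # The prediction becomes part of the context for the next step.
        history.append(frame)
    return shown


def toy_model(history: List[Frame], action: Action) -> Frame:
    """Hypothetical placeholder for a trained generator: nudge the last frame."""
    return [value + action for value in history[-1]]


if __name__ == "__main__":
    for frame in run_loop(toy_model, seed_frames=[[0.0, 0.0]], actions=[1, 1, -1]):
        print(frame)
```

The detail worth noticing is that the model only ever sees its own recent output plus the latest input. Nothing else stores the state of the world, so anything that drops out of that short window is effectively forgotten.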

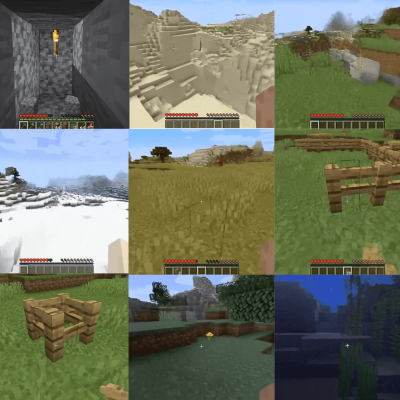

It is a neat idea, and we certainly applaud the creativity of bending an image generator to this kind of application, but we can’t help noticing the limitations. Sit and stare at something, or walk through dark or repetitive areas, and the system loses its grip and things rapidly spiral downward into something we can only describe as “dreamily broken”.

It may be more a demonstration of a concept than a properly functioning game, but it’s still a very clever way to leverage image generation technology. Although, if you’d prefer AI to keep the game itself untouched, take a look at neural networks trained to use the DOOM level creator tools.