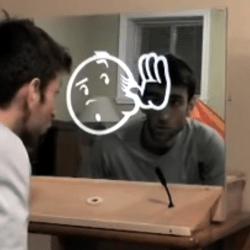

In today’s “futuristic tech you can get for $5”, [RealCorebb] shows us a gesture sensor of the sci-fi kind. He was building a desktop clock and wanted to add gesture control to it – without the holes in the case that a typical optical sensor needs. After some searching, he found Microchip’s MGC3130, a gesture-sensing chip that works with electric fields (“E-fields”). It is more precise than the usual optical sensors, almost as cheap, and comes with a lovely twist.

The coolest part about this chip is that it needs no case openings. The MGC3130 can work even behind obstructions like a 3D-printed case. You do need a PCB the size of a laptop touchpad, however, which is not the case for the optical sensors that are easy to find on the usual online marketplaces. Still, if you have the space for it, this is a perfect gesture-sensing solution. [RealCorebb] shows it off to us in the demo video.

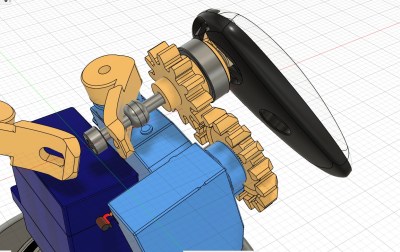

The PCB design is available as Gerbers, a BOM, and a schematic PDF, so you can order a board from the files in the repo. You also need to use Microchip’s tools to program your preferred gestures into the chip. Still, the effort pays off, thanks to the chip’s reasonably low price and on-chip gesture processing. [RealCorebb] provides all the explanations you could need, has Arduino examples for us, links all the software, and even provides some Python scripts! Touch-sensitive technology has been gaining steam in hacker circles – for instance, check out this open-source 3D-printed trackpad.
