Another week, another exploit against an air-gapped computer. And this time, the attack is particularly clever and pernicious: turning a GPU into a radio transmitter.

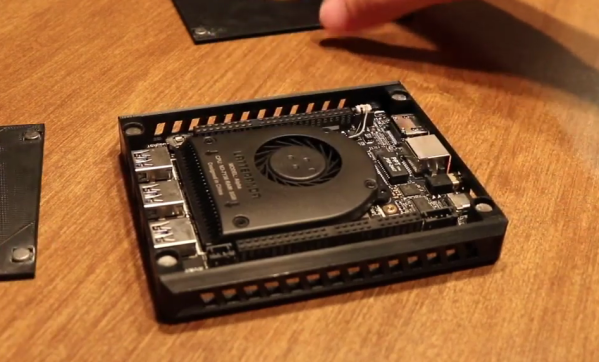

The first part of [Mikhail Davidov] and [Baron Oldenburg]’s article is a review of some of the basics of exploring the RF emissions of computers using software-defined radio (SDR) dongles. Most readers can safely skip ahead a bit to section 9, which gets into the process they used to sniff for potentially compromising RF leaks from an air-gapped test computer. After finding a few weak signals in the gigahertz range and dismissing them as attack vectors due to their limited penetration potential, they settled in on the GPU card, a Radeon Pro WX3100, and specifically on the power management features of its ATI chipset.

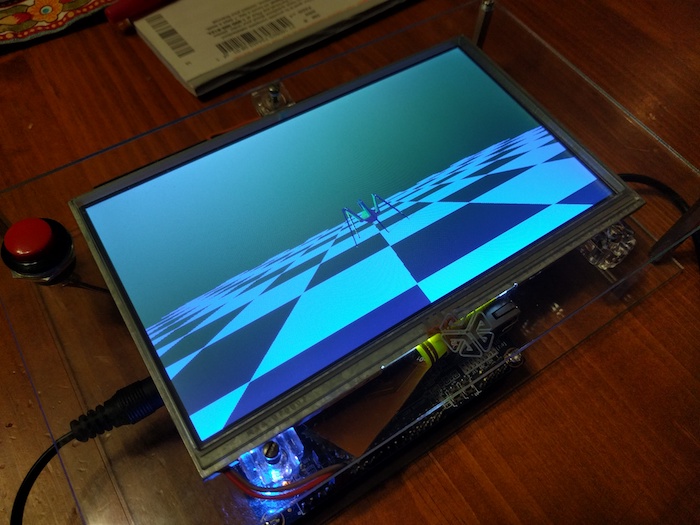

With a GPU benchmarking program running, they switched the graphics card shader clock between its two lowest power settings, which produced a strong signal on the SDR waterfall at 428 MHz. They were able to receive this signal up to 50 feet (15 meters) away, perhaps to the annoyance of nearby hams as this is plunk in the middle of the 70-cm band. This is theoretically enough to exfiltrate data, but at a painfully low bitrate. So they improved the exploit by forcing the CPU driver to vary the shader clock frequency in one megahertz steps, allowing them to implement higher throughput encoding schemes. You can hear the change in signal caused by different graphics being displayed in the video below; one doesn’t need much imagination to see how malware could leverage this to exfiltrate pretty much anything on the computer.

It’s a fascinating hack, and hats off to [Davidov] and [Oldenburg] for revealing this weakness. We’ll have to throw this on the pile with all the other side-channel attacks [Samy Kamkar] covered in his 2019 Supercon talk.

Continue reading “GPU Turned Into Radio Transmitter To Defeat Air-Gapped PC”