When it was first announced, many people were skeptical of the Nintendo DS. Rather than pushing raw power, the unique dual-screen handheld was designed to explore new styles of play. Compared to more traditional handhelds like the Game Boy Advance (GBA) or even Sony’s PlayStation Portable (PSP), the DS seemed like a huge gamble for the Japanese gaming giant.

But it paid off. The Nintendo DS ended up being one of the most successful gaming platforms of all time, and as [Modern Vintage Gamer] explains in a recent video, at least part of that was due to its surprising graphical prowess. While it was technically inferior to the PSP in almost every way, Nintendo’s decades of experience in pushing the limits of 2D graphics allowed them to squeeze more out of the hardware than many would have thought possible.

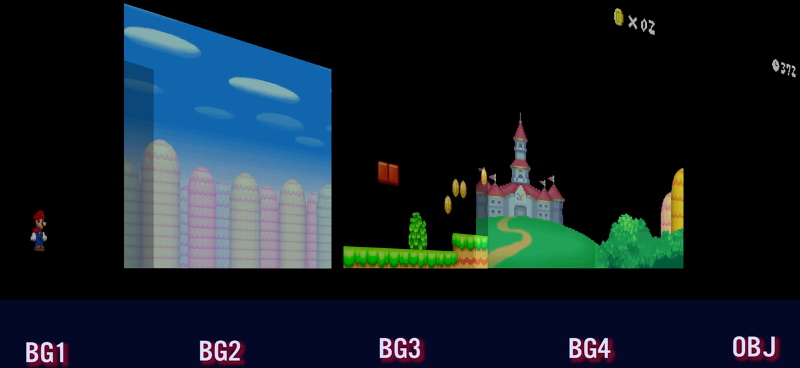

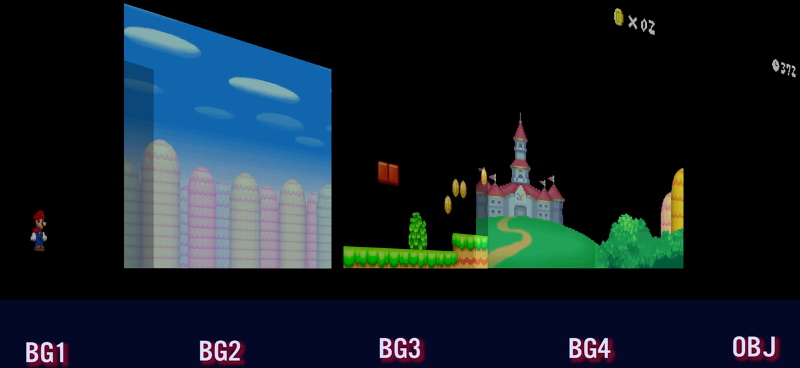

On one level, the Nintendo DS could be seen as an upgraded GBA. Developers who were already used to the 2D capabilities of that system would feel right at home when they made the switch to the DS. As with previous 2D consoles, the DS had several screen modes complete with hardware-accelerated support for moving, scaling, rotating, and reflecting up to four background layers. This made it easy and computationally efficient to pull off pseudo-3D effects such as having multiple backdrop images scroll by at different speeds to convey a sense of depth.
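The multi-speed backdrop trick is easy to sketch in a few lines. What follows is only an illustration of the idea in Python, not DS code: the `Layer` class, the `layer_offsets` helper, and the speed factors are all made up for the example, and on real hardware the resulting offsets would simply be written to each background's scroll registers.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    speed: float  # hypothetical factor: fraction of camera movement this layer follows
    width: int    # width in pixels of the repeating backdrop image


def layer_offsets(camera_x, layers):
    """Return the horizontal scroll offset of each layer for a camera position."""
    # Distant layers use a small speed factor, so they appear to move slowly.
    # The modulo wraps the offset so the backdrop repeats seamlessly.
    return {layer.name: int(camera_x * layer.speed) % layer.width for layer in layers}


# Three illustrative layers, farthest first. The values are assumptions.
LAYERS = [
    Layer("sky", 0.25, 256),
    Layer("hills", 0.5, 256),
    Layer("ground", 1.0, 256),
]

if __name__ == "__main__":
    for camera_x in (0, 100, 200):
        print(camera_x, layer_offsets(camera_x, LAYERS))
```

Because each layer only needs a single offset per frame, the CPU cost is trivial; the 2D hardware does the actual drawing.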

On top of its GBA-inherited tile and sprite 2D engine, the DS also featured a rudimentary GPU responsible for handling 3D geometry and rendering. Hardware-accelerated 3D could only be used on one screen at a time, which meant most games would keep the close-up view of the action on one display and use the second panel to show 2D imagery such as an overhead map. But developers did have the option of flipping between the displays on each frame to render 3D on both panels at a reduced frame rate. The hardware could also handle shadows and included integrated support for cel shading, which was a particularly popular graphical effect at the time.
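To see why flipping between displays costs frame rate, consider a toy model of the scheduling. This is a rough sketch rather than real DS code: the 60 Hz refresh figure is a nominal assumption, and `screen_for_frame` and `simulate` are hypothetical names used only for illustration.

```python
REFRESH_HZ = 60  # assumed nominal refresh rate, used only for this illustration


def screen_for_frame(frame):
    """Pick which screen receives the 3D output on a given frame."""
    # Even frames go to the top screen, odd frames to the bottom one.
    return "top" if frame % 2 == 0 else "bottom"


def simulate(frames):
    """Count how many fresh 3D frames each screen gets over a number of refreshes."""
    updates = {"top": 0, "bottom": 0}
    for frame in range(frames):
        updates[screen_for_frame(frame)] += 1
    return updates


if __name__ == "__main__":
    # Over one second of refreshes, each screen gets half of the 3D frames.
    print(simulate(REFRESH_HZ))
```

Since the single 3D pipeline is shared, each panel gets a new 3D image only every other refresh, so the effective 3D frame rate per screen is half of what a single-screen game would see.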

By combining the 2D and 3D hardware capabilities of the Nintendo DS onto a single screen, developers could produce complex graphical effects. [Modern Vintage Gamer] uses the example of New Super Mario Bros., which places a detailed 3D model of Mario over several layers of moving 2D bitmaps. Ultimately the 3D capabilities of the DS were hindered by the limited resolution of its 256 x 192 LCD panels, but considering most people were still using flip phones when the DS came out, it was impressive for the time.
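Conceptually, mixing the 3D output with 2D backgrounds comes down to priority-based layering, where the frontmost non-transparent pixel wins. The snippet below is a simplified sketch of that idea for a single pixel. The `composite_pixel` function, the priority numbers, and the color names are all invented for the example and do not reflect the actual hardware registers.

```python
TRANSPARENT = None  # marker for "nothing drawn here" in this sketch


def composite_pixel(layers, backdrop="backdrop"):
    """Pick the visible color for one pixel from (priority, color) pairs.

    A lower priority number means the layer sits closer to the viewer.
    """
    for _, color in sorted(layers, key=lambda layer: layer[0]):
        if color is not TRANSPARENT:
            return color
    # Every layer was transparent at this pixel, so the backdrop shows through.
    return backdrop


if __name__ == "__main__":
    # The 3D layer (priority 0) covers this pixel, so the 3D model is visible.
    print(composite_pixel([(2, "sky"), (0, "mario"), (1, "hills")]))
    # The 3D layer is transparent here, so the nearest 2D background shows.
    print(composite_pixel([(2, "sky"), (0, TRANSPARENT), (1, "hills")]))
```

Treating the rendered 3D scene as just another layer is what lets a 3D character sit naturally in front of scrolling 2D scenery.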

Compared to the Game Boy Advance, or even the original “brick” Game Boy, it doesn’t seem like hackers have had much luck coming up with ways to exploit the capabilities of the Nintendo DS. But perhaps with more detailed retrospectives like this, the community will be inspired to take another look at this unique entry in gaming history.
