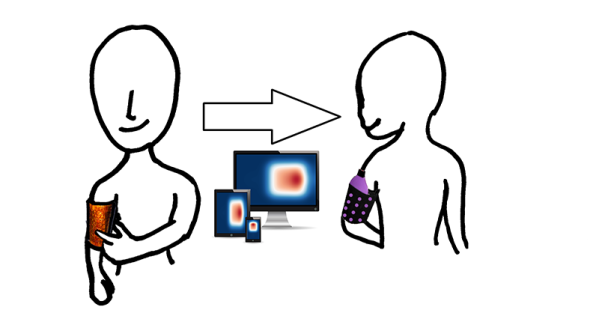

Some of us might never know the touch of another human, but this project in the Hackaday Prize might just be the solution. It’s TouchYou, [Leonardo]’s idea for a wearable device that allows anyone to send tactile and multi-sensorial stimulation across the Internet. It’s touching someone over the Internet, and yes, this technology is right here today.

Inside the TouchYou is an Arduino Pro Mini connected to a Bluetooth module. This Arduino reads force sensors and touch sensors and also drives a small vibration motor. With that Bluetooth module, the TouchYou becomes an Internet of Things thing, capable of communicating with other TouchYous across the world. It’s an interconnected, worldwide touching experience, and one of the best examples of Human-Computer Interaction we’ve ever seen.
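To make that signal path concrete, here is a rough host-side sketch in Python of how a force reading on one device could turn into vibration on another. None of this comes from [Leonardo]'s firmware: the deadband, the 3-byte frame layout, and the names `force_to_pwm`, `encode_touch`, and `decode_touch` are all assumptions made up for illustration. The only hardware facts it leans on are the 10-bit ADC and 8-bit PWM ranges typical of an ATmega328-based Arduino Pro Mini.

```python
ADC_MAX = 1023  # 10-bit ADC range on an ATmega328-based Arduino
PWM_MAX = 255   # 8-bit duty-cycle range accepted by analogWrite()
HEADER = 0xA5   # hypothetical start-of-frame marker (an assumption)


def force_to_pwm(adc_reading, deadband=50):
    """Map a raw force-sensor reading to a vibration-motor duty cycle.

    Readings at or below the (assumed) deadband are treated as no touch.
    """
    reading = max(0, min(ADC_MAX, adc_reading))  # clamp to the ADC range
    if reading <= deadband:
        return 0
    # Scale the remaining range linearly onto 1..PWM_MAX.
    return (reading - deadband) * PWM_MAX // (ADC_MAX - deadband)


def encode_touch(pwm):
    """Pack a duty cycle into a hypothetical frame: header, value, checksum."""
    return bytes([HEADER, pwm, (HEADER + pwm) & 0xFF])


def decode_touch(frame):
    """Return the duty cycle from a frame, or None if the frame is invalid."""
    if len(frame) != 3 or frame[0] != HEADER:
        return None
    if (frame[0] + frame[1]) & 0xFF != frame[2]:
        return None
    return frame[1]


if __name__ == "__main__":
    # Sender side: a firm press is read, scaled, and framed for the radio link.
    frame = encode_touch(force_to_pwm(800))
    # Receiver side: the frame is checked and the motor duty cycle recovered.
    print(decode_touch(frame))
```

On real hardware the sending side would take its reading from `analogRead()` and the receiving side would pass the decoded value to `analogWrite()`; the checksum is only there so a corrupted byte on the serial link does not buzz the motor.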

A project like this demands large touch sensors, and if you’re not aware, these are slightly expensive. That’s okay, because [Leonardo] came up with a way to create large flexible touch sensors cheaply. The process begins much like making a PCB at home: print both sides of a design on a laser printer, then wrap the printout around a copper foil and Kapton laminate. From there, it’s just a little bit of etching in ferric chloride and carefully soldering the flex PCB connections to fine wires.

From a great idea to some rather impressive work in building DIY flex PCBs, this is one of the better projects in the Hackaday Prize, and it was named a finalist in the Human-Computer Interface Challenge.

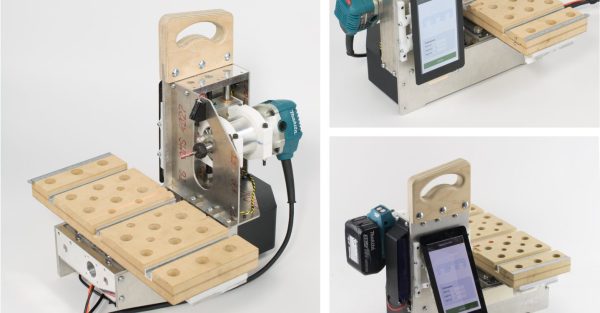

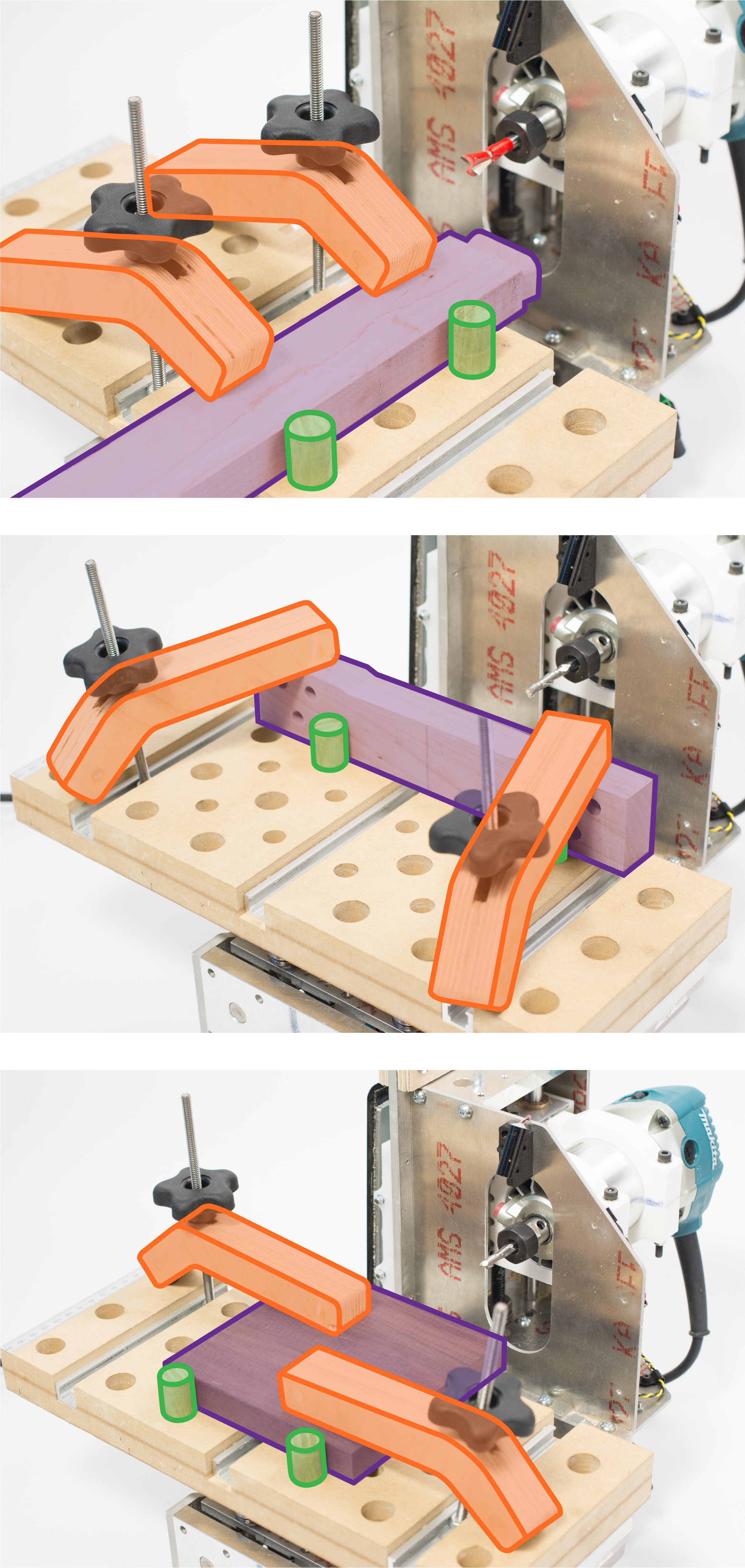
