How many times do you have to forget your keys before you start hacking on the problem? For [Binh], the answer was 5 in the last month, and his hack was to make a gesture-based door unlocker. Which leads to the amusing image of [Binh] in a hallway throwing gang signs until he is let in.

The system itself is fairly simple in its execution: the existing deadbolt is actuated by a NEMA 17 stepper turning a 3D printed bevel gear. It runs 50 steps to lock or unlock, apparently, then the motor turns off, so it’s power-efficient and won’t burn down [Binh]’s room.
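For a rough sense of what 50 steps means at the deadbolt, here is a back-of-the-envelope sketch. It assumes the common 1.8° NEMA 17 (200 full steps per revolution) and full-step drive; neither figure is stated in the write-up, and the bevel gear ratio is unknown, so this only gives the motor shaft angle.

```python
def shaft_rotation_degrees(steps, steps_per_rev=200, microsteps=1):
    """Angle turned by the motor shaft for a given number of step pulses.

    steps_per_rev=200 and microsteps=1 are assumptions (typical 1.8 degree
    NEMA 17, full stepping), not values taken from [Binh]'s build.
    """
    return 360.0 * steps / (steps_per_rev * microsteps)


print(shaft_rotation_degrees(50))                 # 90.0, a quarter turn
print(shaft_rotation_degrees(50, microsteps=16))  # 5.625 if the driver microsteps at 1/16
```

Under those assumptions, 50 steps is the quarter turn a typical deadbolt thumb-turn needs, which would fit the description.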

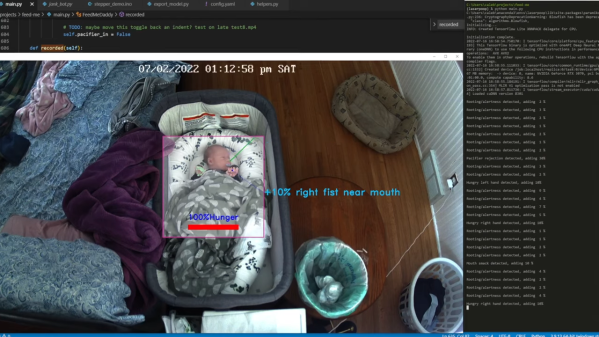

The software is equally simple; MediaPipe is an ML library that already does hand and finger detection and can be used from Python. Apparently gesture recognition is fairly unreliable, so for now [Binh] just has it counting the number of fingers flashed. In this case, it’s running on a Raspberry Pi 5 with a webcam for image input. The Pi connects via USB serial to an ESP32 that is connected to the stepper driver. [Binh] had another project ready to be taken apart that had the ESP32/stepper combo ready to go, so this was the quickest option. As was mounting everything with double-sided tape, but that also plays into a design constraint: it’s not [Binh]’s door.
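Finger counting on top of hand landmarks can be sketched in a few lines. What follows is a minimal illustration, not [Binh]’s code: it assumes the 21-point hand landmark layout MediaPipe uses (tips at indices 4, 8, 12, 16, 20), an upright hand facing the camera, and a simple "tip above the middle joint" heuristic. The `serial_command` helper and its `b"U\n"` message are hypothetical stand-ins for whatever the Pi actually sends to the ESP32.

```python
# Landmark indices in the 21-point hand model (0 is the wrist).
FINGER_TIPS = (8, 12, 16, 20)   # index, middle, ring, pinky fingertips
FINGER_PIPS = (6, 10, 14, 18)   # the middle joint of each of those fingers
THUMB_TIP, THUMB_IP, PINKY_MCP = 4, 3, 17


def count_fingers(landmarks):
    """Count extended fingers from 21 (x, y) points.

    Coordinates are normalized image coordinates, with y growing downward,
    so an extended finger on an upright hand has its tip above its PIP joint.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")

    count = sum(
        1
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
        if landmarks[tip][1] < landmarks[pip][1]
    )

    # The thumb extends sideways: call it extended when its tip is farther
    # from the pinky knuckle than its IP joint is. Works for either hand.
    ref_x = landmarks[PINKY_MCP][0]
    if abs(landmarks[THUMB_TIP][0] - ref_x) > abs(landmarks[THUMB_IP][0] - ref_x):
        count += 1
    return count


def serial_command(count, unlock_count=3):
    """Hypothetical protocol: bytes to send to the ESP32, or None to stay quiet."""
    return b"U\n" if count == unlock_count else None
```

In a real loop the landmark list would come from the hand detector for each webcam frame, and any non-`None` command would be written to the USB serial port. A heuristic this simple breaks down for tilted or sideways hands.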

[Binh] is staying in a Hacker Hotel, and as you might imagine, there’s been more penetration testing on this than you might get elsewhere. It turns out it’s relatively straightforward to brute force (as you might expect, given it is only counting fingers), so [Binh] is planning on implementing some kind of 2FA. Perhaps a secret knock? Of course he could use his phone, but what’s the fun in that?
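To put a number on "relatively straightforward", here is a quick sketch of the keyspace. It assumes one hand showing 0 to 5 fingers, so six possible symbols, and a guesser who never repeats an attempt. Whether the lock accepts a single count or a sequence of counts is not stated, so both cases are shown.

```python
def keyspace(symbols, length):
    """Number of distinct codes made of `length` gestures drawn from `symbols` options."""
    return symbols ** length


def expected_attempts(symbols, length):
    """Average tries to hit a uniformly random code when no guess is repeated."""
    return (keyspace(symbols, length) + 1) / 2


# Assumption: one hand, counts 0 through 5, so 6 symbols.
print(keyspace(6, 1), expected_attempts(6, 1))   # 6 3.5
print(keyspace(6, 4), expected_attempts(6, 4))   # 1296 648.5
```

Even a four-gesture sequence is only about as strong as a short PIN, which is a fair argument for the second factor.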

Whatever the second factor is, hopefully it’s something that cannot be forgotten in the room. If this project tickles your fancy, it’s open source on GitHub, and you can see it in action, along with the build process, in the video embedded below.

After offering thanks to [Binh] for the tip, the remaining words of this article will be spent requesting that you, the brilliant and learned Hackaday audience, provide us with additional tips.
