The lights dim and the music swells as an elite competitor in a silk robe passes through a cheering crowd to take the ring. It’s a blueprint familiar to boxing, only this pugilist won’t be throwing punches.

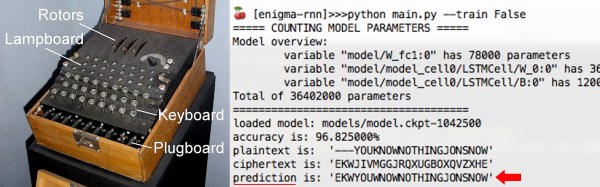

OpenAI created an AI bot that has beaten the best players in the world at this year’s International championship. The International is an esports competition held annually for Dota 2, one of the most competitive multiplayer online battle arena (MOBA) games.

Each match of the International consists of two 5-player teams competing against each other for 35-45 minutes. In layman’s terms, it is an online version of capture the flag. While the premise may sound simple, it is actually one of the most complicated and detailed competitive games out there. The top teams are required to practice together daily, but this level of play is nothing new to them. To reach a professional level, individual players would practice obscenely late, go to sleep, and then repeat the process. For years. So how long did the AI bot have to prepare for this competition compared to these seasoned pros? A couple of months.

Continue reading “Artificial Intelligence At The Top Of A Professional Sport”