Shadowed tool storage, where a tool outline shows at a glance what’s missing from storage, is a really smart way to keep your shop neat. It’s also super important in places where a tool left behind could be a tragedy. Think where’s-that-10-mm-socket-while-working-on-a-jet-engine important. (It’s always the 10-mm socket.)

But just because shadow boards are smart doesn’t mean they’re easy to make. That’s why [Scott Prince] came up with this semi-automated method for making toolbox shadow boards. Tracing around each tool on a suitable material and cutting out the shapes seems straightforward; the trick is organizing the outlines to suit both the space available and the particular collection of tools.

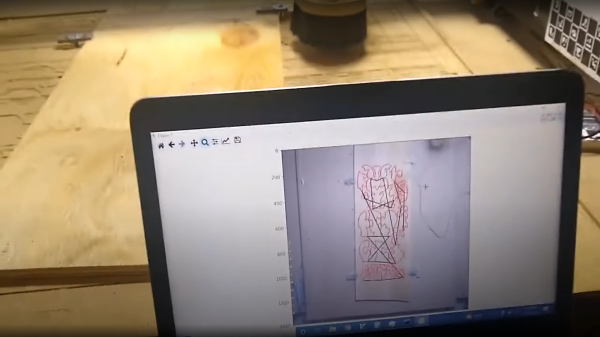

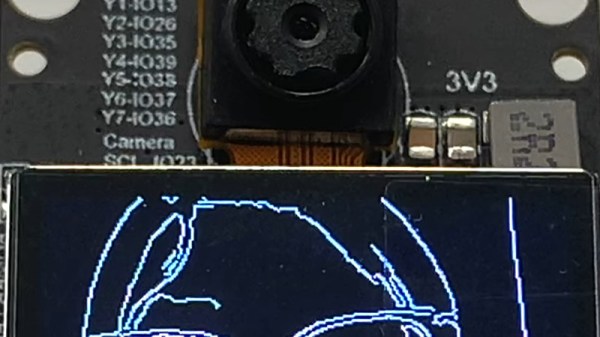

[Scott]’s method starts with capturing an image of each individual tool. He used a PiCam and a lightbox housed, strangely enough, in a storage bench; we’d love to hear the full story behind that, but pretty much any digital camera would do the job. After compensating for distortion with OpenCV, cropping the images, and turning each image into a vector outline of the tool, [Scott] was left with the task of sorting the tools into logical groups and laying them out sensibly. After tweaking the tool outlines and adding finger cutouts for easy pickup, [Scott] put his CNC router to work. He chose a high-density polyethylene product made by his employer, which looks fantastic, but MDF would work fine too.
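The write-up summarized here doesn’t spell out how the layout step works, so purely as an illustration, here’s a minimal sketch of one common approach, “shelf” packing, applied to a drawer. It assumes each traced outline has already been reduced to a rectangular bounding box in millimetres and tagged with a group name. The `Tool`, `Placement`, and `shelf_pack` names, the 5 mm gap, and the drawer dimensions are all hypothetical; this is not [Scott]’s code.

```python
from dataclasses import dataclass


# Hypothetical data model: each traced tool outline reduced to a bounding box.
@dataclass
class Tool:
    name: str
    width: float   # bounding-box width in mm (assumed units)
    height: float  # bounding-box height in mm
    group: str     # logical group, e.g. "sockets" or "wrenches"


@dataclass
class Placement:
    tool: Tool
    x: float  # left edge of the bounding box, mm from the drawer's left side
    y: float  # top edge of the bounding box, mm from the drawer's back edge


def shelf_pack(tools, drawer_width, drawer_depth, gap=5.0):
    """Place tools left to right in rows ("shelves"), starting a new row
    when the current one is full. Tools are sorted by group, then tallest
    first, so each group stays together and rows waste less space.
    Returns (placements, leftovers); leftovers are tools that didn't fit."""
    ordered = sorted(tools, key=lambda t: (t.group, -t.height))
    placements, leftovers = [], []
    x, y = gap, gap
    row_height = 0.0  # tallest tool placed in the current row so far
    for tool in ordered:
        # Too wide for the drawer no matter which row it goes in.
        if tool.width + 2 * gap > drawer_width:
            leftovers.append(tool)
            continue
        # Current row is full: start a new shelf below its tallest tool.
        if x + tool.width + gap > drawer_width:
            x = gap
            y += row_height + gap
            row_height = 0.0
        # Not enough depth left in the drawer for this tool.
        if y + tool.height + gap > drawer_depth:
            leftovers.append(tool)
            continue
        placements.append(Placement(tool, x, y))
        x += tool.width + gap
        row_height = max(row_height, tool.height)
    return placements, leftovers


if __name__ == "__main__":
    # Made-up tool sizes for a 200 x 100 mm drawer.
    tools = [
        Tool("socket-8", 30, 30, "sockets"),
        Tool("socket-10", 30, 30, "sockets"),
        Tool("socket-13", 30, 30, "sockets"),
        Tool("wrench", 150, 20, "wrenches"),
        Tool("hammer", 300, 40, "hammers"),
    ]
    placed, left_over = shelf_pack(tools, drawer_width=200, drawer_depth=100)
    for p in placed:
        print(f"{p.tool.name}: x={p.x} y={p.y}")
    print("did not fit:", [t.name for t in left_over])
```

Sorting by group first keeps related tools next to each other, and sorting by height within a group cuts down the dead space beneath short tools in a tall row. Packing the real, irregular outlines rather than their bounding boxes is a much harder nesting problem, which is part of why a human eye still earns its keep in this step.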

We have to admit to a fair degree of toolbox envy now that we’ve seen what shadow boards can do. We’re a bit torn, though — [Zach Friedman]’s Gridfinity storage system has a lot going for it, too.