Quadruped robots are everywhere now that companies like Boston Dynamics are shipping smaller models in big numbers. [Dave's Armoury] had one such robot and wanted to give it a Pokemon Halloween costume, so the robot dog got a Jolteon costume that looks truly fantastic.

The robot in question is a Unitree Go1, which [Dave] had on loan from InDro Robotics, so the costume couldn't damage or significantly alter the robot in any way. Jolteon was chosen from the original 150 Pokemon because its proportions suited the robot and its electric theme fit [Dave's] YouTube channel.

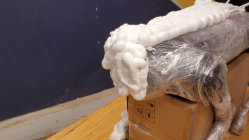

A 3D model of Jolteon was sourced online and modified to create a printable head for the robot. Two 3D printers and 200 hours of printing time later, [Dave] had all the parts he needed. Plenty of CA glue joined the parts together, and some finishing work was needed to keep seams and edges from spoiling the look. Wood filler and spray paint then got the costume looking just like the real Pokemon.