George [Geohot] Hotz has thrown in the towel on his “comma one” self-driving car project. According to [Geohot]’s Twitter stream, the reason is a letter from the US National Highway Traffic Safety Administration (NHTSA), which sent him what basically amounts to a warning to not release self-driving software that might endanger people’s lives.

This comes a week after a post on comma.ai’s blog changed focus from a “self-driving car” to an “advanced driver assistance system”, presumably to get around legal requirements. Apparently, that wasn’t good enough for the NHTSA.

When Robot Cars Kill, Who Gets Sued?

On one hand, we’re sorry to see the system go out like that. The idea of a quick-and-dirty, affordable, crowdsourced driving aid speaks to our hacker heart. But on the other, especially in light of the recent Tesla crash, we’re probably a little bit glad to not have these things on the road. They were not (yet) rigorously tested, and were originally oversold in their capabilities, as last week’s change of focus demonstrated.

Comma.ai’s downgrade to driver-assistance system really begs the Tesla question. Their autopilot is also just an “assistance” system, and the driver is supposed to retain full control of the car at all times. But we all know that it’s good enough that people, famously, let the car take over. And in one case, this has led to death.

Right now, Tesla is hiding behind the same fiction that the NHTSA didn’t buy with comma.ai: that an autopilot add-on won’t lull the driver into overconfidence. The deadly Tesla accident proved how flimsy that fiction is. And so far, there’s only been one person injured by Tesla’s tech, and his family hasn’t sued. But we wouldn’t be willing to place bets against a jury concluding that Tesla’s marketing of the “autopilot” contributed to the accident. (We’re hackers, not lawyers.)

Should We Take a Step Back? Or a Leap Forward?

Stepping away from the law, is making people inattentive at the wheel, with a legal wink-and-a-nod that you’re not doing so, morally acceptable? When many states and countries ban talking on a cell phone in the car, how is it legal to market a device that facilitates taking your hands off the steering wheel entirely? Or is this not all that much different from cruise control?

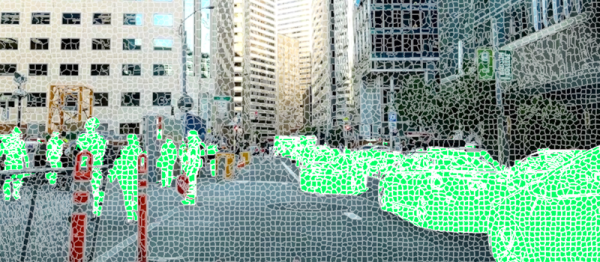

What Tesla is doing, and [Geohot] was proposing, puts a beta version of a driverless car on the road. On one hand, that’s absolutely what’s needed to push the technology forward. If you’re trying to train a neural network to drive, more data, under all sorts of conditions, is exactly what you need. Tesla uses this data to assess and improve its system all the time. Shutting them down would certainly set back the progress toward actually driverless cars. But is it fair to use the general public as opt-in guinea pigs for their testing? And how fair is it for the NHTSA to discourage other companies from entering the field?

We’re at a very awkward adolescence of driverless car technology. And like our own adolescence, when we’re through it, it’s going to appear a miracle that we survived some of the stunts we pulled. But the metaphor breaks down with driverless cars: we can also simply wait until the systems are proven safe enough to take full control before we allow them on the streets. The current halfway state, where an autopilot system may lull the driver into a false sense of security, strikes us as particularly dangerous.

So how do we go forward? Do we let every small startup that wants to build a driverless car participate, in the hope that it gets us through the adolescent phase faster? Or do we clamp down on innovation, only letting the technology on the road once it’s proven to be safe? We’d love to hear your arguments in the comment section.