Good news this week from the Sun’s far side as the Parker Solar Probe checked in after its speedrun through our star’s corona. Parker became the fastest human-made object ever (aside from the manhole cover, of course) as it fell into the Sun’s gravity well on Christmas Eve to pass within 6.1 million kilometers of the surface, in an attempt to study the extremely dynamic environment of the solar atmosphere. Similar to how manned spacecraft returning to Earth are blacked out from radio communications, the plasma soup Parker flew through meant everything it would do during the pass had to be autonomous, and we wouldn’t know how it went until the probe cleared the high-energy zone. The probe pinged Earth with a quick “I’m OK” message on December 26, and checked in with the Deep Space Network as scheduled on January 1, dumping telemetry data that indicated the spacecraft not only survived its brush with the corona but that every instrument performed as expected during the pass. The scientific data from the instruments won’t be downloaded until the probe is in a little better position, and then Parker will get to do the whole thing again twice more in 2025.

Continue reading “Hackaday Links: January 5, 2025”

siri: 25 Articles

Smart Assistants Need To Get Smarter

Science fiction has regularly portrayed smart computer assistants in a fanciful way. HAL from 2001: A Space Odyssey and J.A.R.V.I.S. from the contemporary Iron Man films are both great examples. They’re erudite, wise, and capable of doing just about any reasonable task that is asked of them, short of opening the pod bay doors.

Cut back to reality, and you’ll only be disappointed at how useless most voice assistants are. It’s been twelve long years since Siri burst onto the scene, with Alexa and Google Assistant following years later. Despite years on the market, their capabilities remain limited and uninspiring. It’s time for voice assistants to level up.

Speech Recognition On An Arduino Nano?

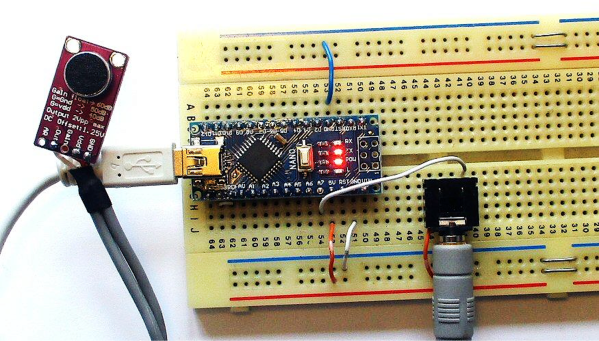

Like most of us, [Peter] had a bit of extra time on his hands during quarantine and decided to take a look back at speech recognition technology in the 1970s. Quickly, he started thinking to himself, “Hmm…I wonder if I could do this with an Arduino Nano?” We’ve all probably had similar thoughts, but [Peter] really put his theory to the test.

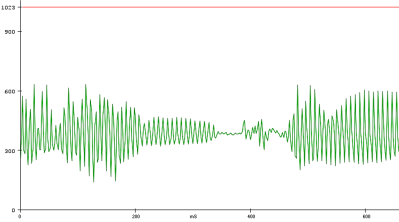

The hardware itself is pretty straightforward. There is an Arduino Nano to run the speech recognition algorithm and a MAX9814 microphone amplifier to capture the voice commands. However, the beauty of [Peter’s] approach lies in his software implementation. [Peter] has a bit of an interplay between a custom PC program he wrote and the Arduino Nano. The learning aspect of his algorithm is done on a PC, but the recognition itself runs in real time on the Arduino Nano, a typical approach for really any machine learning algorithm deployed on a microcontroller. To capture sample audio commands, or utterances, [Peter] first had to optimize the Nano’s ADC so he could get sufficient sample rates for speech processing. Doing a bit of low-level programming, he achieved a sample rate of 9 ksps, which captures frequencies up to 4.5 kHz and is plenty fast for speech.

To analyze the utterances, he first divided each sample utterance into 50 ms segments. Think of dividing a single spoken word into its different syllables, like analyzing the “se-” in “seven” separately from the “-ven.” 50 ms might be too long or too short to capture each syllable cleanly, but hopefully, that gives you a good mental picture of what [Peter’s] program is doing. He then calculated the energy of 5 different frequency bands for every segment of every utterance. Normally that’s done using a Fourier transform, but the Nano doesn’t have enough processing power to compute the Fourier transform in real time, so [Peter] tried a different approach. Instead, he implemented 5 sets of digital bandpass filters, allowing him to more easily compute the energy of the signal in each frequency band.
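To make the segment-and-filter idea concrete, here is a rough Python sketch rather than [Peter’s] actual code. It assumes a textbook biquad bandpass filter (the Audio EQ Cookbook form) for each band. The band centres, the Q value, and the function names are all made up for illustration, since the article doesn’t give the real ones. Only the 9 ksps sample rate, the 50 ms segments, and the count of five bands come from the write-up.

```python
import math

SAMPLE_RATE = 9000          # samples per second, as quoted in the article
SEGMENT_MS = 50             # segment length, as quoted in the article
# Hypothetical band centres in Hz; the article does not list the real ones.
BAND_CENTRES = [200, 400, 800, 1600, 3200]


def bandpass_coeffs(centre_hz, q=1.4, fs=SAMPLE_RATE):
    """Biquad band-pass coefficients (Audio EQ Cookbook, 0 dB peak gain)."""
    w0 = 2 * math.pi * centre_hz / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)              # feed-forward terms
    a = (-2 * math.cos(w0) / a0, (1 - alpha) / a0)  # feedback terms
    return b, a


def band_energies(samples):
    """Return one row per 50 ms segment, each holding one energy per band."""
    seg_len = SAMPLE_RATE * SEGMENT_MS // 1000      # 450 samples per segment
    n_segments = len(samples) // seg_len
    rows = [[0.0] * len(BAND_CENTRES) for _ in range(n_segments)]
    for band, centre in enumerate(BAND_CENTRES):
        (b0, b1, b2), (a1, a2) = bandpass_coeffs(centre)
        x1 = x2 = y1 = y2 = 0.0                     # filter state
        for i, x in enumerate(samples[: n_segments * seg_len]):
            y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
            x2, x1, y2, y1 = x1, x, y1, y
            rows[i // seg_len][band] += y * y       # energy = sum of squares
    return rows
```

Feeding it 100 ms of an 800 Hz sine wave gives two rows of five energies, with the third band dominating. The appeal of this approach on a small microcontroller is that each filter needs only a handful of multiplies and adds per sample, with no buffering of a whole segment.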

The energy of each frequency band for every segment is then sent to a PC where a custom-written program creates “templates” based on the sample utterances he generates. The crux of his algorithm is measuring how closely the energy of each frequency band for each utterance (and for each segment) matches the template. The PC program produces a .h file that can be compiled directly into the Nano sketch. He uses the example of being able to recognize the numbers 0-9, but you could change those commands to “start” or “stop,” for example, if you would like to.
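The template comparison might look something like the following Python sketch. The article doesn’t say which distance measure [Peter] uses, so the sum of absolute differences here is an assumption, and the function names and the tiny feature tables are invented purely for illustration.

```python
def distance(features, template):
    """Sum of absolute differences over every segment and band (assumed metric)."""
    return sum(
        abs(f - t)
        for f_row, t_row in zip(features, template)
        for f, t in zip(f_row, t_row)
    )


def recognise(features, templates):
    """Return the label of the template closest to the utterance."""
    return min(templates, key=lambda label: distance(features, templates[label]))


# Made-up numbers: 2 segments x 3 bands per word, just to show the shape.
templates = {
    "start": [[0.9, 0.2, 0.1], [0.3, 0.7, 0.2]],
    "stop":  [[0.1, 0.3, 0.8], [0.6, 0.1, 0.4]],
}
utterance = [[0.8, 0.3, 0.1], [0.2, 0.6, 0.3]]
print(recognise(utterance, templates))  # prints "start"
```

A real recognizer would also have to normalize for loudness and cope with utterances that start early or late, which this sketch ignores.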

[Peter] admits that you can’t implement the type of speech recognition on an Arduino Nano that we’ve come to expect from those covert listening devices, but he mentions small, hands-free devices like a head-mounted multimeter could benefit from a single word or single phrase voice command. And maybe it could put your mind at ease knowing everything you say isn’t immediately getting beamed into the cloud and given to our AI overlords. Or maybe we’re all starting to get used to this. Whatever your position is on the current state of AI, hopefully, you’ve gained some inspiration for your next project.

Stay Smarter Than Your Smart Speaker

Smart speakers have always posed a risk to privacy and security — that’s just the price we pay for getting instant answers to life’s urgent and not-so-urgent questions the moment they arise. But it seems that many owners of the 76 million or so smart speakers on the active install list have yet to wake up to the reality that this particular trick of technology requires a microphone that’s always listening. Always. Listening.

With so much of the world’s workforce now working from home due to the global SARS-CoV-2 pandemic, smart speakers have suddenly become a big risk for business, too — especially those where confidential conversations are as common and crucial as coffee.

Imagine the legions of lawyers out there, suddenly thrust from behind their solid-wood doors and forced to set up ramshackle sub rosa sanctuaries in their homes to discuss private matters with their equally out-of-sorts clients. How many of them don’t realize that their smart speaker bristles with invisible thorns, and is even vulnerable to threats outside the house? Given the recent study showing that smart speakers can and do activate accidentally up to 19 times per day, the prevalence of the consumer-constructed surveillance state looms like a huge crisis of confidentiality.

So what are the best practices of confidential work in earshot of these audio-triggered gadgets?

Smart Speakers “Accidentally” Listen Up To 19 Times A Day

In the spring of 2018, a couple in Portland, OR reported to a local news station that their Amazon Echo had recorded a conversation without their knowledge, and then sent that recording to someone in their contacts list. As it turned out, the commands Alexa followed were issued by television dialogue. The whole thing took a sitcom-sized string of coincidences to happen, but it happened. Good thing the conversation was only about hardwood floors.

But of course these smart speakers are listening all the time, at least locally. How else are they going to know that someone uttered one of their wake words, or something close enough? It would sure help a lot if we could change the wake word to something like ‘rutabaga’ or ‘supercalifragilistic’, but they probably have ASICs that are made to listen for a few specific words. On the Echo for example, your only choices are “Alexa”, “Amazon”, “Echo”, or “Computer”.

So how often are smart speakers listening when they shouldn’t? A team of researchers at Boston’s Northeastern University are conducting an ongoing study to determine just how bad the problem really is. They’ve set up an experiment to generate unexpected activation triggers and study them inside and out.

Continue reading “Smart Speakers “Accidentally” Listen Up To 19 Times A Day”

Almond: Open Personal Assistant From Stanford

The current state of virtual personal assistants — Alexa, Cortana, Google, and Siri — leaves something to be desired. The speech recognition is mostly pretty good. However, customization options are very limited. Beyond that, many people are worried about the privacy of their data when using one of these assistants. Stanford Open Virtual Assistant Lab has rolled out Almond, which is open and is reported to have better privacy features.

Like most other virtual assistants, Almond has skills that determine what it can do. You can use Almond in a browser, on a Google phone, or as a command line application. It all lives on GitHub, so if you don’t like something you are free to fix it.

Continue reading “Almond: Open Personal Assistant From Stanford”

This Week In Security: KNOB, Old Scams Are New Again, 0-days, Backdoors, And More

Bluetooth is a great protocol. You can listen to music, transfer files, get on the internet, and more. A side effect of those many uses is that the specification is complicated and intended to cover many use cases. A team of researchers took a look at the Bluetooth specification, and discovered a problem they call the KNOB attack, Key Negotiation Of Bluetooth.

This is actually one of the simpler vulnerabilities to understand. Randomly generated keys are only as good as the entropy that goes into the key generation. The Bluetooth specification allows negotiating how many bytes of entropy are used in generating the shared session key. By necessity, this negotiation happens before the communication is encrypted. The real weakness here is that the specification lists a minimum entropy of 1 byte. This means 256 possible initial states, far within the realm of brute-forcing in real time.

The attack, then, is to essentially man-in-the-middle the beginning of a Bluetooth connection, and force that entropy length to a single byte. That’s essentially it. From there, a bit of brute forcing results in the Bluetooth session key, giving the attacker complete access to the encrypted stream.
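To get a feel for how little work 256 candidates is, here is a toy Python sketch. It is not Bluetooth’s real key derivation or cipher. The hash-based keystream, the function names, and the sample message are all stand-ins chosen for illustration. It only demonstrates that a key with one byte of entropy falls to an exhaustive search once the attacker can guess a few bytes of plaintext.

```python
import hashlib


def keystream(key_byte, length):
    """Toy keystream derived from a single byte (NOT Bluetooth's real cipher)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(bytes([key_byte, counter])).digest()
        counter += 1
    return out[:length]


def xor_crypt(data, key_byte):
    """Encrypt or decrypt by XORing the data with the toy keystream."""
    return bytes(d ^ k for d, k in zip(data, keystream(key_byte, len(data))))


def brute_force(ciphertext, known_prefix):
    """Try all 256 keys; keep those whose plaintext starts as expected."""
    return [k for k in range(256)
            if xor_crypt(ciphertext, k).startswith(known_prefix)]


secret_key = 0xA7                       # the single byte of "entropy"
captured = xor_crypt(b"HELLO headset, play music", secret_key)
candidates = brute_force(captured, b"HELLO")
print([hex(k) for k in candidates])
```

The whole search is 256 trial decryptions, which any laptop finishes in well under a millisecond, so "real time" is no exaggeration.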

One last note, this isn’t an implementation vulnerability, it’s a specification vulnerability. If your device properly implements the Bluetooth protocol, it’s vulnerable.

CenturyLink Unlinked

You may not be familiar with CenturyLink, but it maintains one of the backbone fiber networks serving telephone and internet connectivity. In December 2018, CenturyLink had a large outage affecting its fiber network, most notably disrupting 911 services for many across the United States for 37 hours. The incident report was released on Monday, and it’s… interesting.

Continue reading “This Week In Security: KNOB, Old Scams Are New Again, 0-days, Backdoors, And More”