Virtual Reality in woodworking sounds like a recipe for disaster—or at least a few missing fingers. But [The Swedish Maker] decided to put this concept to the test, diving into a full woodworking project while wearing a Meta Quest 3. You can check out the full experiment here, but let’s break down the highs, lows, and slightly terrifying moments of this unconventional build.

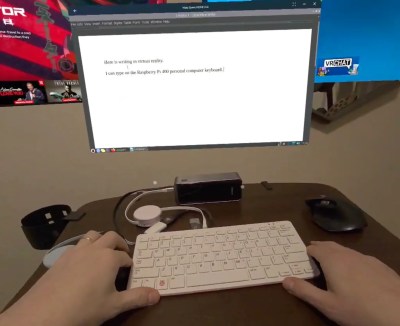

The plan: complete a full furniture build while using the VR headset for everything, from sketching ideas to cutting plywood. The Meta Quest 3’s passthrough mode provided a semi-transparent AR view, allowing [The Swedish Maker] to see real-world tools while overlaying digital plans. Sounds futuristic, right? Well, the reality was more like a VR fever dream. Depth perception was off, measuring was a struggle, and working through the headset’s display lag was nauseating at best. Yet, despite the warped visuals, the experiment uncovered some surprising advantages, like the ability to overlay PDFs in real time without constantly running back to a computer.

So is VR the future of woodworking? If you’re a woodworking novice, you might want to steer clear of VR and read up on the basics first. For the more seasoned: maybe, once headsets evolve beyond their current limitations. For now, it’s a hilarious, slightly terrifying experiment that might just inspire the next wave of augmented reality workshops. If you’re more into electronics, we did cover the possibilities with AR some time ago. We’re curious to know your thoughts on this development in the comments!
