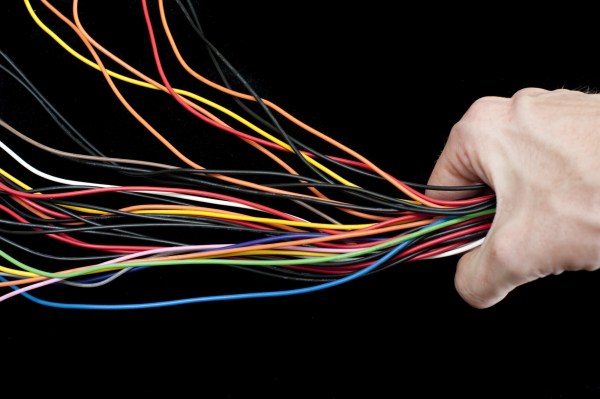

Ah, soldering. It’s great for sticking surface mount parts to a PCB, and it’s really great for holding component legs in a plated through-hole. It also does a pretty great job of holding two spliced wires together.

With that said, it can be a bit of a fussy process. There are all manner of YouTube videos and image tutorials on the “properest” way to splice two wires. Maybe it’s the classic Lineman’s Splice, maybe it’s some NASA-approved method, or maybe it’s one of those ridiculous ones where you braid all the copper strands together, solder it all up, and then realize you’ve forgotten to put the heat shrink on first.

Sure, soldering’s all well and good. But what about some of the other ways to join a pair of wires?

Continue reading “Ask Hackaday: What Do You Do When You Can’t Solder?”