Did [TobiasWeis] build a mirror that’s better at reflecting his image? No, he did not. Did he build a mirror that’s better at reflecting himself? We think so. In addition to these philosophical enhancements, the build itself is really nice.

The display is a Samsung LCD panel with its inconvenient plastic husk torn away and replaced by a new frame made of wood. We like the use of quickly made 3D-printed brackets to hold the wood at a perfect 90 degrees while drilling the holes for the butt joints. A little time with glue, band clamps, and a few layers of paint, and the frame was ready. He tried the DIY route for the two-way mirror, but after some difficulty with bubbles and scratches he decided to just order a glass one.

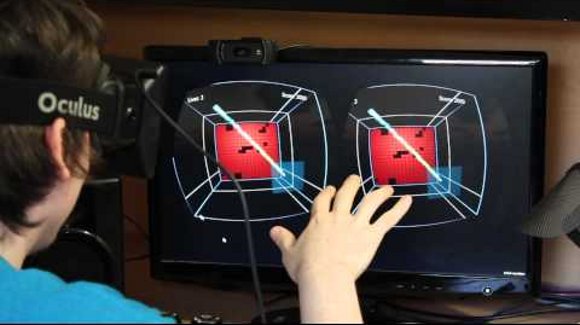

A smart mirror needs an interface, but unless you own stock in Windex (glass cleaner), it is nice to have a way to keep the glass from turning into an OCD sufferer's worst nightmare. This is, oddly, the first justification for the Leap Motion controller we can really buy into: with it, using the mirror does not involve touching the screen. [Tobias] initially thought to use a Raspberry Pi, but instead opted for a mini-computer that had been banging around in a closet for a year or two. It had way more go power, and it wouldn't require him to hack together Leap Motion drivers for the ARM version of Linux.

After that, it was coding and installing modules. He goes into a bit of detail about that, as well as his future plans. Our favorite of those plans is programming the mirror to show a scary face if you say "Bloody Mary" three times in a row.