The gang at Bitcraze is at it again, this time developing Leap Motion control for their Crazyflie quadcopter, as well as releasing a Kinect-driven autopilot proof of concept. If you haven’t seen the Crazyflie before, you may not realize how compact it is: 90mm motor to motor and only 19 grams.

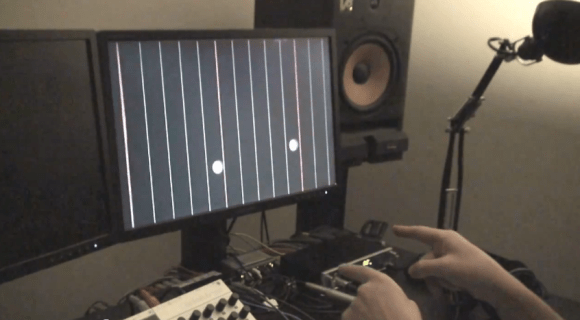

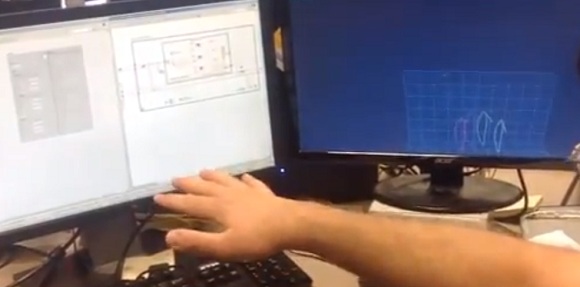

As far as we can tell, the Crazyflie still needs a PC to control it, so the Leap and Kinect are natural follow-ups. Hand control with the Leap Motion is what you'd expect: imagine your open palm controlling it like a marionette, with the height of your hand dictating thrust. The Kinect setup looks the most promising. The team strapped a red ball to the Crazyflie to give the Kinect a trackable object against a white backdrop. The Kinect then monitors the quadcopter while a user steers via mouse clicks. Separate PID controllers correct the roll, pitch and thrust to move the Crazyflie from its current coordinates to a new setpoint chosen by a click or a drag. Videos of both Leap and Kinect piloting are below.
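Bitcraze's own controller code isn't reproduced here, but the "separate PID controllers" idea is easy to sketch. Below is a minimal Python illustration of three independent PID loops, one per control output, each pushing a position error toward zero. The axis mapping (horizontal to roll, depth to pitch, vertical to thrust), the gains, the hover thrust and the names `PID` and `PositionController` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class PID:
    """Textbook PID: output = kp*e + ki*integral(e) + kd*de/dt."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


@dataclass
class PositionController:
    """Hypothetical: one PID loop per axis; gains are placeholders, not tuned values."""
    roll_pid: PID = field(default_factory=lambda: PID(0.5, 0.0, 0.2))
    pitch_pid: PID = field(default_factory=lambda: PID(0.5, 0.0, 0.2))
    thrust_pid: PID = field(default_factory=lambda: PID(1.0, 0.1, 0.3))
    hover_thrust: float = 0.5  # assumed normalized thrust that roughly holds altitude

    def step(
        self,
        current: Tuple[float, float, float],
        target: Tuple[float, float, float],
        dt: float,
    ) -> Tuple[float, float, float]:
        """current/target are (horizontal, depth, vertical) positions from the tracker."""
        roll = self.roll_pid.update(target[0], current[0], dt)
        pitch = self.pitch_pid.update(target[1], current[1], dt)
        # Thrust correction is applied on top of the assumed hover thrust.
        thrust = self.hover_thrust + self.thrust_pid.update(target[2], current[2], dt)
        return roll, pitch, thrust
```

Each frame, you would feed the tracked ball position and the clicked setpoint into `step()` and send the resulting roll, pitch and thrust to the quad. Keeping the loops independent is the simplest design; the trade-off is that it ignores coupling between axes, such as tilting the craft reducing its vertical lift.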

Tight on cash but still want to take to the skies? We have two rubber-band-powered devices from earlier this week: the Ornithopter and the hilariously brilliant GoPro Slingshot.